|

GraphEQA: Using 3D Semantic Scene Graphs for Real-time Embodied Question Answering

Saumya Saxena and Blake Buchanan and Chris Paxton and Peiqi Liu and Bingqing Chen and Narunas Vaskevicius and Luigi Palmieri and Jonathan Francis and Oliver Kroemer

@inproceedings{saxena2025grapheqa,

title={GraphEQA: Using 3D Semantic Scene Graphs for Real-time Embodied Question Answering},

author={Saxena, Saumya and Buchanan, Blake and Paxton, Chris and Liu, Peiqi and Chen, Bingqing and Vaskevicius, Narunas and Palmieri, Luigi and Francis, Jonathan and Kroemer, Oliver},

booktitle={Conference on Robot Learning (CoRL)},

year={2025}

}

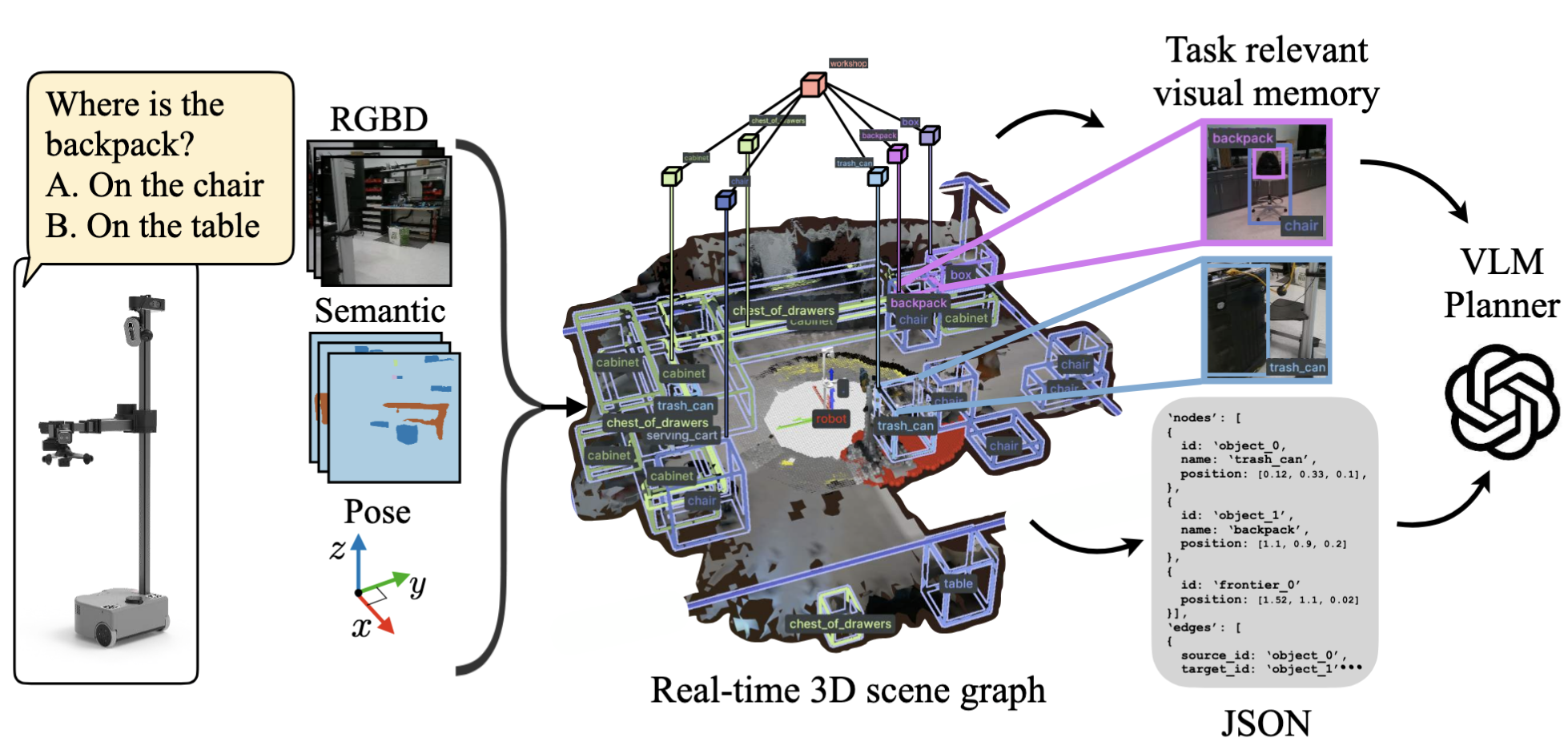

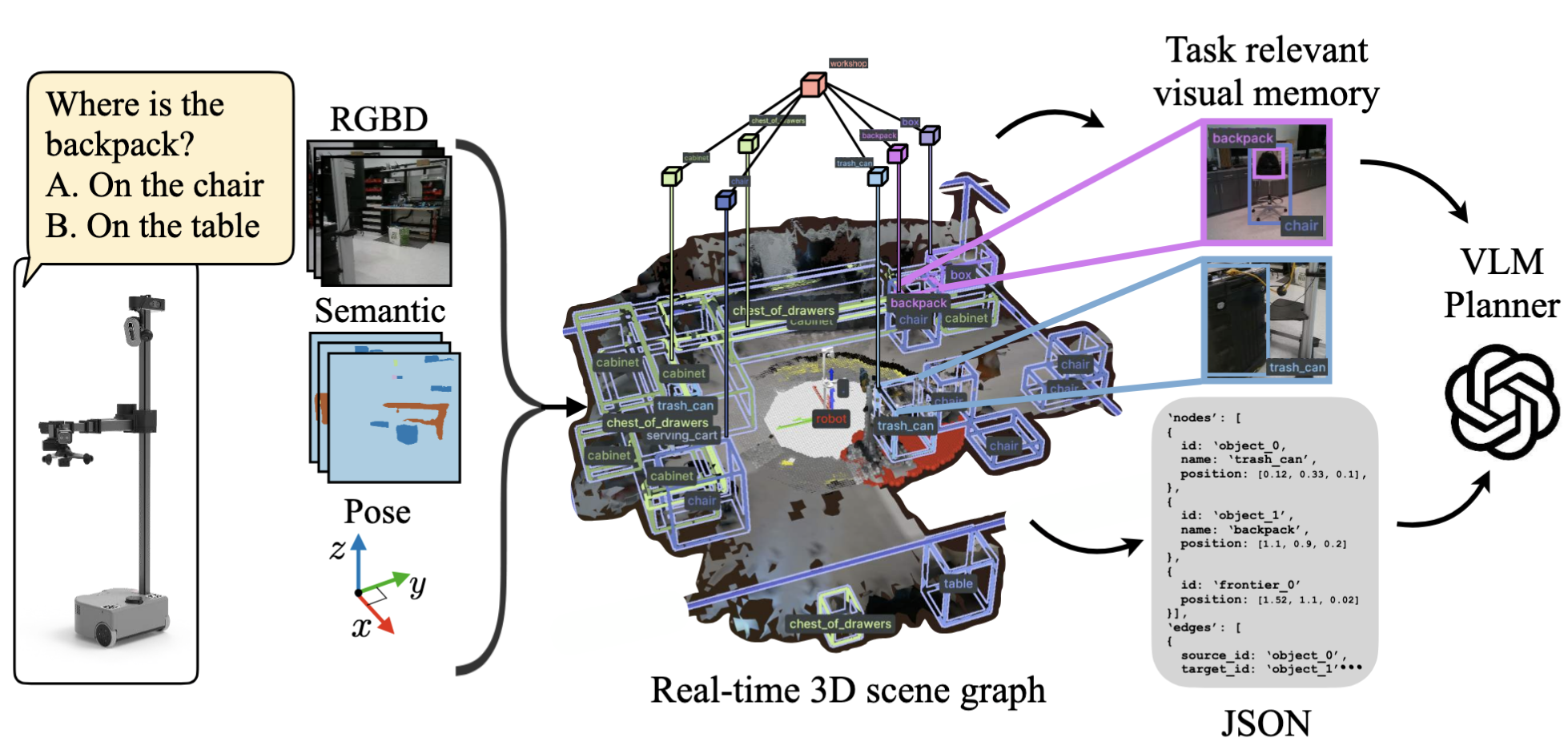

Embodied Question Answering (EQA) tasks require an agent to navigate and understand an unseen 3D environment in order to answer natural-language questions about that environment. This paper proposes GraphEQA, a method that builds and maintains real-time 3D metric-semantic scene graphs (3DSGs) as a compact, multimodal memory combining geometry and semantics for efficient exploration and planning. GraphEQA leverages task-relevant images and the hierarchical structure of 3DSGs to guide exploration and ground Vision-Language Models (VLMs) for real-time question answering. Evaluation in simulated environments shows that the approach improves semantic understanding and task performance for embodied reasoning compared to existing baselines.

Conference on Robot Learning (CoRL), 2025

|

|

Cascaded Diffusion Models for Neural Motion Planning

Mohit Sharma and Adam Fishman and Vikash Kumar and Chris Paxton and Oliver Kroemer

@inproceedings{sharma2025cascadeddiffusion,

title={Cascaded Diffusion Models for Neural Motion Planning},

author={Sharma, Mohit and Fishman, Adam and Kumar, Vikash and Paxton, Chris and Kroemer, Oliver},

booktitle={IEEE International Conference on Robotics and Automation (ICRA)},

year={2025}

}

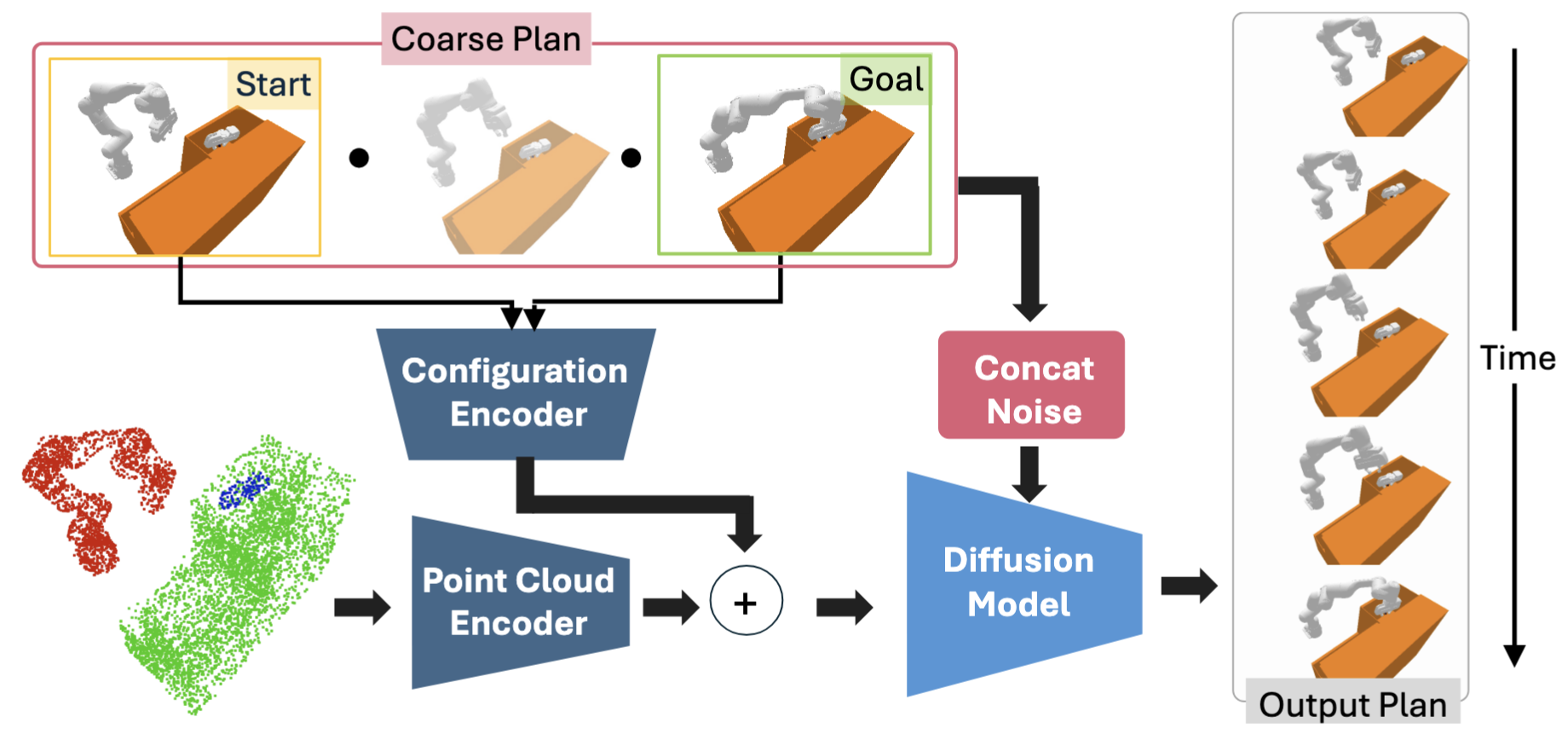

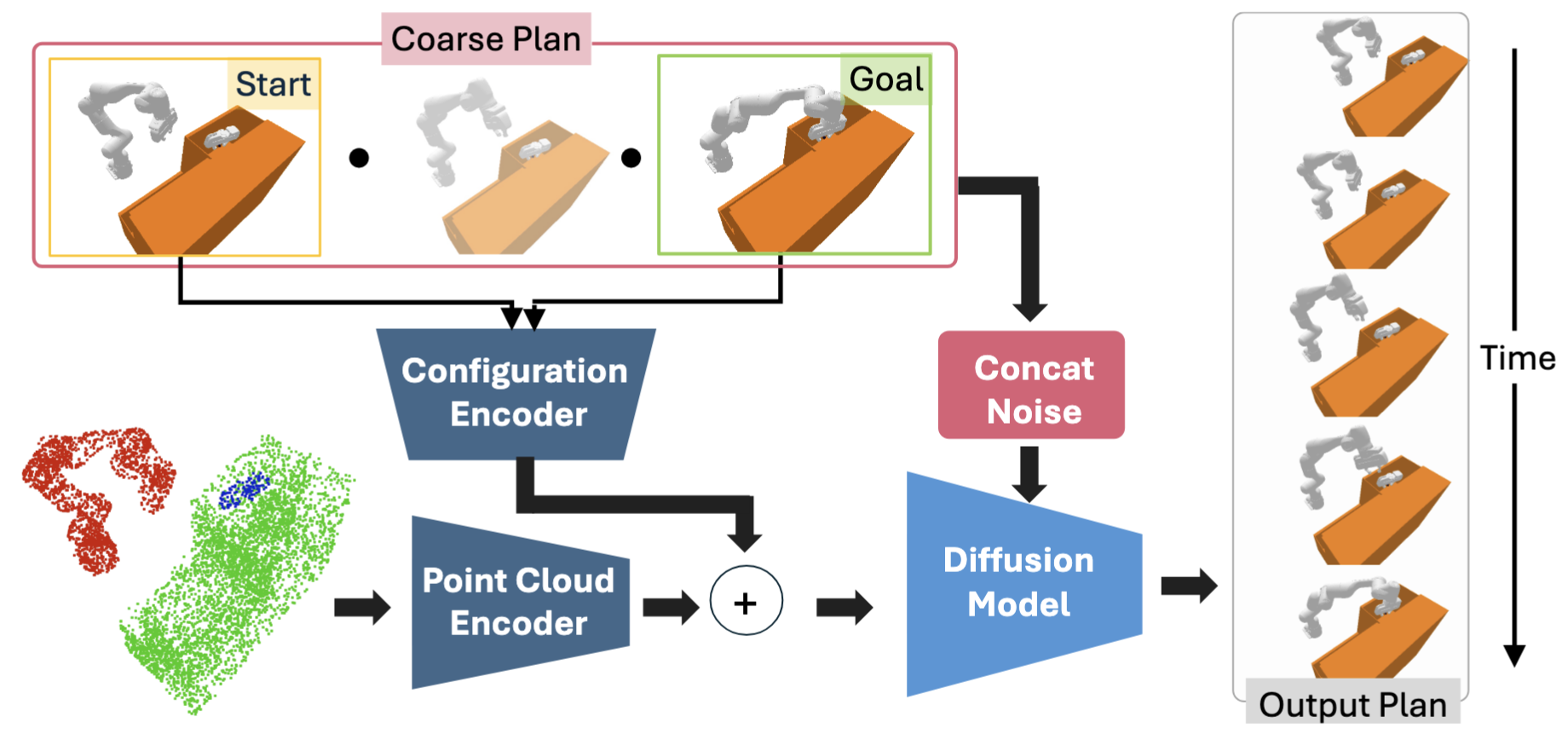

Robots in complex real-world environments must perceive and navigate to goals without collisions, a challenge especially difficult in cluttered scenes with only raw sensory perception. Traditional diffusion policies and other generative models excel at local planning but struggle to enforce all constraints needed for global motion planning. This work introduces cascaded diffusion models that hierarchically unify global prediction and local refinement with online plan repair to generate full, collision-free trajectories. By combining high-level diffusion for overall path structure with lower-level refinement models, the approach produces trajectories that outperform a wide range of baselines across navigation and manipulation tasks.

IEEE International Conference on Robotics and Automation (ICRA), 2025

|

|

Autonomous Sensor Exchange and Calibration for Cornstalk Nitrate Monitoring Robot

Janice Seungyeon Lee and Thomas Detlefsen and Shara Lawande and Saudamini Ghatge and Shrudhi Ramesh Shanthi and Sruthi Mukkamala and George Kantor and Oliver Kroemer

@inproceedings{lee2025autonomoussensor,

title={Autonomous Sensor Exchange and Calibration for Cornstalk Nitrate Monitoring Robot},

author={Lee, Janice Seungyeon and Detlefsen, Thomas and Lawande, Shara and Ghatge, Saudamini and Shanthi, Shrudhi Ramesh and Mukkamala, Sruthi and Kantor, George and Kroemer, Oliver},

booktitle={IEEE International Conference on Robotics and Automation (ICRA)},

pages={16803--16809},

year={2025},

organization={IEEE}

}

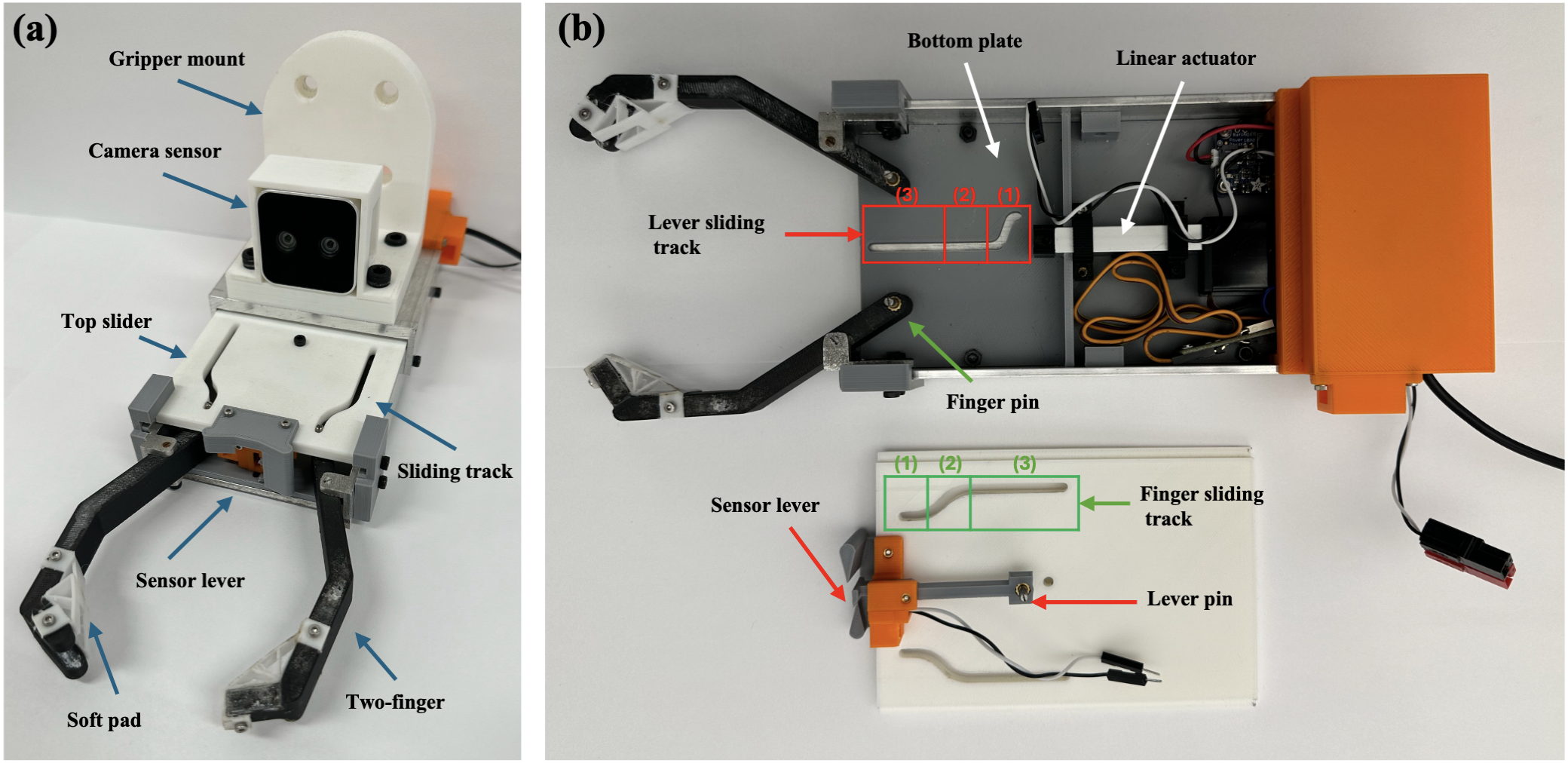

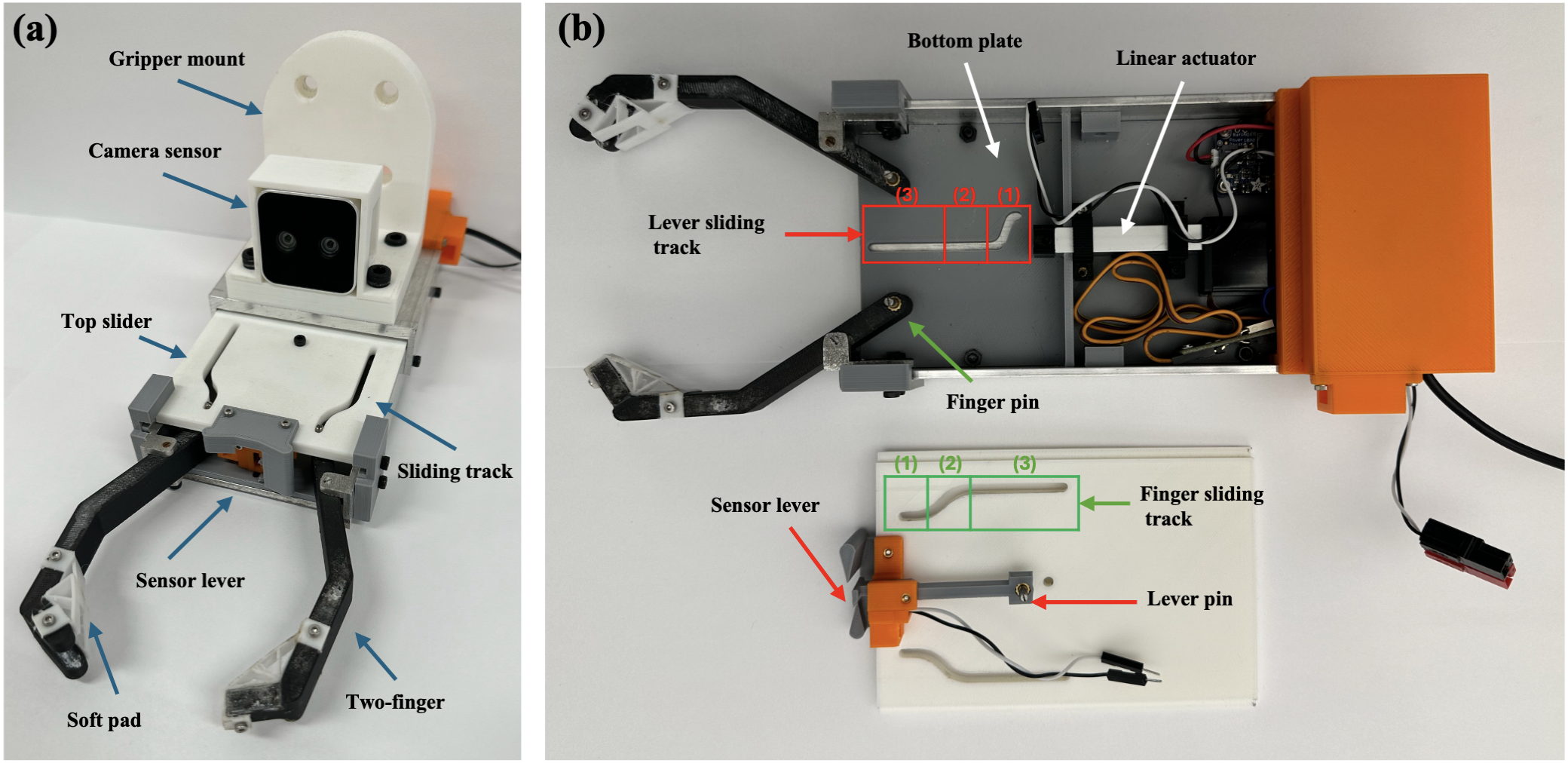

Interactive sensors are an important component of robotic systems but often require manual replacement due to wear and tear. This paper presents an autonomous sensor exchange and calibration system for an agricultural crop monitoring robot that inserts a nitrate sensor into cornstalks. A novel gripper and replacement mechanism with a funneling design enable efficient and reliable sensor exchanges. An on-board calibration station provides in-field sensor cleaning and calibration for consistent nitrate readings, and the system was deployed in a real cornfield where it successfully inserted nitrate sensors with a high measured success rate. The integration of exchange and calibration mechanisms enhances autonomy for long-term robot operation in agricultural environments.

IEEE International Conference on Robotics and Automation (ICRA), 2025

|

|

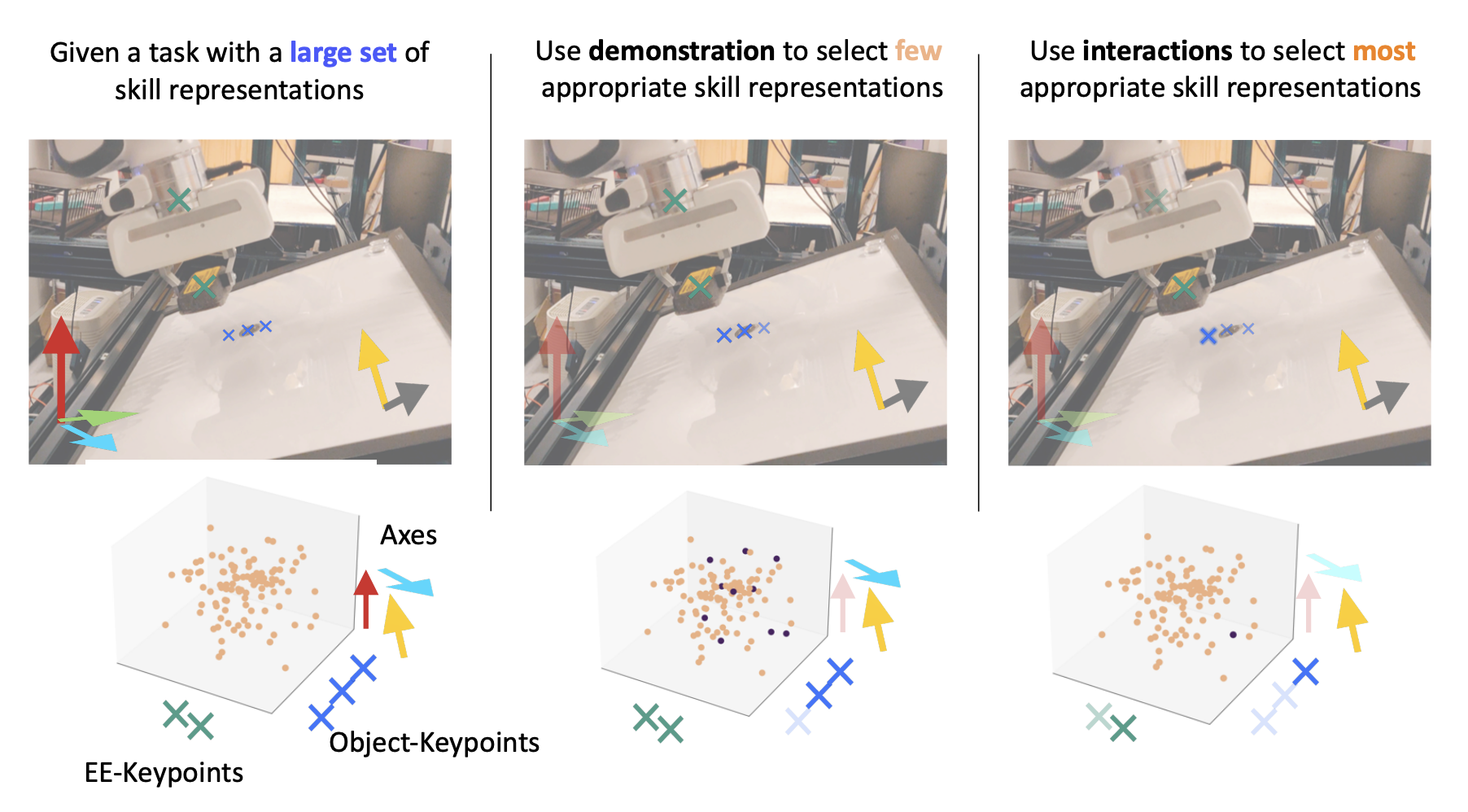

Grounded Task Axes: Zero-Shot Semantic Skill Generalization Via Task-Axis Controllers and Visual Foundation Models

M. Yunus Seker and Shobhit Aggarwal and Oliver Kroemer

@inproceedings{seker2025groundedtaskaxes,

title={Grounded Task Axes: Zero-Shot Semantic Skill Generalization Via Task-Axis Controllers and Visual Foundation Models},

author={Seker, M. Yunus and Aggarwal, Shobhit and Kroemer, Oliver},

booktitle={IEEE-RAS International Conference on Humanoid Robots (Humanoids)},

year={2025},

pages={791--798}

}

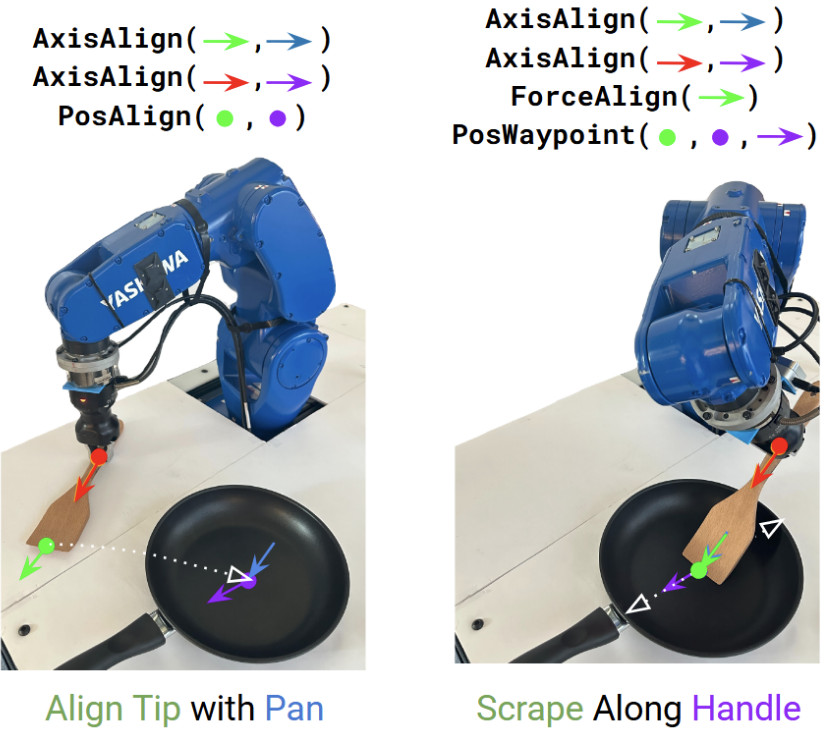

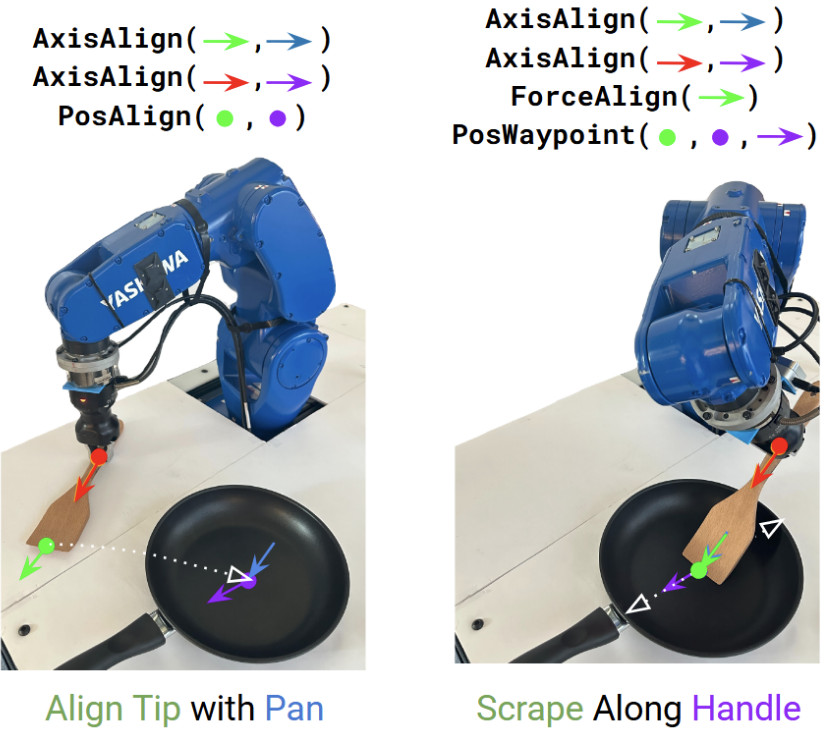

Transferring skills between different objects remains one of the core challenges of open-world robot manipulation. Generalization needs to take into account the high-level structural differences between distinct objects while still maintaining similar low-level interaction control. In this work, we propose an example-based zero-shot approach to skill transfer that decomposes skills into a prioritized set of grounded task-axis controllers (GTACs). Each GTAC defines an adaptable controller, such as a position or force controller, along an axis that is semantically grounded to object keypoints and axes. Zero-shot transfer is achieved by identifying semantically similar grounding features on novel target objects using visual foundation models such as SD-DINO. We validate our approach through real robot experiments including screwing, pouring, and spatula scraping tasks, demonstrating robust controller transfer across diverse object geometries without requiring task-specific training or demonstrations.

IEEE-RAS International Conference on Humanoid Robots (Humanoids), 2025

|

|

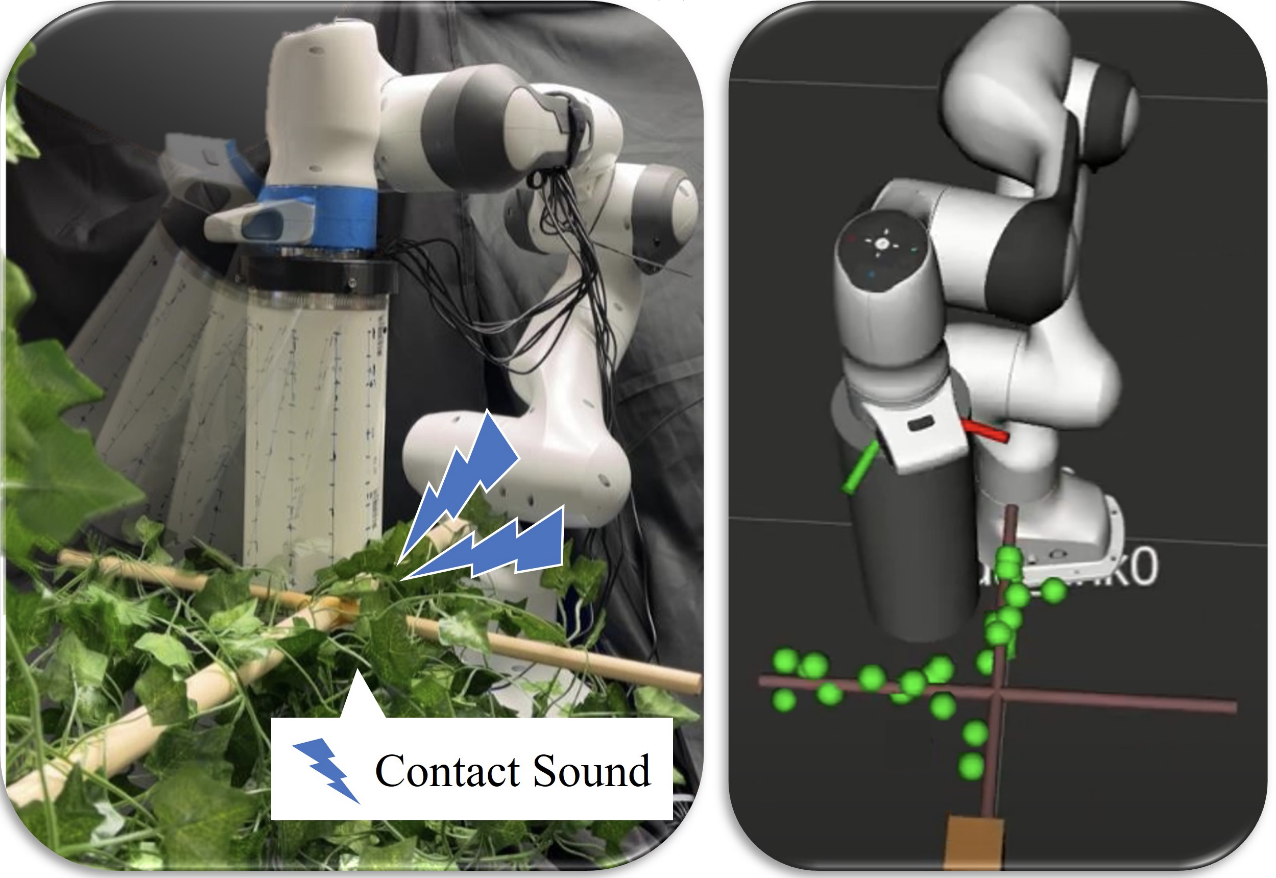

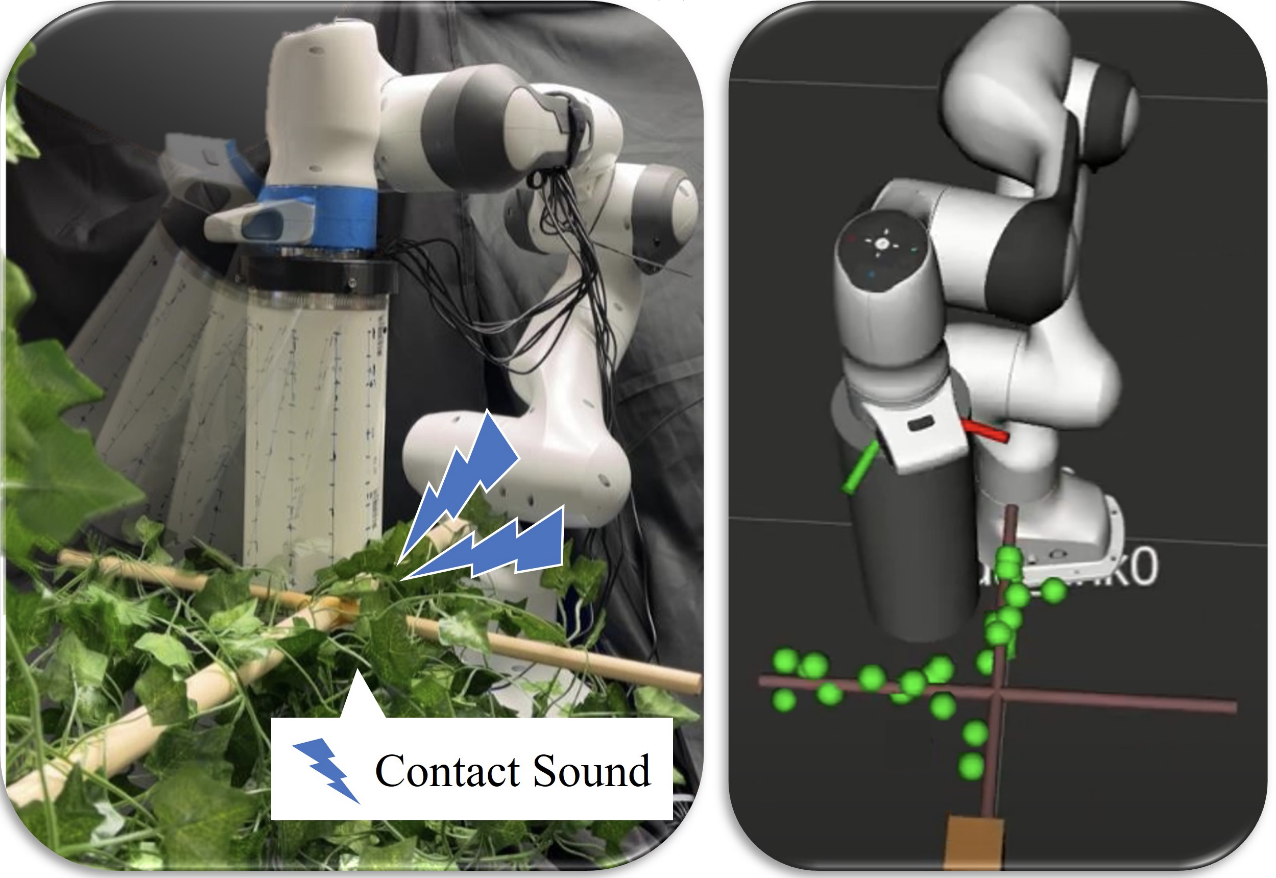

Audio-Visual Contact Classification for Tree Structures in Agriculture

Ryan Spears and Moonyoung Lee and George Kantor and Oliver Kroemer

IEEE-RAS International Conference on Humanoid Robots (Humanoids), 2025

|

|

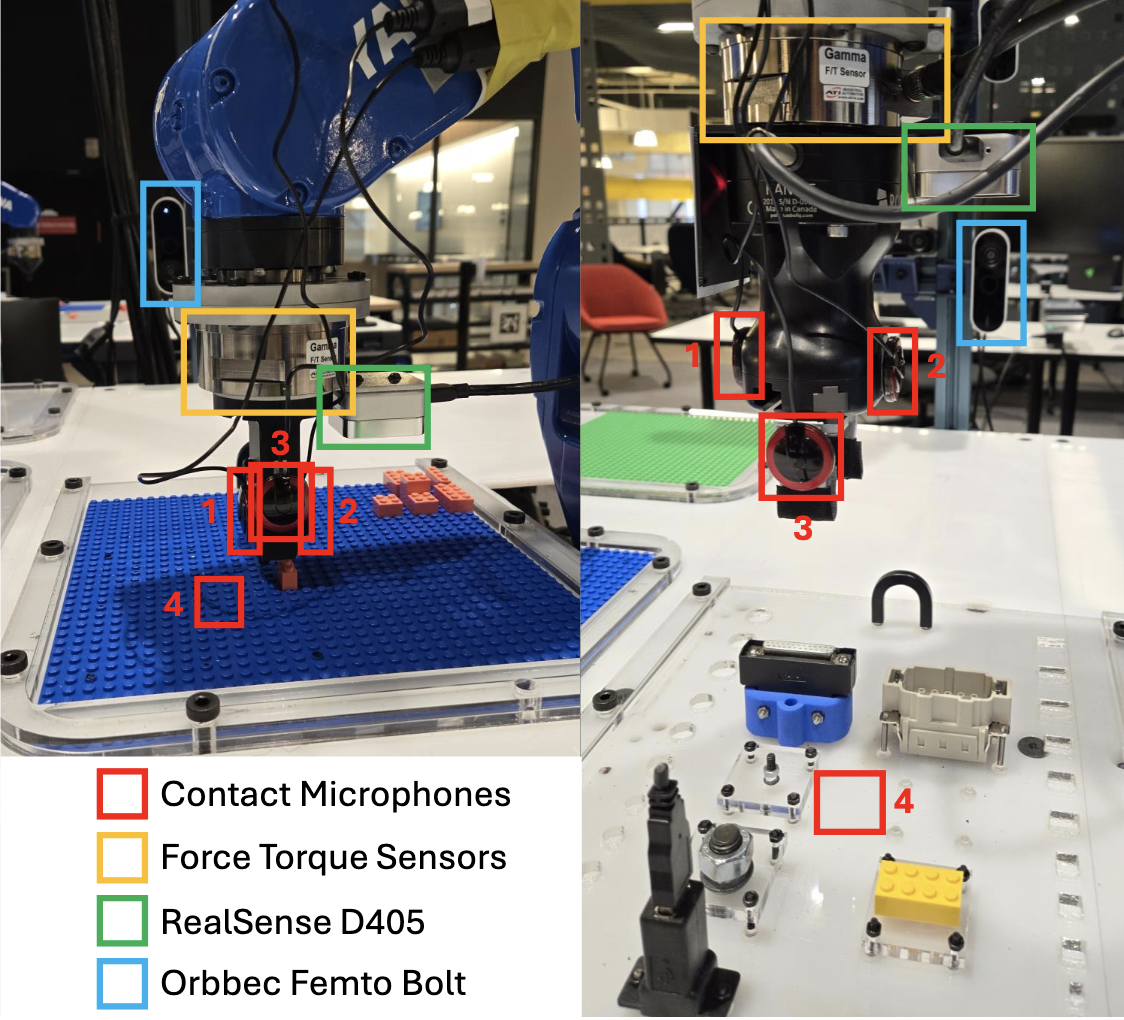

Vibrotactile Sensing for Detecting Misalignments in Precision Manufacturing

Kevin Zhang and Christopher Chang and Shobhit Aggarwal and Manuela Veloso and Zeynep Temel and Oliver Kroemer

@inproceedings{zhang2025vibrotactilesensing,

title={Vibrotactile Sensing for Detecting Misalignments in Precision Manufacturing},

author={Zhang, Kevin and Chang, Christopher and Aggarwal, Shobhit and Veloso, Manuela and Temel, Zeynep and Kroemer, Oliver},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={10408--10415},

year={2025},

organization={IEEE},

doi={10.1109/IROS60139.2025.11246759}

}

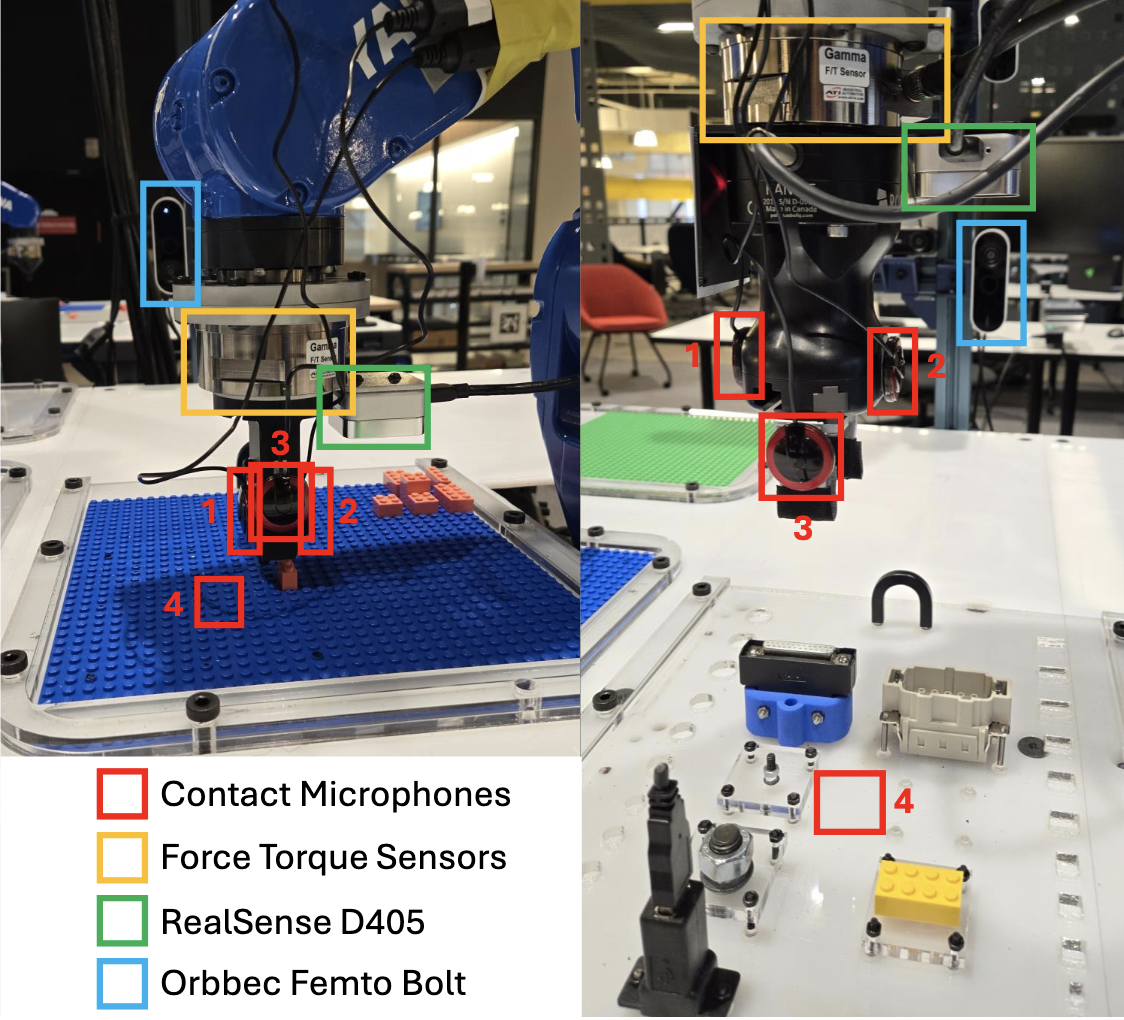

Small and medium-sized enterprises (SMEs) often struggle with automating high-mix, low-volume (HMLV) manufacturing due to the inflexibility and high cost of traditional automation solutions. This paper presents a novel approach to robotic manipulation for HMLV environments that leverages vibrotactile sensing. We propose integrating vibrotactile sensors, which capture subtle vibrations and acoustic signals, to provide real-time feedback during manipulation tasks. This approach enables the robot to detect subtle misalignments, which can assist in refining vision-based policies and improving the robot’s overall manipulation skills. We demonstrate the effectiveness of this method in several representative insertion tasks, showing how vibrotactile feedback can be used to predict success or failure of an insertion task as well as predict initial contact between an object grasped in-hand and the placement location. Our results suggest that vibrotactile sensing offers a promising pathway towards more robust and adaptable robotic systems that can better empower SMEs to embrace automation.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025

|

|

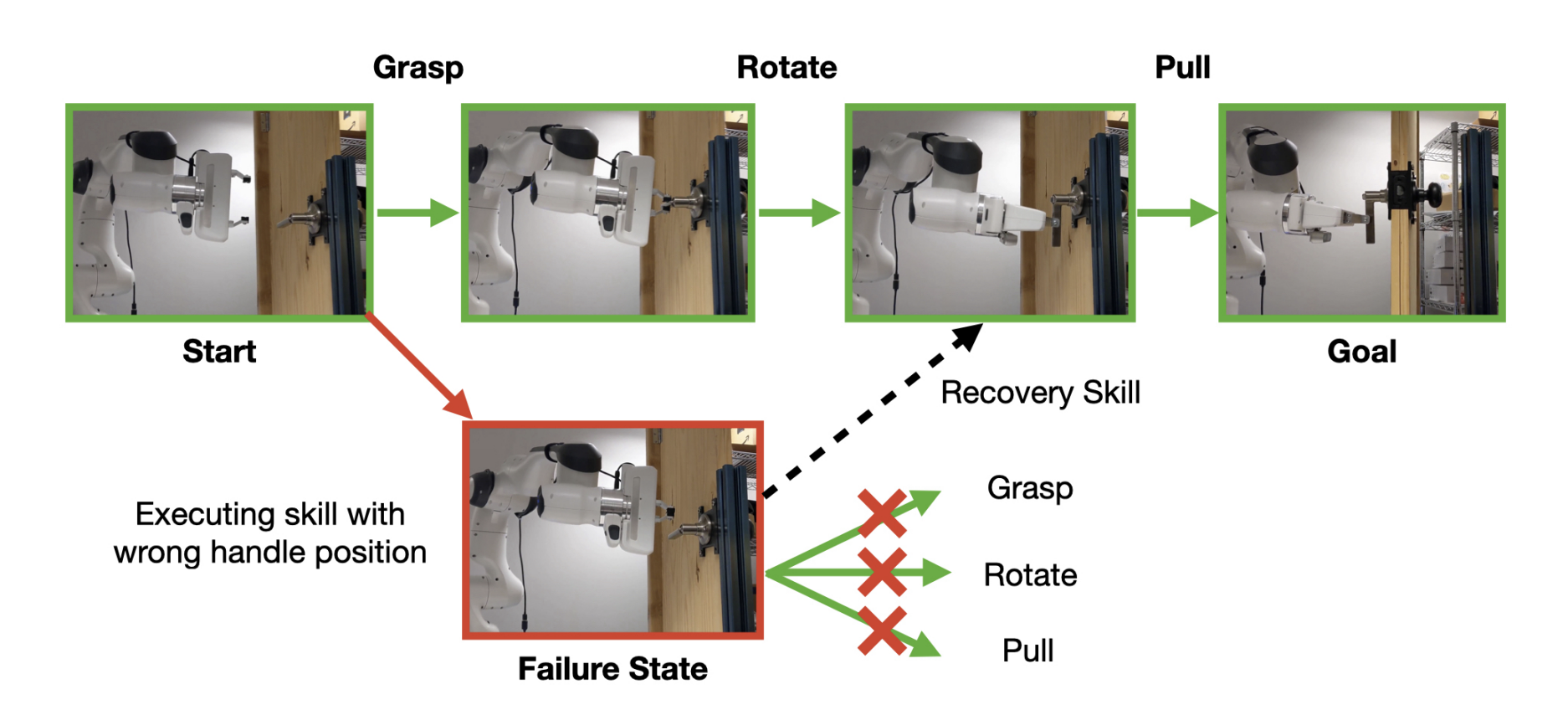

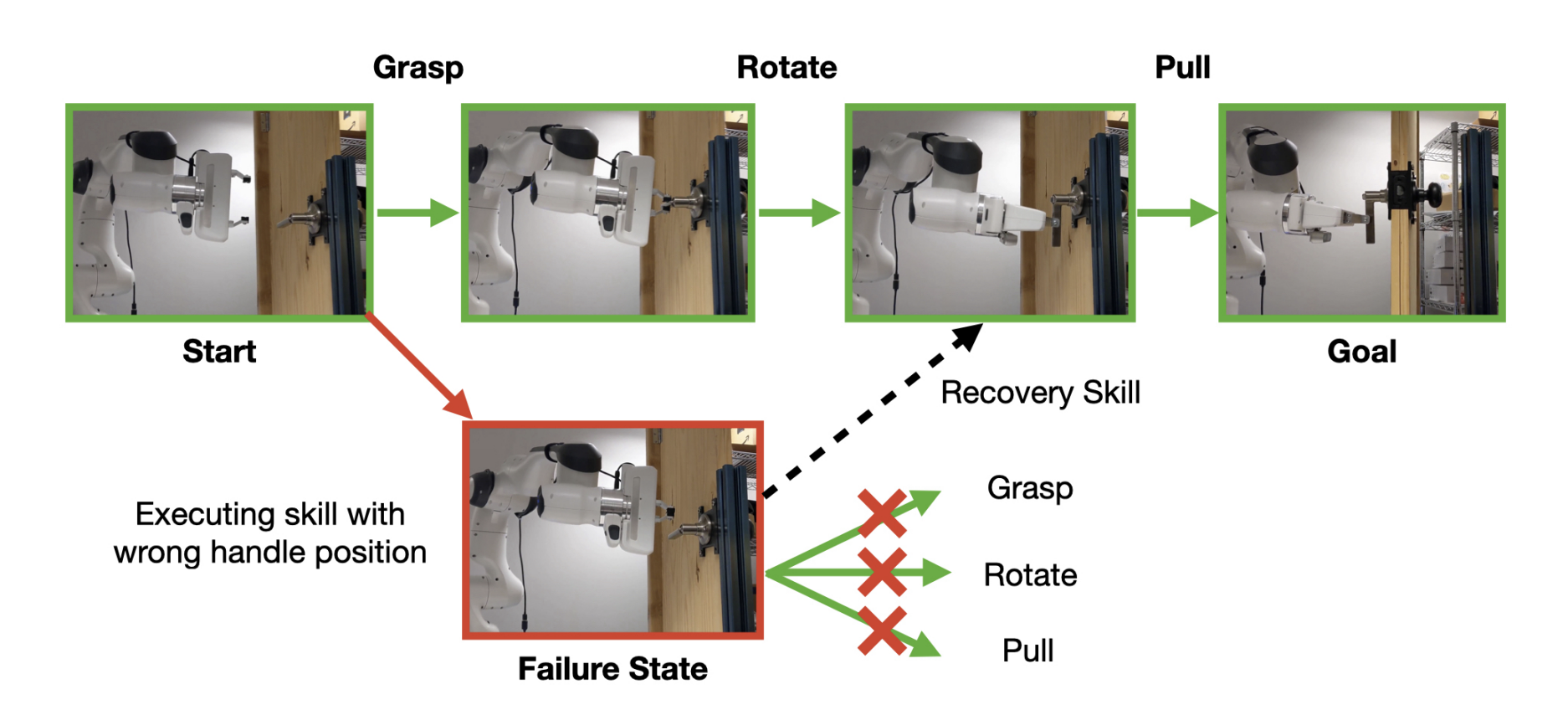

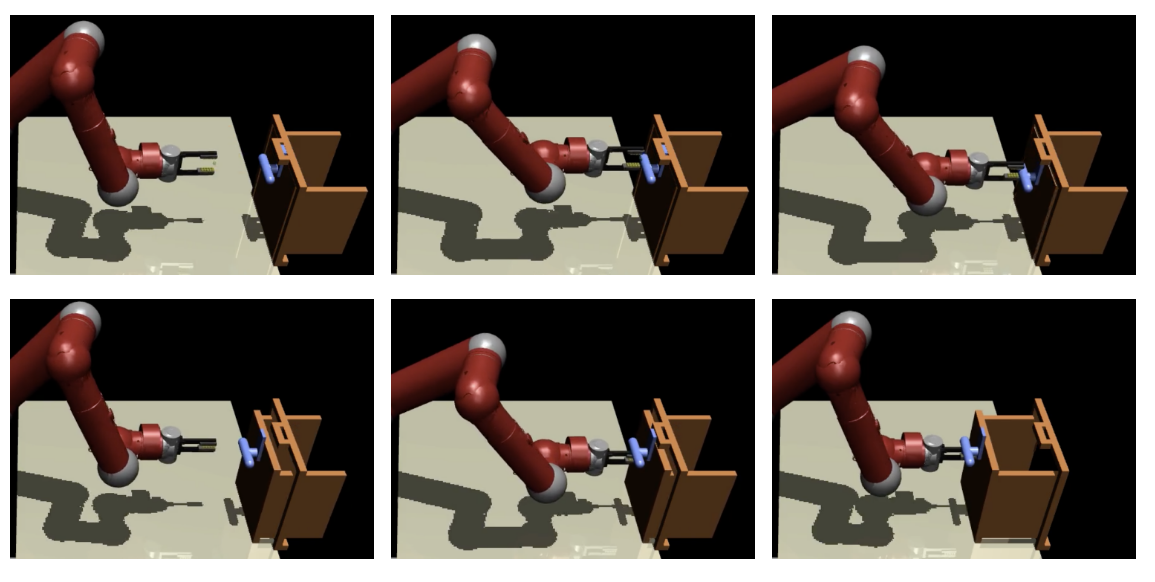

RecoveryChaining: Learning Local Recovery Policies for Robust Manipulation

Shivam Vats and Devesh K. Jha and Maxim Likhachev and Oliver Kroemer and Diego Romeres

@inproceedings{vats2025recoverychaining,

title={RecoveryChaining: Learning Local Recovery Policies for Robust Manipulation},

author={Vats, Shivam and Jha, Devesh K. and Likhachev, Maxim and Kroemer, Oliver and Romeres, Diego},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages={9776--9783},

year={2025},

organization={IEEE}

}

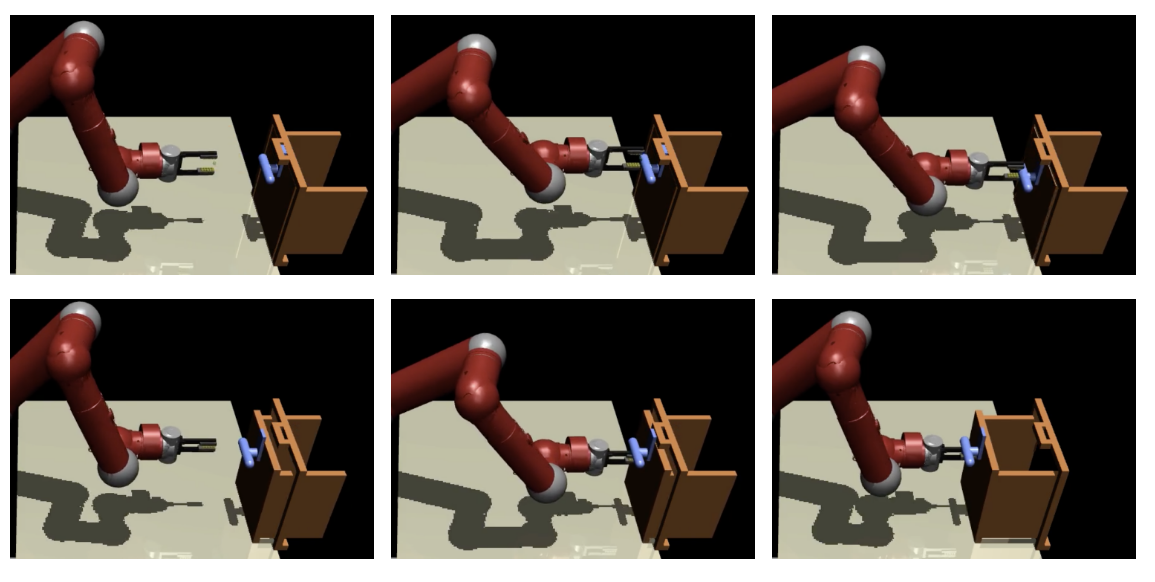

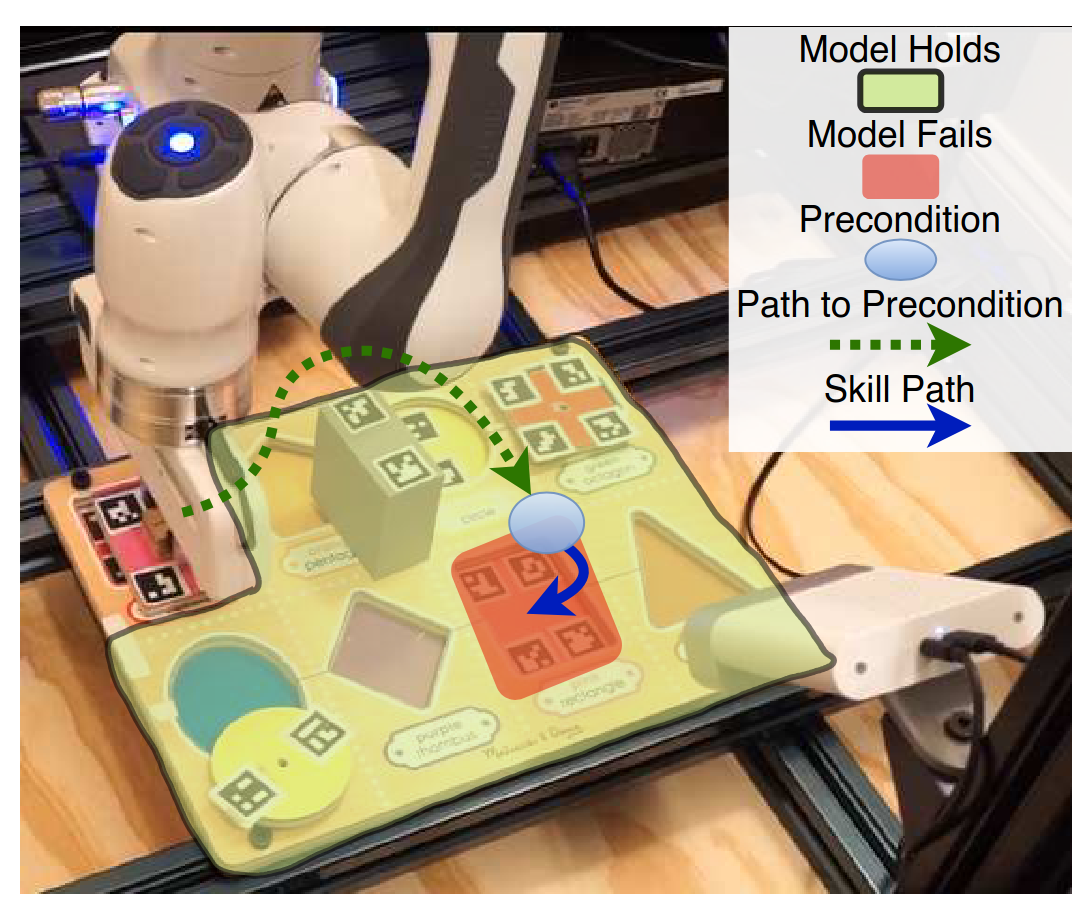

Model-based planners and controllers can efficiently optimize diverse objectives and generalize to long-horizon manipulation tasks, but they often fail due to model inaccuracies or unexpected disturbances. This paper introduces RecoveryChaining, a hierarchical reinforcement learning framework that learns local recovery policies triggered when a failure is detected based on sensory observations. The recovery policy uses a hybrid action space combining primitive robot actions with nominal options drawn from existing model-based controllers, enabling the system to determine how to recover, when to switch back to a nominal controller, and which controller to choose, even under sparse rewards. RecoveryChaining is evaluated on several multi-step manipulation tasks with sparse rewards, showing significantly more robust recovery policies than baseline methods, and the learned policies successfully transfer from simulation to a physical robot.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2025

|

|

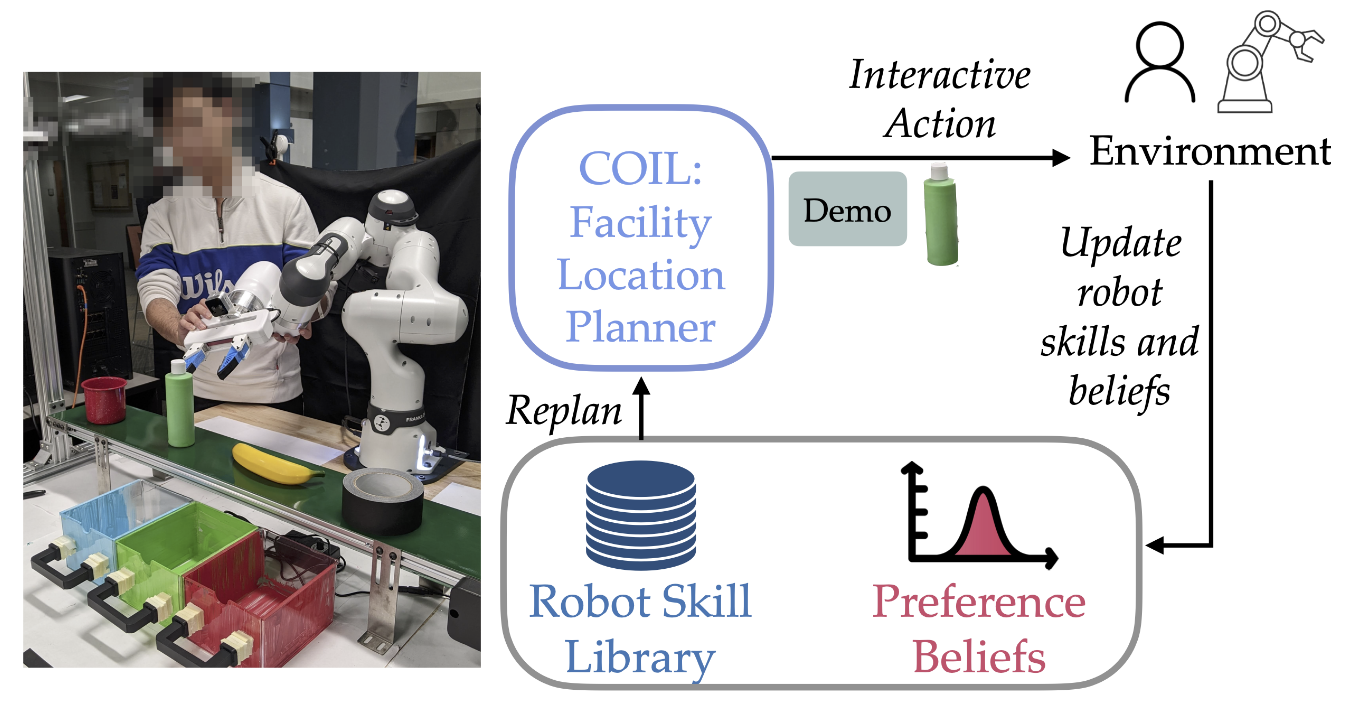

Optimal Interactive Learning on the Job via Facility Location Planning

Shivam Vats and Michelle Zhao and Patrick Callaghan and Mingxi Jia and Maxim Likhachev and Oliver Kroemer and George Konidaris

@inproceedings{vats2025optimalinteractive,

title={Optimal Interactive Learning on the Job via Facility Location Planning},

author={Vats, Shivam and Zhao, Michelle and Callaghan, Patrick and Jia, Mingxi and Likhachev, Maxim and Kroemer, Oliver and Konidaris, George},

booktitle={Robotics: Science \& Systems (R:SS)},

year={2025}

}

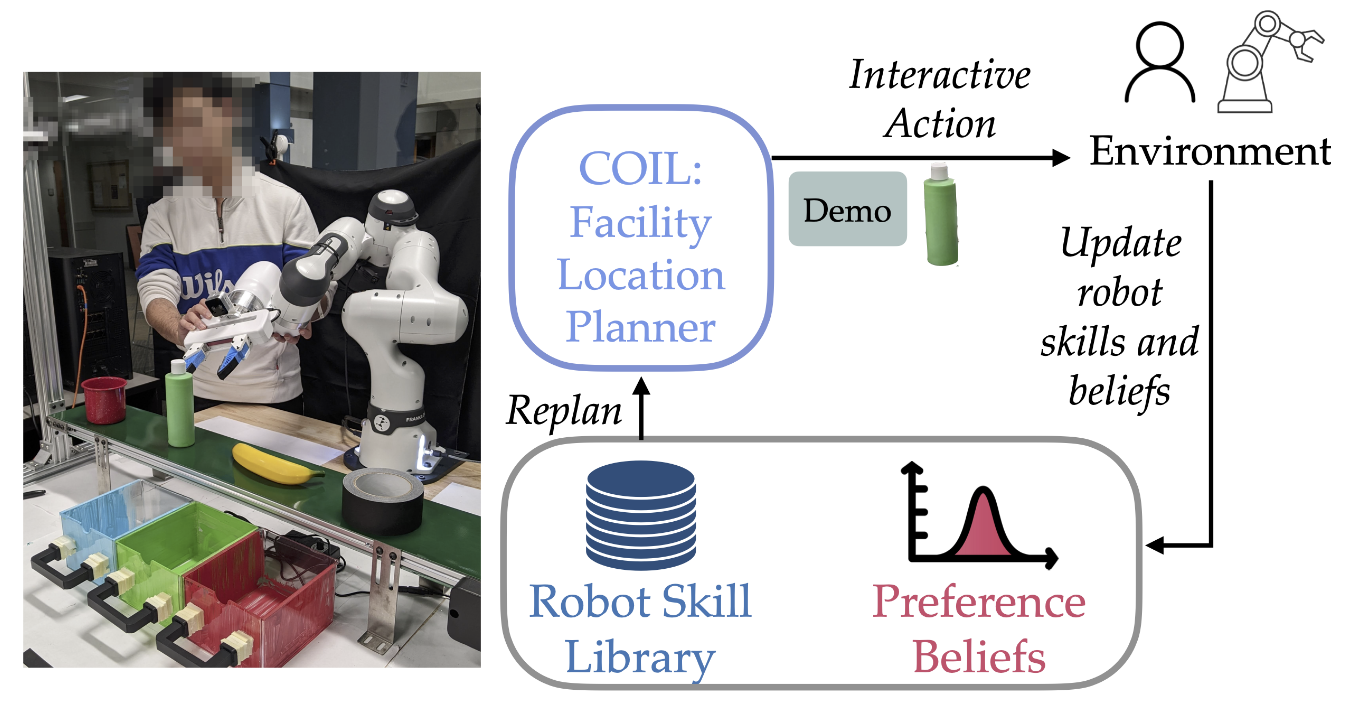

Collaborative robots must continually adapt to novel tasks and user preferences without overburdening human collaborators. Prior interactive robot learning methods typically focus on single-task scenarios and fall short in sustained, multi-task collaboration. This paper proposes COIL (Cost-Optimal Interactive Learning), a multi-task interaction planner that minimizes human effort across a sequence of tasks by strategically selecting among three query types: skill queries, preference queries, and help queries. When user preferences are known, the COIL optimization reduces to an uncapacitated facility location (UFL) problem, enabling bounded-suboptimal planning in polynomial time using established approximation algorithms. The formulation is extended to handle uncertainty in user preferences via a one-step belief space planner that incorporates the same efficient approximation routines. Simulated and real robot experiments on manipulation tasks demonstrate that the approach significantly reduces human work while maintaining task success.

Robotics: Science & Systems (R:SS), 2025
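The uncapacitated facility location (UFL) problem named in the abstract above can be illustrated with a small sketch. This is not the paper's planner: it uses exhaustive search on a toy instance rather than the approximation algorithms the abstract refers to, and the mapping of "facilities" to teachable skills (plus a zero-cost "ask the human" fallback) and of "clients" to tasks is an assumption made only for illustration. The names `ufl_cost` and `solve_ufl_bruteforce` and all cost values are hypothetical.

```python
from itertools import combinations


def ufl_cost(open_set, open_cost, serve_cost):
    """Opening costs of the chosen facilities plus, for every client,
    the cost of its cheapest open facility.

    serve_cost[j][i] is the cost of serving client j from facility i.
    """
    opening = sum(open_cost[i] for i in open_set)
    serving = sum(min(row[i] for i in open_set) for row in serve_cost)
    return opening + serving


def solve_ufl_bruteforce(open_cost, serve_cost):
    """Exact solution by enumerating every non-empty facility subset.

    Exponential in the number of facilities, so only suitable for
    tiny illustrative instances.
    """
    n = len(open_cost)
    best_set, best_cost = None, float("inf")
    for k in range(1, n + 1):
        for subset in combinations(range(n), k):
            cost = ufl_cost(subset, open_cost, serve_cost)
            if cost < best_cost:
                best_set, best_cost = subset, cost
    return best_set, best_cost


# Hypothetical instance: facilities 0 and 1 are skills that cost 2 and 5
# units of human effort to teach; facility 2 is a free-to-open fallback
# (asking for help) that costs 5 units per task.
open_cost = [2, 5, 0]
serve_cost = [
    [1, 9, 5],  # task 0 is cheap with skill 0
    [1, 9, 5],  # task 1 is cheap with skill 0
    [9, 1, 5],  # task 2 is cheap with skill 1
    [9, 9, 5],  # task 3 is best handled by asking for help
]
print(solve_ufl_bruteforce(open_cost, serve_cost))  # ((0, 2), 14)
```

In this toy instance, teaching skill 1 is not worth its cost because it helps only one task, so the cheapest plan teaches skill 0 and falls back to help for the rest.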

|

|

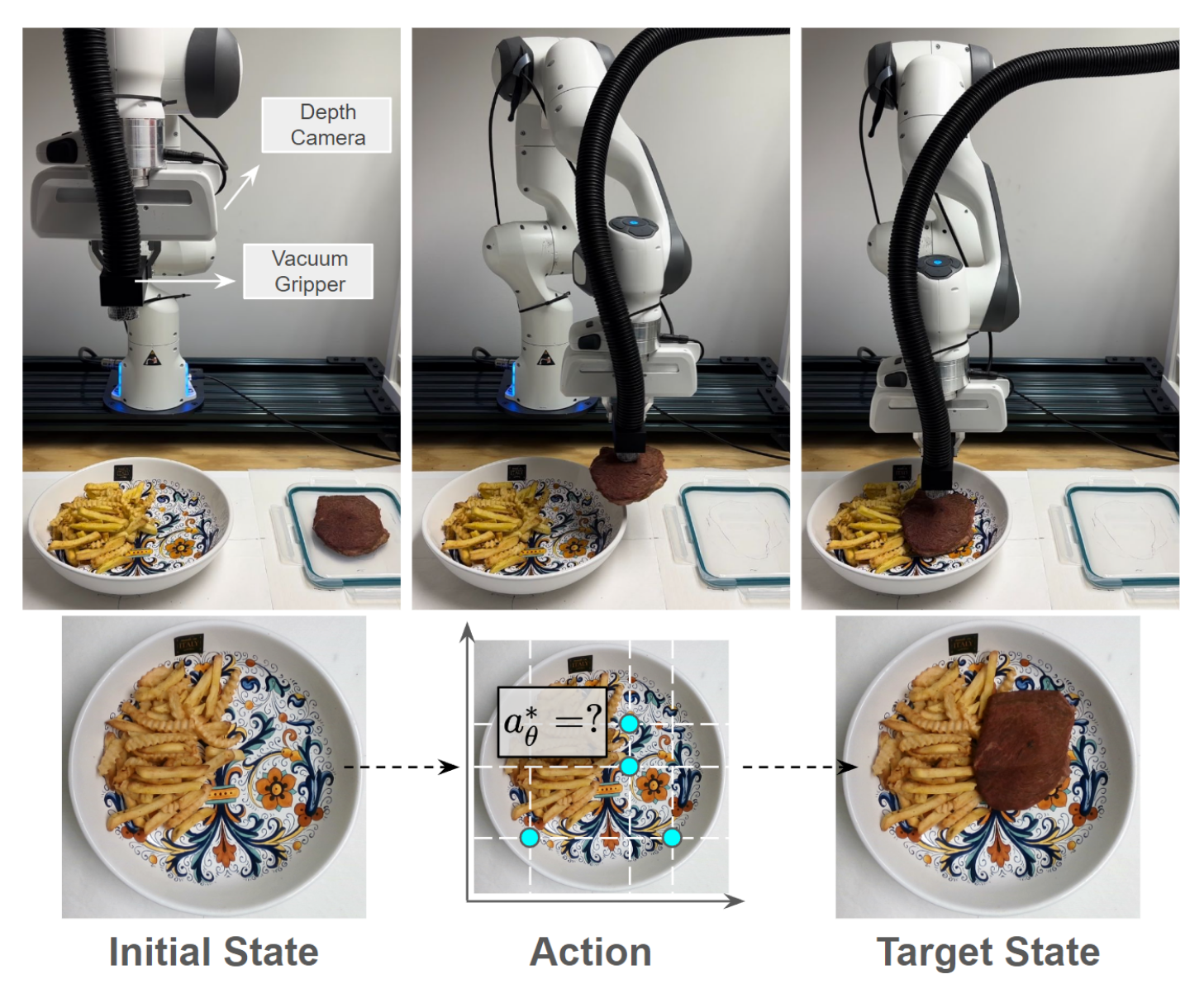

SonicBoom: Contact Localization Using Array of Microphones

Moonyoung Lee and Uksang Yoo and Jean Oh and Jeffrey Ichnowski and George Kantor and Oliver Kroemer

IEEE Robotics and Automation Letters (RA-L), 2025

|

|

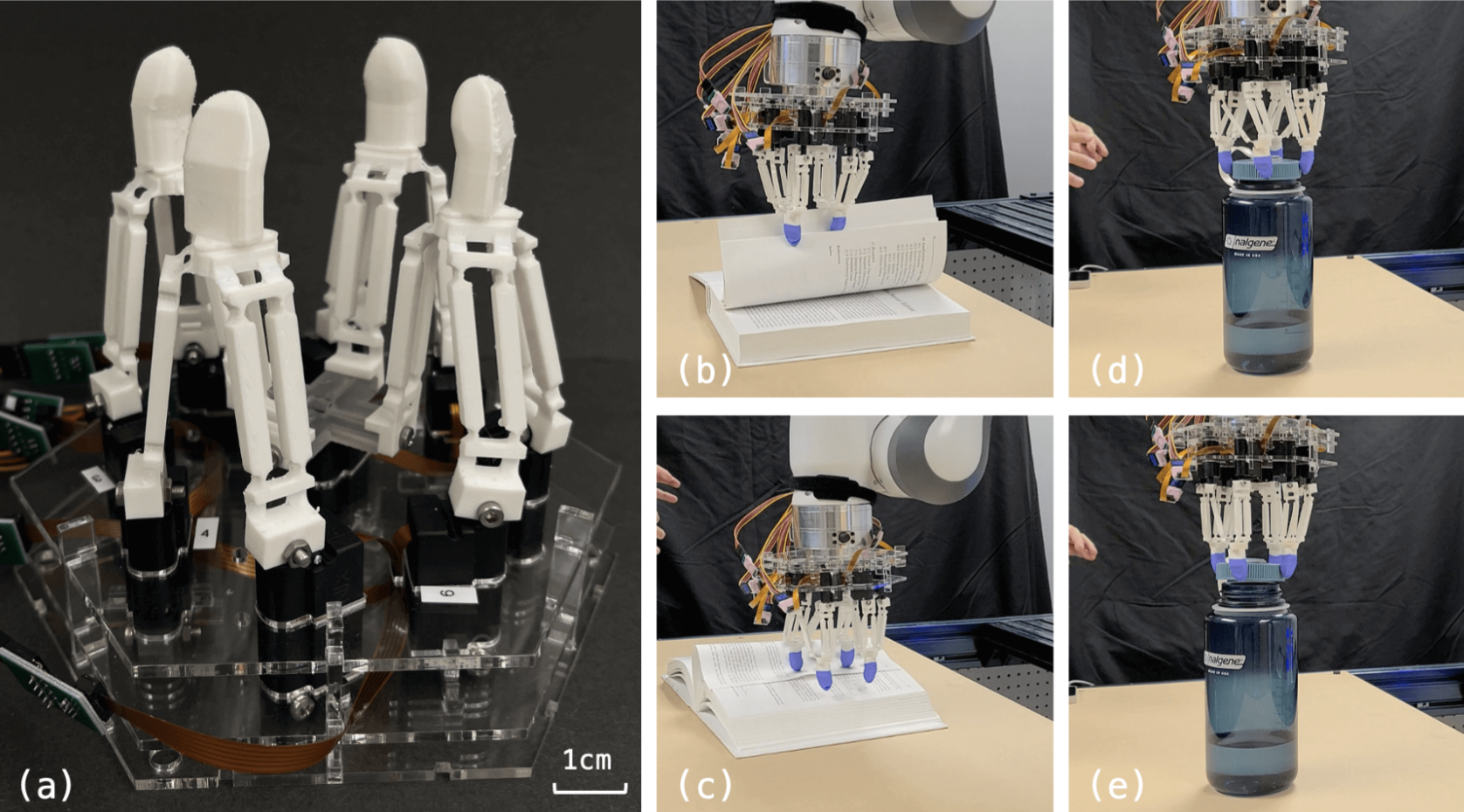

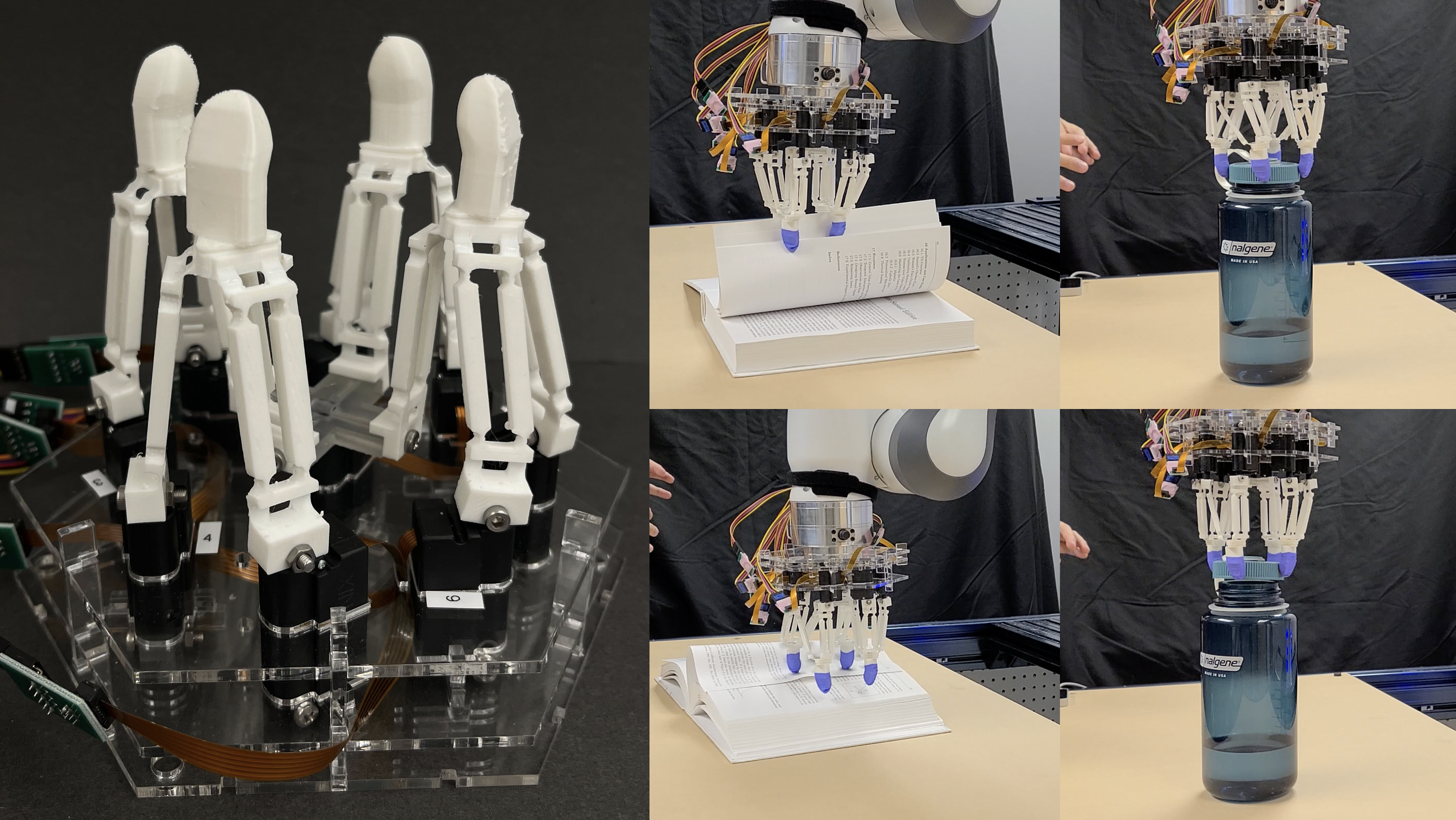

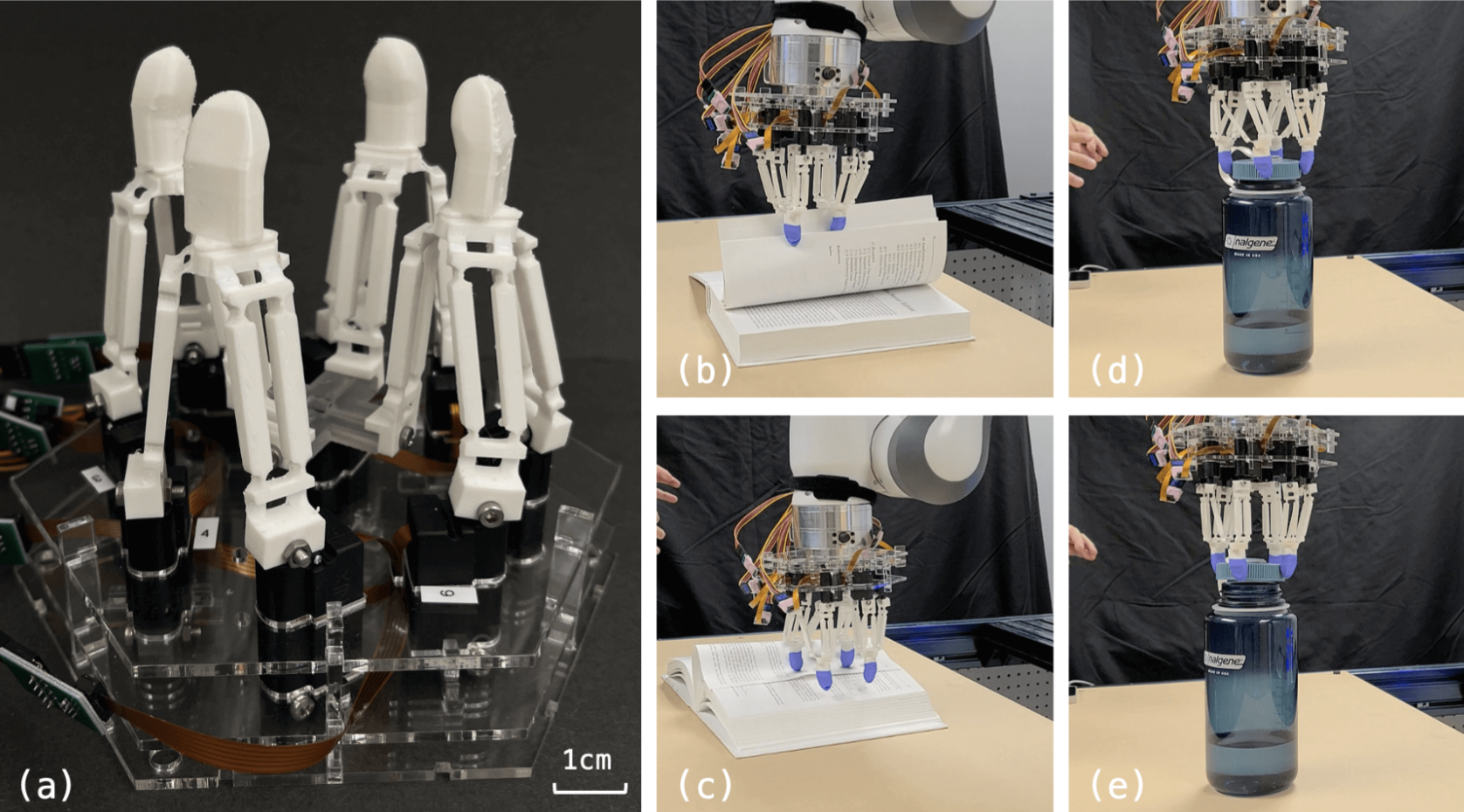

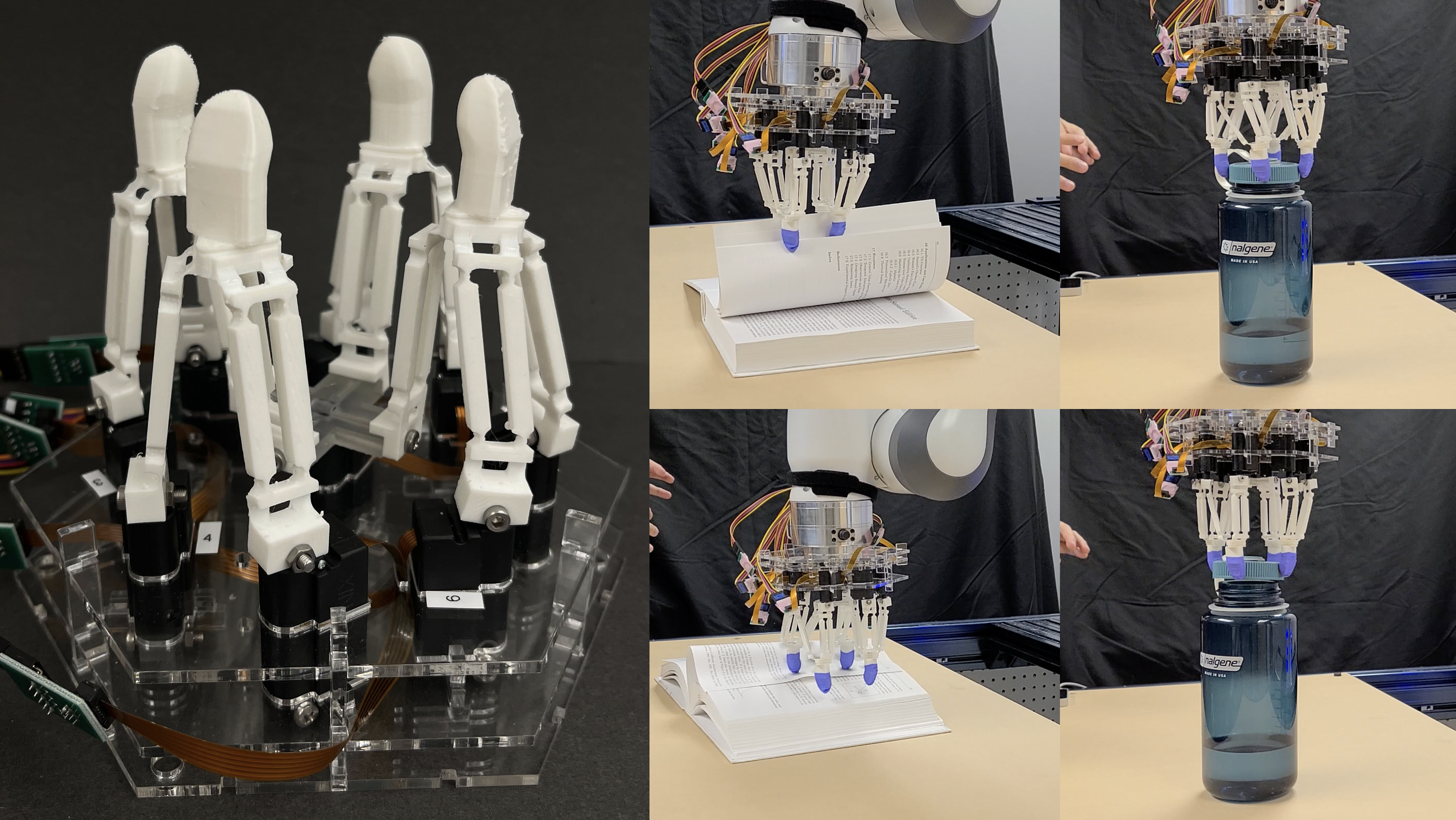

DELTAHANDS: A Synergistic Dexterous Hand Framework Based on Delta Robots

Zilin Si and Kevin Zhang and Oliver Kroemer and F. Zeynep Temel

@article{si2024deltahands,

title={DELTAHANDS: A Synergistic Dexterous Hand Framework Based on Delta Robots},

author={Si, Zilin and Zhang, Kevin and Kroemer, Oliver and Temel, F. Zeynep},

journal={IEEE Robotics and Automation Letters},

volume={9},

number={4},

pages={2301--2308},

year={2024},

publisher={IEEE},

doi={10.1109/LRA.2024.3456789}

}

Dexterous robotic manipulation in unstructured environments can greatly benefit from hand designs that are both highly dexterous and easy to manufacture and control. This paper presents DELTAHANDS, a synergistic dexterous hand framework built using soft delta robots that are modular, reconfigurable, and cost-effective. By leveraging delta robot kinematics and actuation synergies, the framework reduces control complexity while preserving dexterity. We characterize the delta robots’ kinematics, force profiles, and workspace, and validate the design through simulation and real-world grasping and teleoperation tasks that include cloth folding, cap opening, and cable arrangement. The open-source framework supports easy hand reconfiguration for various manipulation tasks.

IEEE Robotics and Automation Letters (RA-L), 2024

|

|

Enhancing Dexterity in Robotic Manipulation via Hierarchical Contact Exploration

Xianyi Cheng and Sarvesh Patil and Zeynep Temel and Oliver Kroemer and Matthew T. Mason

IEEE Robotics and Automation Letters (RA-L), 2024

|

|

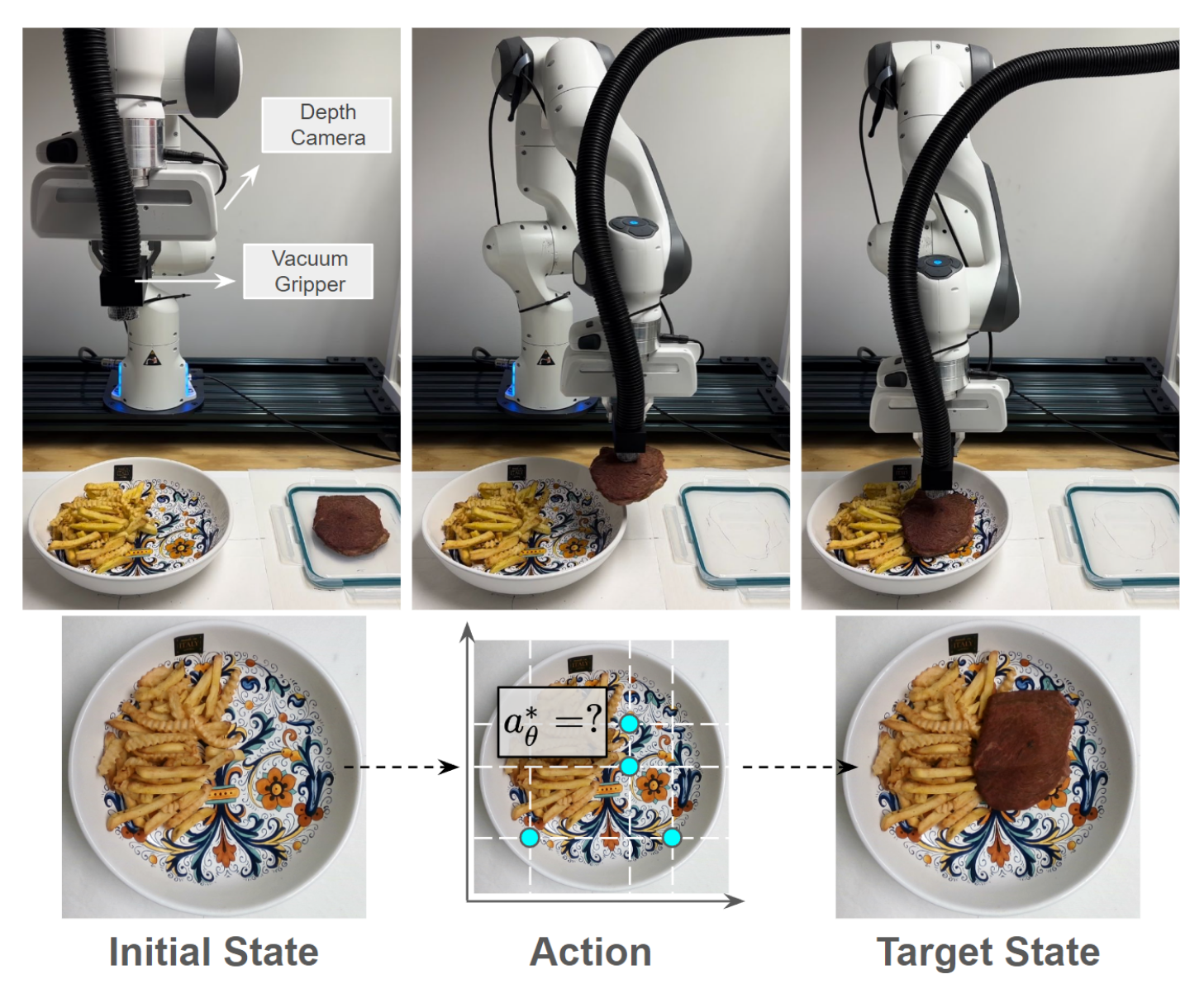

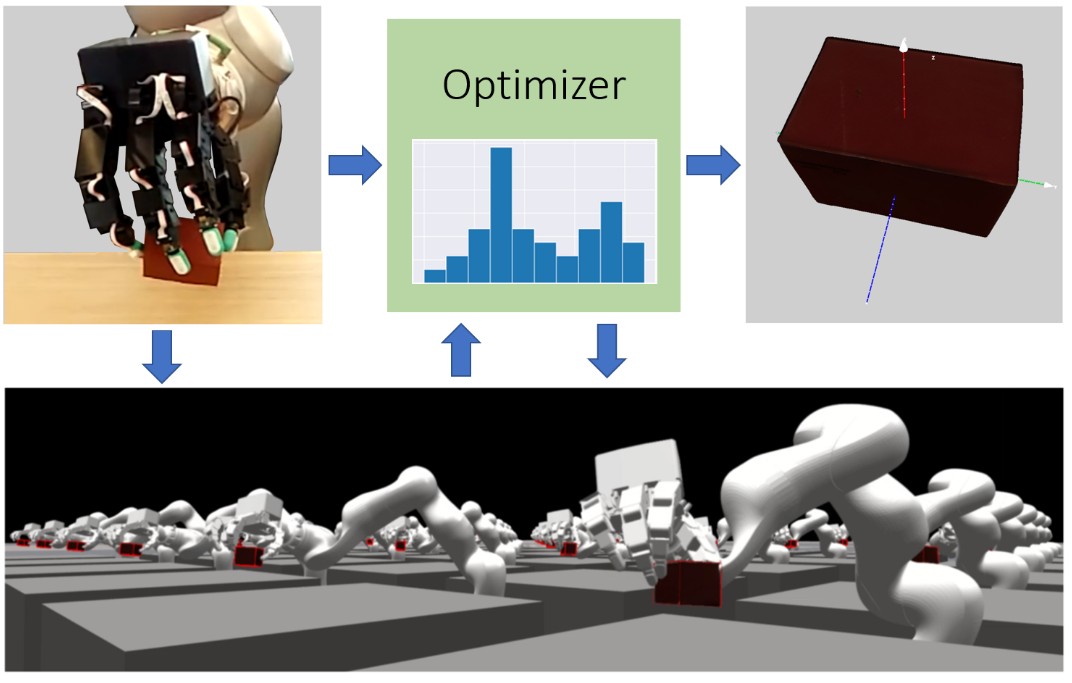

Leveraging Simulation-Based Model Preconditions for Fast Action Parameter Optimization with Multiple Models

M. Yunus Seker and Oliver Kroemer

@article{seker2024leveragingsimulationbasedmodelpreconditions,

title={Leveraging Simulation-Based Model Preconditions for Fast Action Parameter Optimization with Multiple Models},

author={M. Yunus Seker and Oliver Kroemer},

year={2024},

journal={arXiv preprint arXiv:2403.11313},

}

Optimizing robotic action parameters is a significant challenge for manipulation tasks that demand high levels of precision and generalization. Using a model-based approach, the robot must quickly reason about the outcomes of different actions using a predictive model to find a set of parameters that will have the desired effect. The model may need to capture the behaviors of rigid and deformable objects, as well as objects of various shapes and sizes. Predictive models often need to trade off speed for prediction accuracy and generalization. This paper proposes a framework that leverages the strengths of multiple predictive models, including analytical, learned, and simulation-based models, to enhance the efficiency and accuracy of action parameter optimization. Our approach uses Model Deviation Estimators (MDEs) to determine the most suitable predictive model for any given state-action parameters, allowing the robot to select models to make fast and precise predictions. We extend the MDE framework by not only learning sim-to-real MDEs, but also sim-to-sim MDEs. Our experiments show that these sim-to-sim MDEs provide significantly faster parameter optimization as well as a basis for efficiently learning sim-to-real MDEs through finetuning. The ease of collecting sim-to-sim training data also allows the robot to learn MDEs based directly on visual inputs and local material properties.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2024

|

|

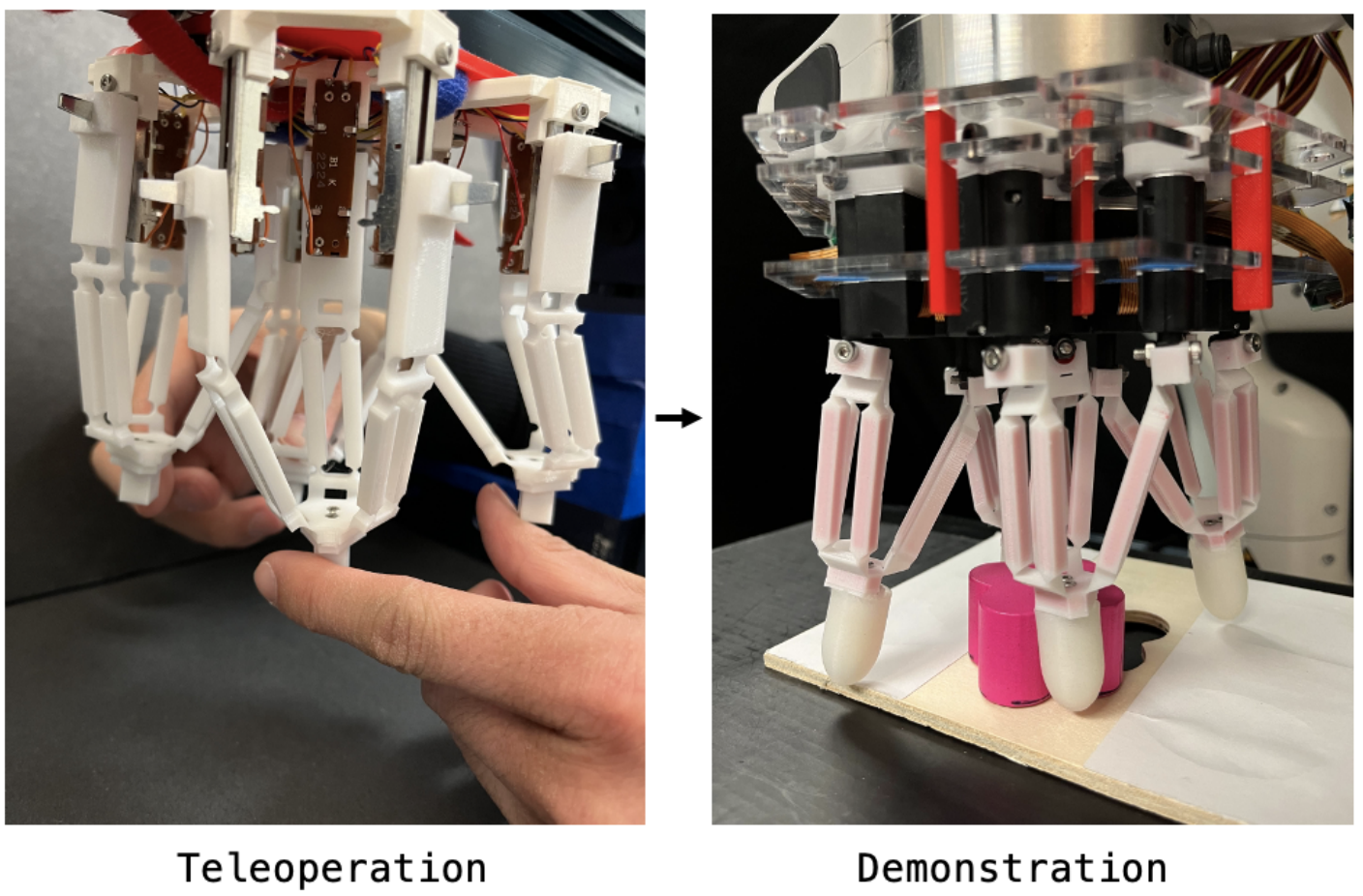

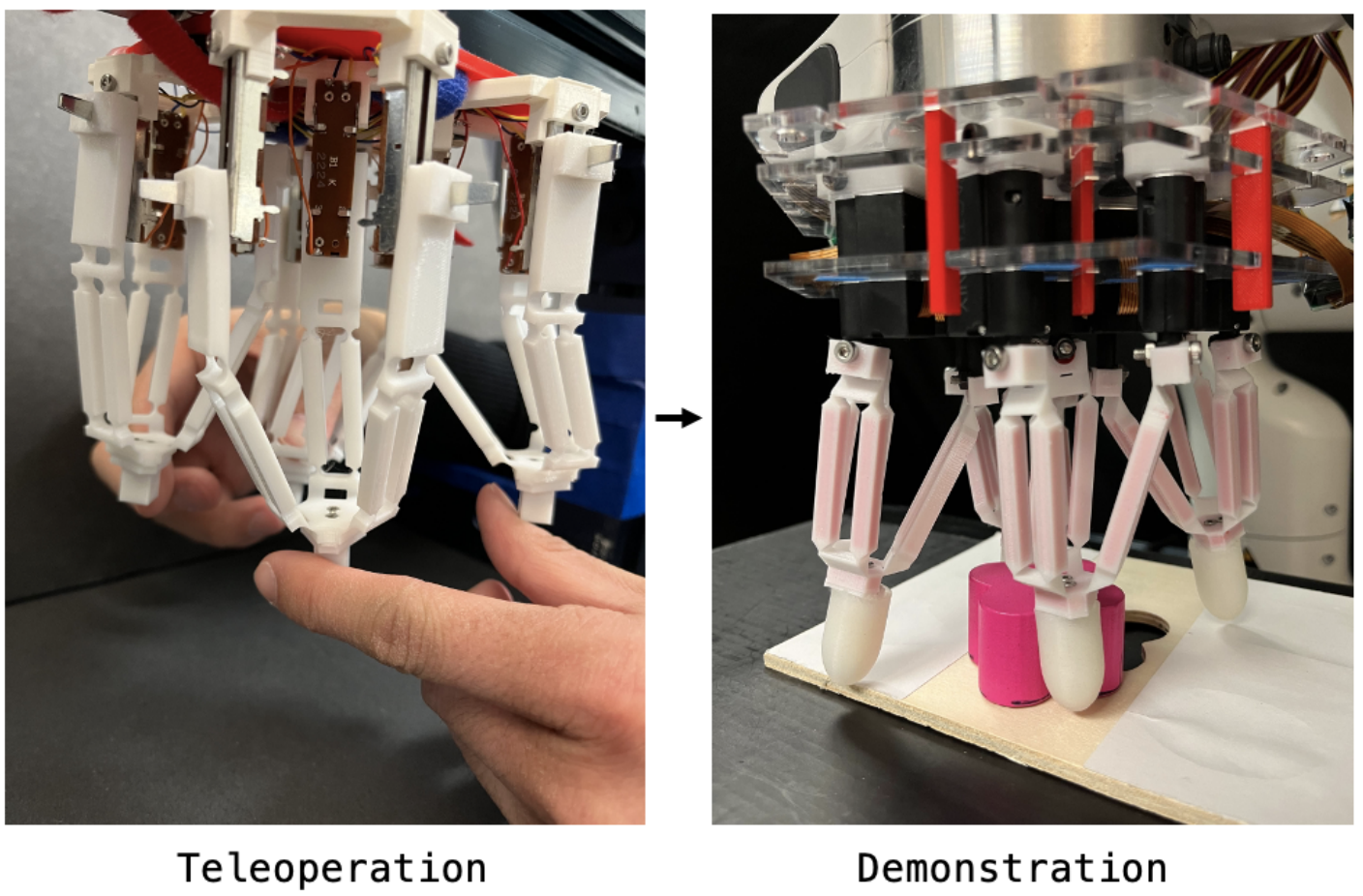

Tilde: Teleoperation for Dexterous In-Hand Manipulation Learning with a DeltaHand

Zilin Si*, Kevin Lee Zhang*, Zeynep Temel, and Oliver Kroemer

@article{si2024tilde,

title={Tilde: Teleoperation for Dexterous In-Hand Manipulation Learning with a DeltaHand},

author={Si, Zilin and Zhang, Kevin Lee and Temel, Zeynep and Kroemer, Oliver},

journal={arXiv preprint arXiv:2405.18804},

year={2024}

}

Dexterous robotic manipulation remains a challenging domain due to its strict demands for precision and robustness on both hardware and software. While dexterous robotic hands have demonstrated remarkable capabilities in complex tasks, efficiently learning adaptive control policies for hands still presents a significant hurdle given the high dimensionalities of hands and tasks. To bridge this gap, we propose Tilde, an imitation learning-based in-hand manipulation system on a dexterous DeltaHand. It leverages 1) a low-cost, configurable, simple-to-control, soft dexterous robotic hand, DeltaHand, 2) a user-friendly, precise, real-time teleoperation interface, TeleHand, and 3) an efficient and generalizable imitation learning approach with diffusion policies. Our proposed TeleHand has a kinematic twin design to the DeltaHand that enables precise one-to-one joint control of the DeltaHand during teleoperation. This facilitates efficient high-quality data collection of human demonstrations in the real world. To evaluate the effectiveness of our system, we demonstrate the fully autonomous closed-loop deployment of diffusion policies learned from demonstrations across seven dexterous manipulation tasks with an average 90% success rate.

Robotics: Science and Systems (RSS), July 2024 - Best paper award at 2nd Workshop on Dexterous Manipulation - Design, Perception and Control, RSS 2024

|

|

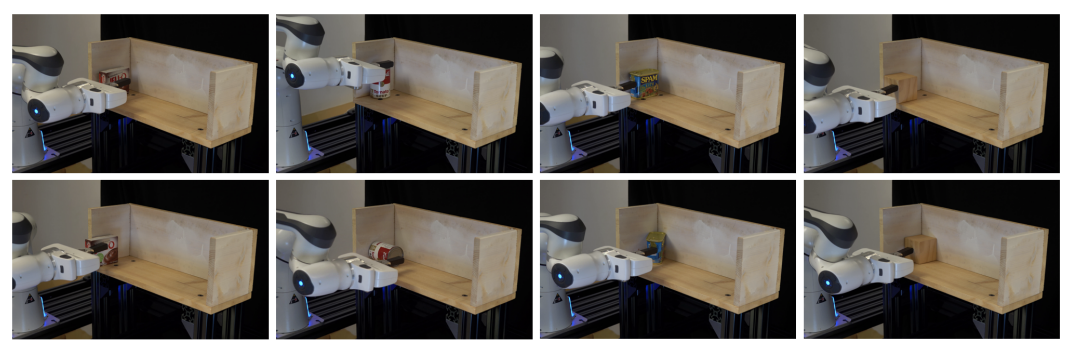

Towards Autonomous Crop Monitoring: Inserting Sensors in Cluttered Environments

Moonyoung Lee, Aaron Berger, Dominic Guri, Kevin Zhang, Lisa Coffey, George Kantor, and Oliver Kroemer

@article{lee2024towards,

title={Towards Autonomous Crop Monitoring: Inserting Sensors in Cluttered Environments},

author={Lee, Moonyoung and Berger, Aaron and Guri, Dominic and Zhang, Kevin and Coffey, Lisa and Kantor, George and Kroemer, Oliver},

journal={IEEE Robotics and Automation Letters},

year={2024},

publisher={IEEE}

}

Monitoring crop nutrients can aid farmers in optimizing fertilizer use. However, many existing robots rely on vision-based phenotyping, which can only indirectly estimate nutrient deficiencies once crops have undergone visible color changes. We present a contact-based phenotyping robot platform that can directly insert nitrate sensors into cornstalks to proactively monitor macronutrient levels in crops. This task is challenging because inserting such sensors requires sub-centimeter precision in an environment that contains high levels of clutter, lighting variation, and occlusion. To address these challenges, we develop a robust perception-action pipeline to grasp stalks, and create a custom robot gripper which mechanically aligns the sensor before inserting it into the stalk. Through experimental validation on 48 unique stalks in a cornfield in Iowa, we demonstrate our platform's capability of detecting a stalk with 94% success.

IEEE Robotics and Automation Letters (RA-L), June 2024

|

|

Towards Robotic Tree Manipulation: Leveraging Graph Representations

Chung Hee Kim, Moonyoung Lee, Oliver Kroemer, and George Kantor

@inproceedings{kim2024towards,

title={Towards robotic tree manipulation: Leveraging graph representations},

author={Kim, Chung Hee and Lee, Moonyoung and Kroemer, Oliver and Kantor, George},

booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},

pages={11884--11890},

year={2024},

organization={IEEE}

}

There is growing interest in automating agricultural tasks that require intricate and precise interaction with specialty crops, such as trees and vines. However, developing robotic solutions for crop manipulation remains a difficult challenge due to complexities involved in modeling their deformable behavior. In this study, we present a framework for learning the deformation behavior of tree-like crops under contact interaction. Our proposed method involves encoding the state of a spring-damper modeled tree crop as a graph. This representation allows us to employ graph networks to learn both a forward model for predicting resulting deformations, and a contact policy for inferring actions to manipulate tree crops. We conduct a comprehensive set of experiments in a simulated environment and demonstrate generalizability of our method on previously unseen trees.

International Conference on Robotics and Automation (ICRA), May 2024

|

|

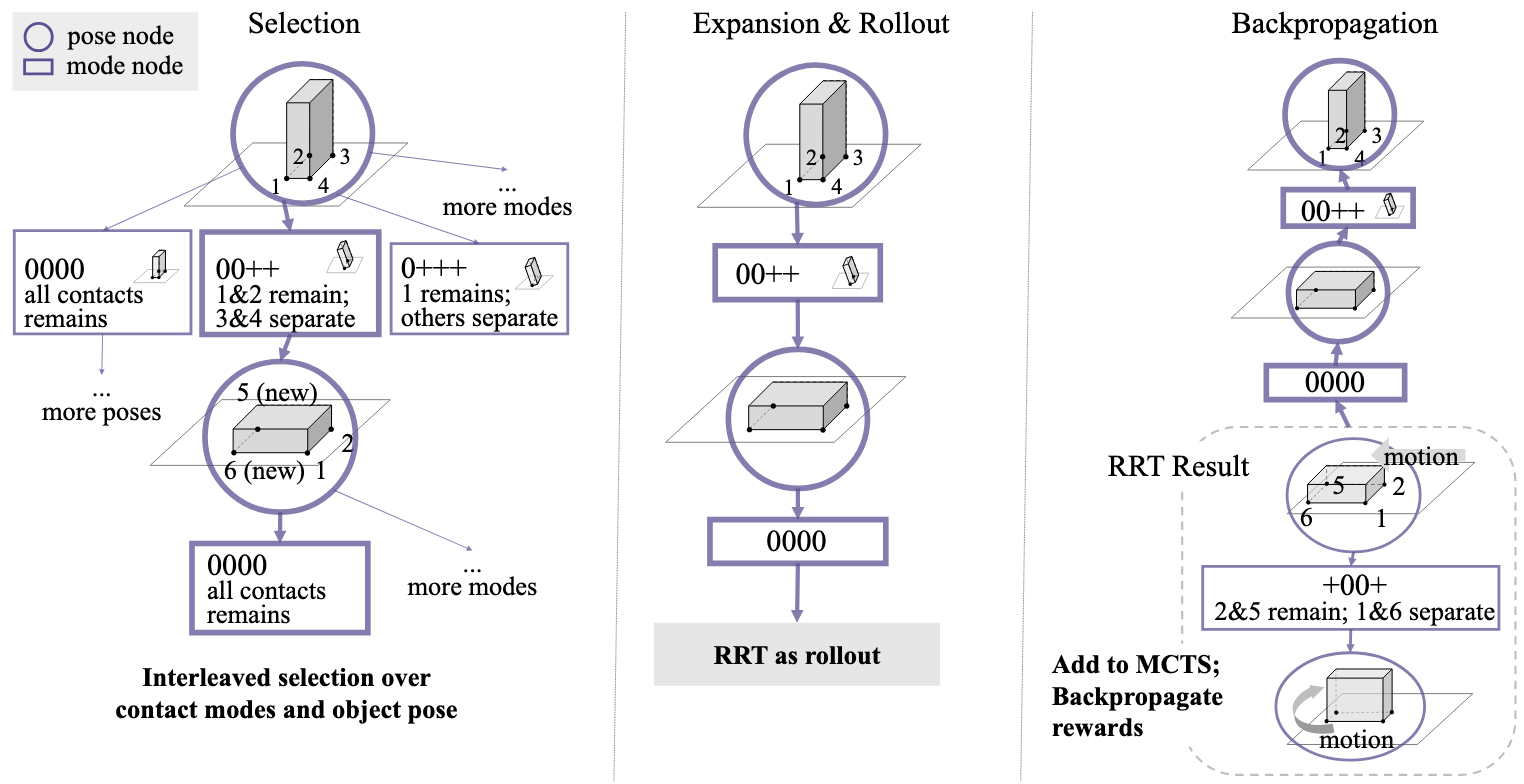

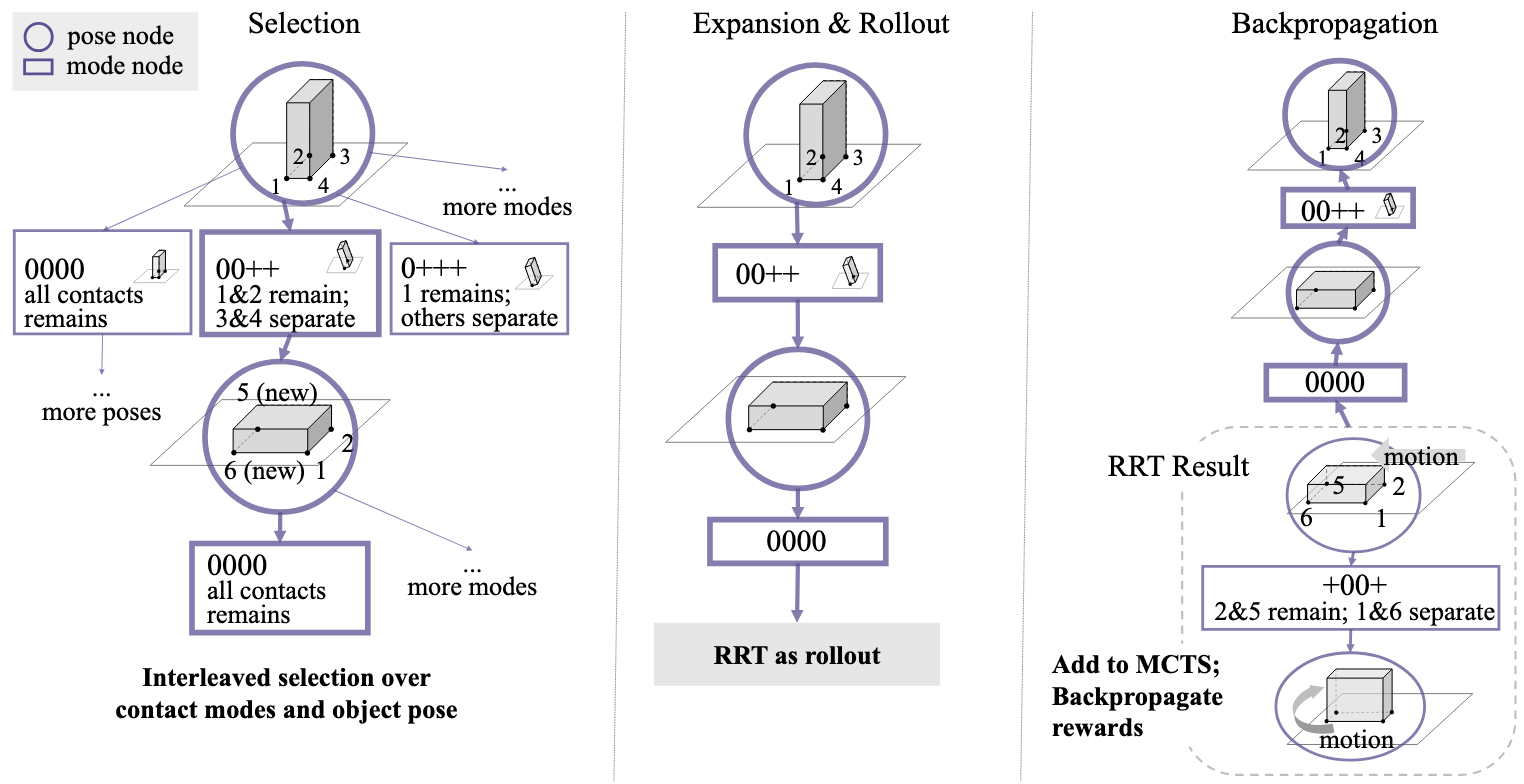

Enhancing Dexterity in Robotic Manipulation via Hierarchical Contact Exploration

Xianyi Cheng, Sarvesh Patil, F. Zeynep Temel, Oliver Kroemer, Matthew T. Mason

@ARTICLE{cheng2024hidex,

author={Cheng, Xianyi and Patil, Sarvesh and Temel, Zeynep and Kroemer, Oliver and Mason, Matthew T.},

journal={IEEE Robotics and Automation Letters},

title={Enhancing Dexterity in Robotic Manipulation via Hierarchical Contact Exploration},

year={2024},

volume={9},

number={1},

pages={390-397},

doi={10.1109/LRA.2023.3333699}}

Planning robot dexterity is challenging due to the non-smoothness introduced by contacts, intricate fine motions, and ever-changing scenarios. We present a hierarchical planning framework for dexterous robotic manipulation (HiDex). This framework explores in-hand and extrinsic dexterity by leveraging contacts. It generates rigid-body motions and complex contact sequences. Our framework is based on Monte-Carlo Tree Search and has three levels: 1) planning object motions and environment contact modes; 2) planning robot contacts; 3) path evaluation and control optimization. This framework offers two main advantages. First, it allows efficient global reasoning over high-dimensional complex space created by contacts. It solves a diverse set of manipulation tasks that require dexterity, both intrinsic (using the fingers) and extrinsic (also using the environment), mostly in seconds. Second, our framework allows the incorporation of expert knowledge and customizable setups in task mechanics and models. It requires minor modifications to accommodate different scenarios and robots. Hence, it provides a flexible and generalizable solution for various manipulation tasks. As examples, we analyze the results on 7 hand configurations and 15 scenarios. We demonstrate 8 tasks on two robot platforms.

IEEE Robotics and Automation Letters (RA-L), June 2024

|

|

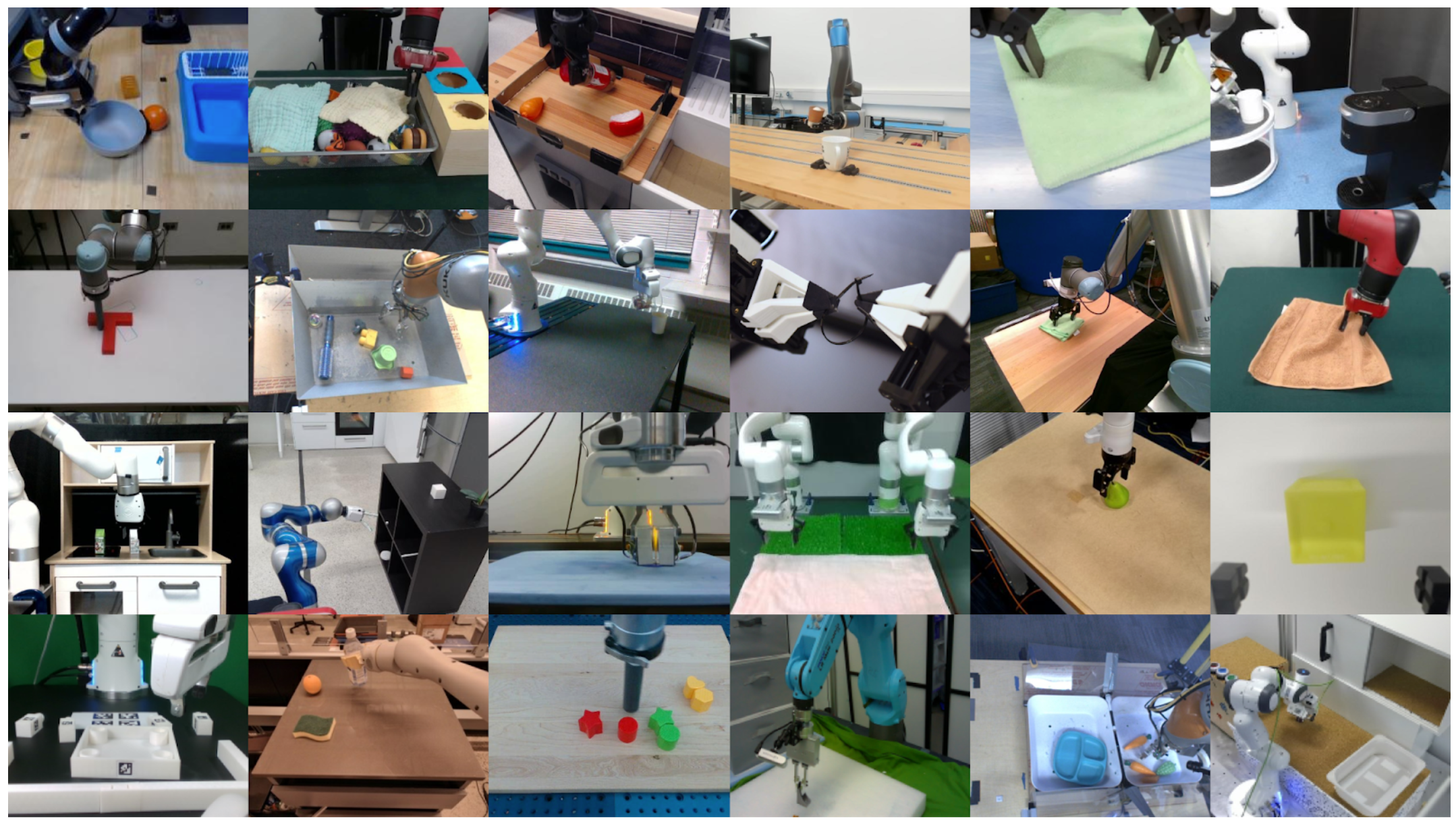

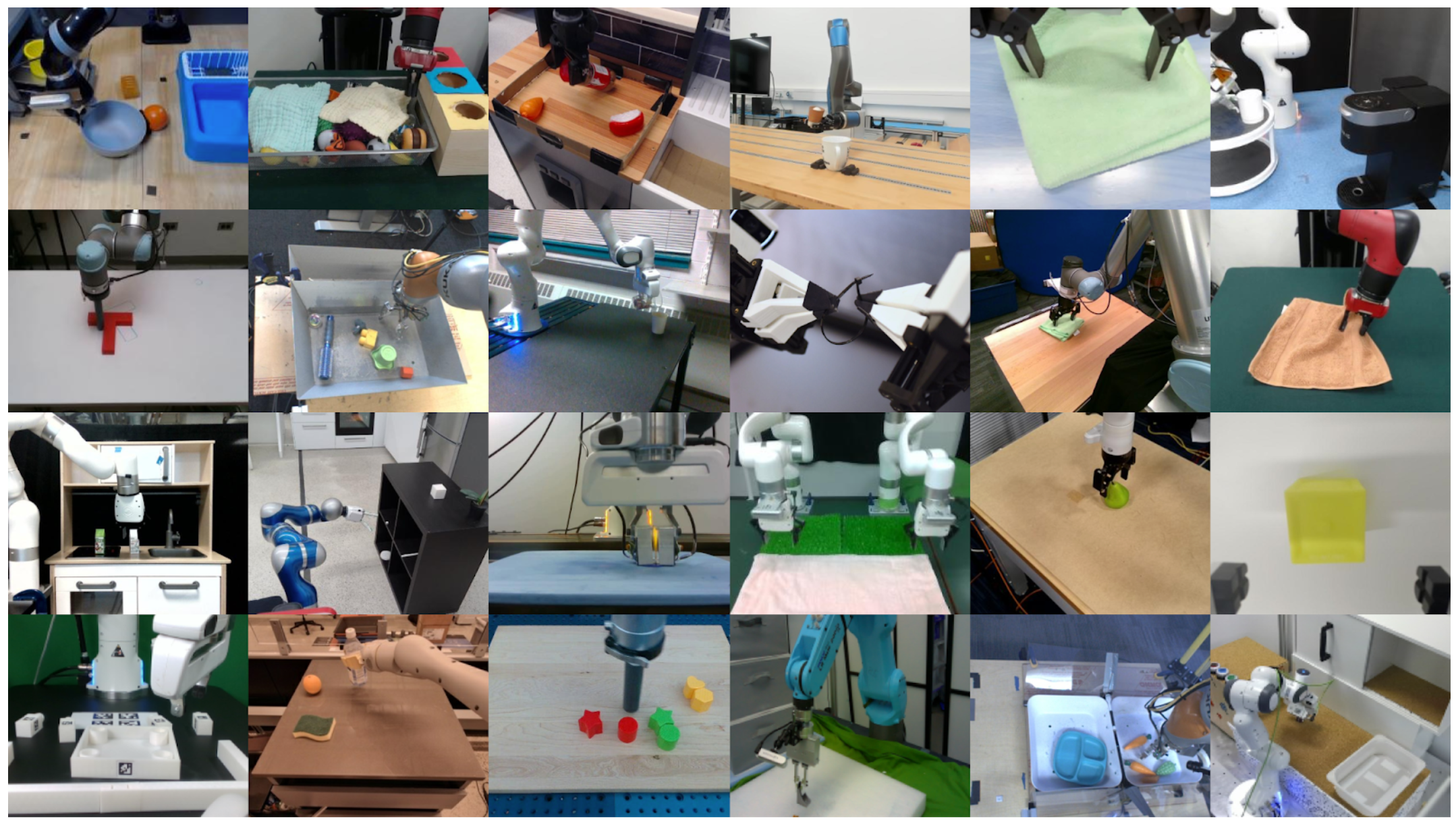

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Open X-Embodiment Collaboration, Jacky Liang, Kevin Zhang, Mohit Sharma, Oliver Kroemer, and 287 others

@article{padalkar2023open,

title={Open x-embodiment: Robotic learning datasets and rt-x models},

author={Padalkar, Abhishek and Pooley, Acorn and Jain, Ajinkya and Bewley, Alex and Herzog, Alex and Irpan, Alex and Khazatsky, Alexander and Rai, Anant and Singh, Anikait and Brohan, Anthony and others},

journal={arXiv preprint arXiv:2310.08864},

year={2024}

}

Large, high-capacity models trained on diverse datasets have shown remarkable successes on efficiently tackling downstream applications. In domains from NLP to Computer Vision, this has led to a consolidation of pretrained models, with general pretrained backbones serving as a starting point for many applications. Can such a consolidation happen in robotics? Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train a “generalist” X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160,266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms.

International Conference on Robotics and Automation (ICRA), May 2024 - Best Paper at ICRA 2024

|

|

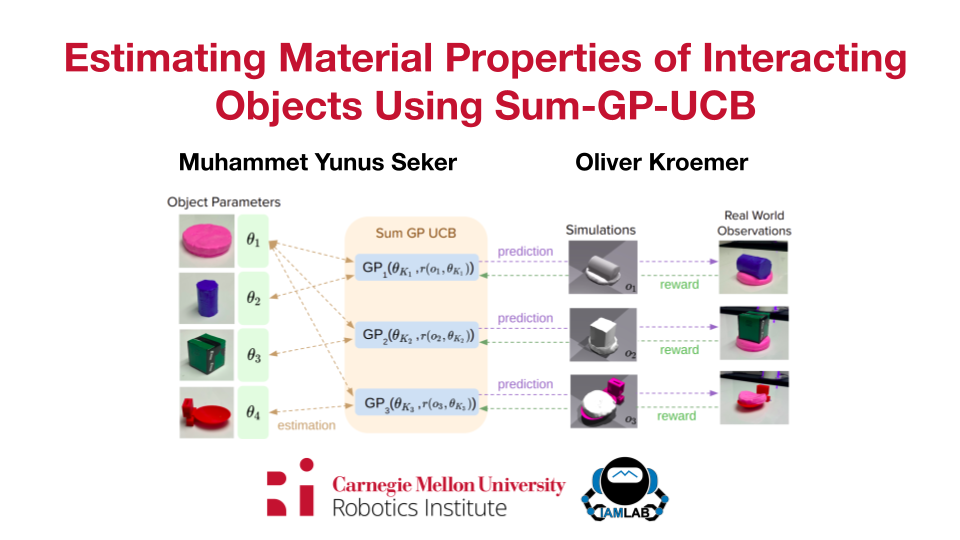

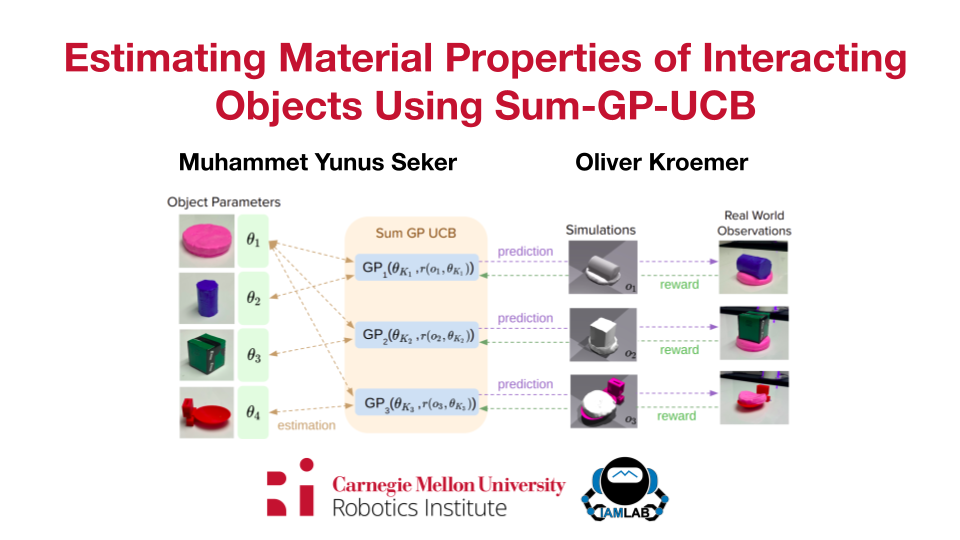

Estimating Material Properties of Interacting Objects Using Sum-GP-UCB

M. Yunus Seker and Oliver Kroemer

@INPROCEEDINGS{10610129,

author={Seker, M. Yunus and Kroemer, Oliver},

booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},

title={Estimating Material Properties of Interacting Objects Using Sum-GP-UCB},

year={2024},

pages={16684-16690},

keywords={Incremental learning;Bayes methods;Object recognition;Optimization;Robots;Material properties},

doi={10.1109/ICRA57147.2024.10610129}

}

Robots need to estimate the material and dynamic properties of objects from observations in order to simulate them accurately. We present a Bayesian optimization approach to identifying the material property parameters of objects based on a set of observations. Our focus is on estimating these properties based on observations of scenes with different sets of interacting objects. We propose an approach that exploits the structure of the reward function by modeling the reward for each observation separately and using only the parameters of the objects in that scene as inputs. The resulting lower-dimensional models generalize better over the parameter space, which in turn results in a faster optimization. To speed up the optimization process further, and reduce the number of simulation runs needed to find good parameter values, we also propose partial evaluations of the reward function, wherein the selected parameters are only evaluated on a subset of real world evaluations. The approach was successfully evaluated on a set of scenes with a wide range of object interactions, and we showed that our method can effectively perform incremental learning without resetting the rewards of the gathered observations.

International Conference on Robotics and Automation (ICRA), May 2024

|

|

DELTAHANDS: A Synergistic Dexterous Hand Framework Based on Delta Robots

Zilin Si, Kevin Lee Zhang, Oliver Kroemer, and Zeynep Temel

@article{si2024deltahands,

title={DELTAHANDS: A Synergistic Dexterous Hand Framework Based on Delta Robots},

author={Si, Zilin and Zhang, Kevin and Kroemer, Oliver and Temel, F Zeynep},

journal={IEEE Robotics and Automation Letters},

year={2024},

publisher={IEEE}

}

Dexterous robotic manipulation in unstructured environments can aid in everyday tasks such as cleaning and caretaking. Anthropomorphic robotic hands are highly dexterous and theoretically well-suited for working in human domains, but their complex designs and dynamics often make them difficult to control. By contrast, parallel-jaw grippers are easy to control and are used extensively in industrial applications, but they lack the dexterity for various kinds of grasps and in-hand manipulations. In this work, we present DELTAHANDS, a synergistic dexterous hand framework with Delta robots. The DELTAHANDS are soft, easy to reconfigure, simple to manufacture with low-cost off-the-shelf materials, and possess high degrees of freedom that can be easily controlled. DELTAHANDS' dexterity can be adjusted for different applications by leveraging actuation synergies, which can further reduce the control complexity, overall cost, and energy consumption. We characterize the Delta robots' kinematics accuracy, force profiles, and workspace range to assist with hand design. Finally, we evaluate the versatility of DELTAHANDS by grasping a diverse set of objects and by using teleoperation to complete three dexterous manipulation tasks: cloth folding, cap opening, and cable arrangement.

IEEE Robotics and Automation Letters (RA-L), Feb 2024

|

|

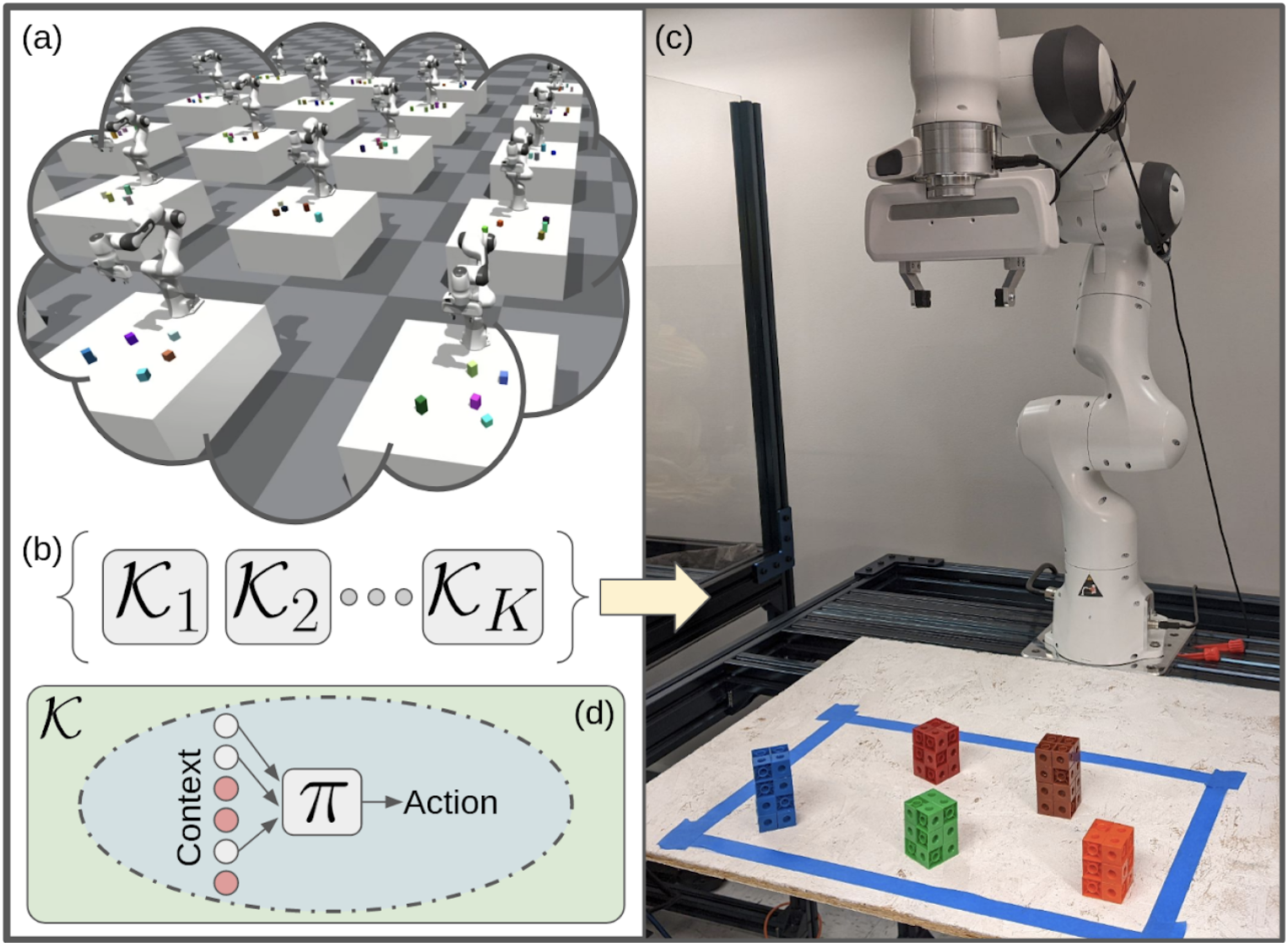

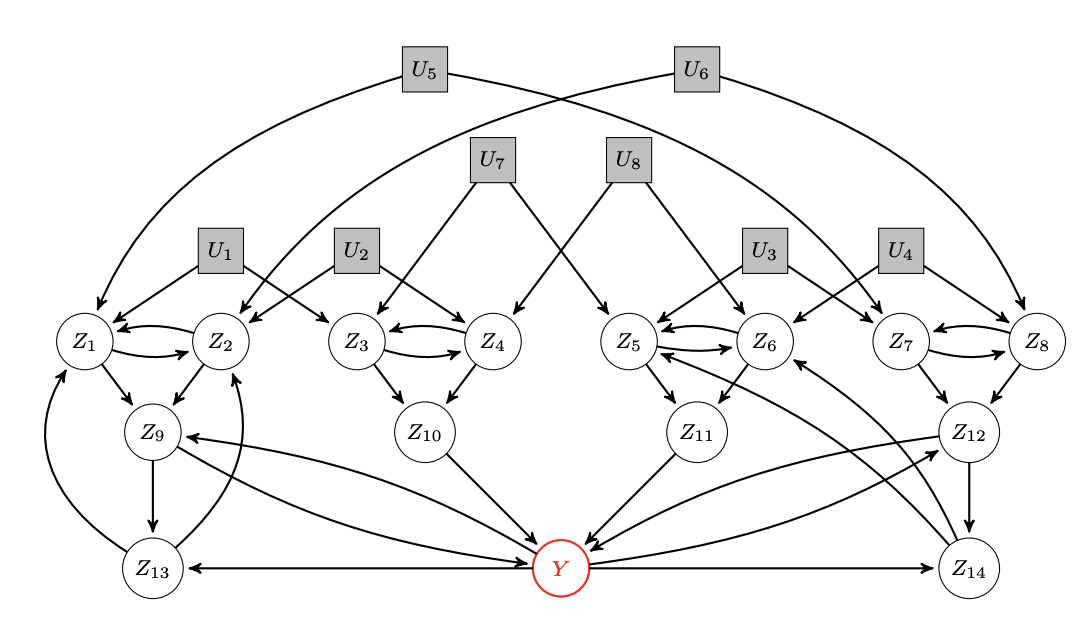

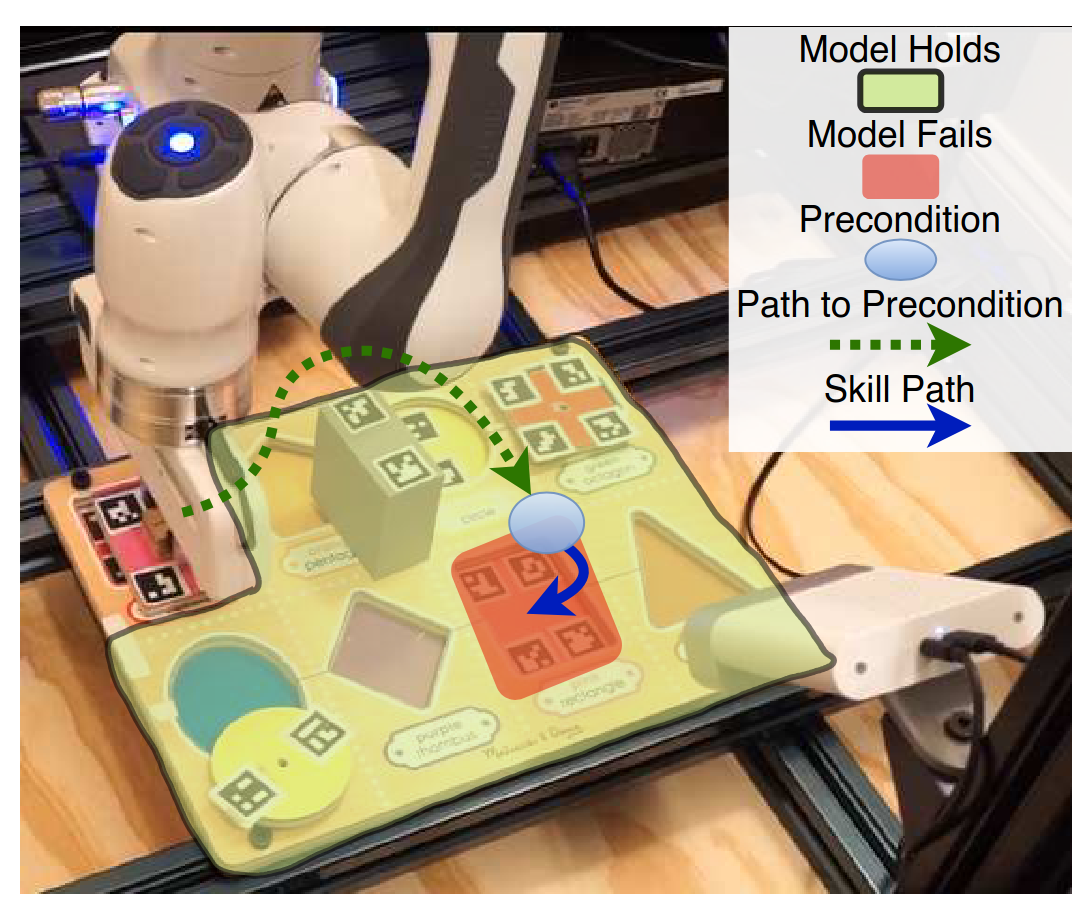

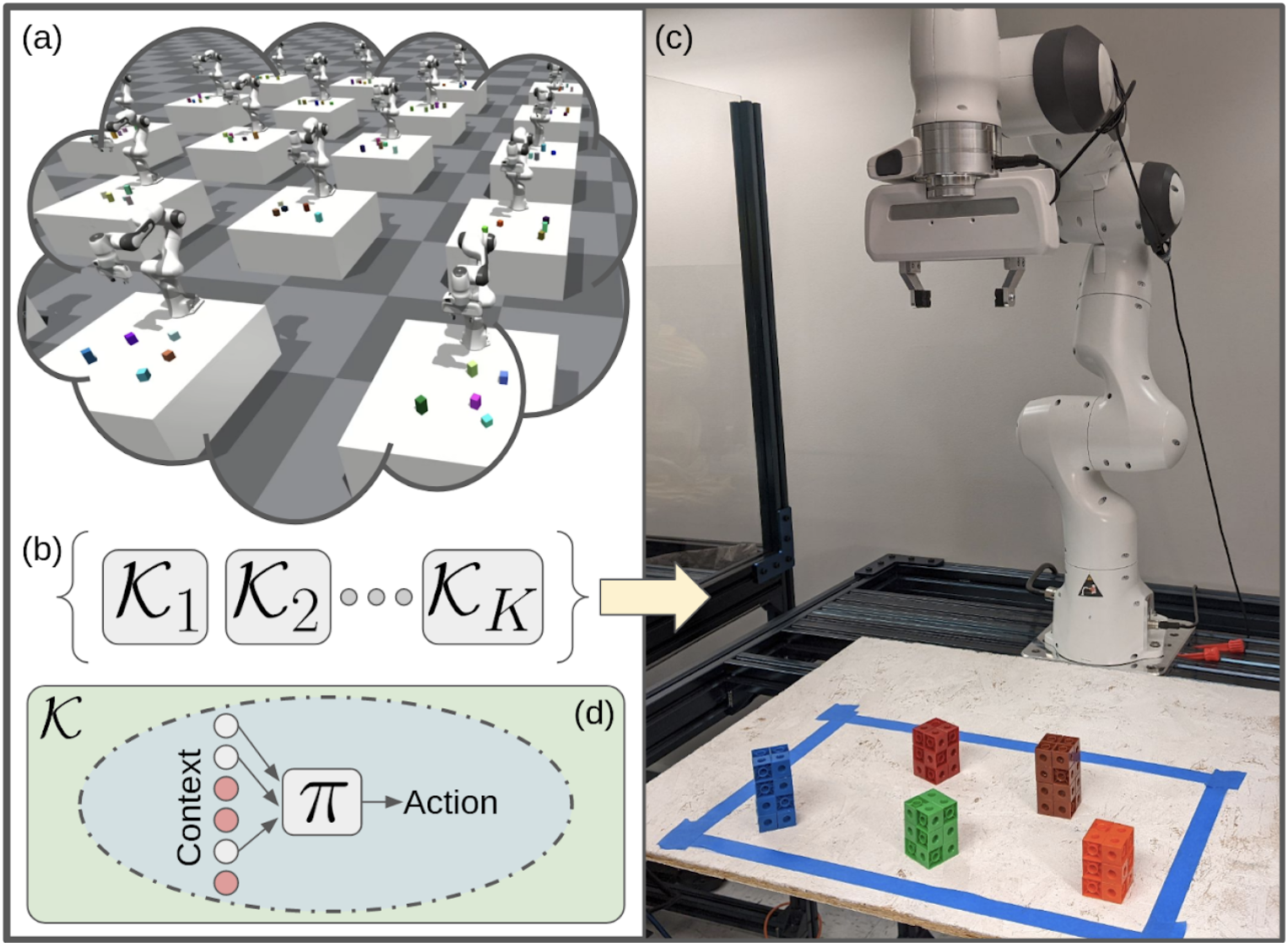

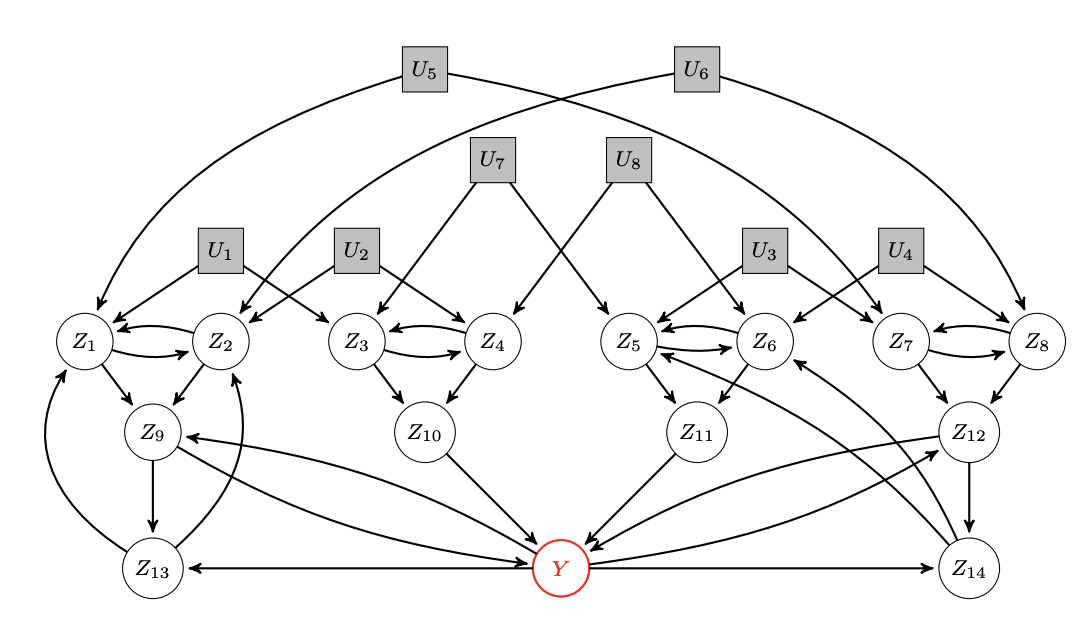

SCALE: Causal Learning and Discovery of Robot Manipulation Skills using Simulation

Tabitha Edith Lee, Shivam Vats, Siddharth Girdhar, and Oliver Kroemer

@article{Lee-Vats-2023-138873,

title={SCALE: Causal Learning and Discovery of Robot Manipulation Skills using Simulation},

author={Tabitha Edith Lee and Shivam Vats and Siddharth Girdhar and Oliver Kroemer},

journal={Proceedings of (CoRL) Conference on Robot Learning},

year={2023}

}

We propose SCALE, an approach for discovering and learning a diverse set of interpretable robot skills from a limited dataset. Rather than learning a single skill which may fail to capture all the modes in the data, we first identify the different modes via causal reasoning and learn a separate skill for each of them. Our main insight is to associate each mode with a unique set of causally relevant context variables that are discovered by performing causal interventions in simulation. This enables data partitioning based on the causal processes that generated the data, and then compressed skills that ignore the irrelevant variables can be trained. We model each robot skill as a Regional Compressed Option, which extends the options framework by associating a causal process and its relevant variables with the option. Modeled as the skill Data Generating Region, each causal process is local in nature and hence valid over only a subset of the context space. We demonstrate our approach for two representative manipulation tasks: block stacking and peg-in-hole insertion under uncertainty. Our experiments show that our approach yields diverse skills that are compact, robust to domain shifts, and suitable for sim-to-real transfer.

Conference on Robot Learning (CoRL), Nov 2023

|

|

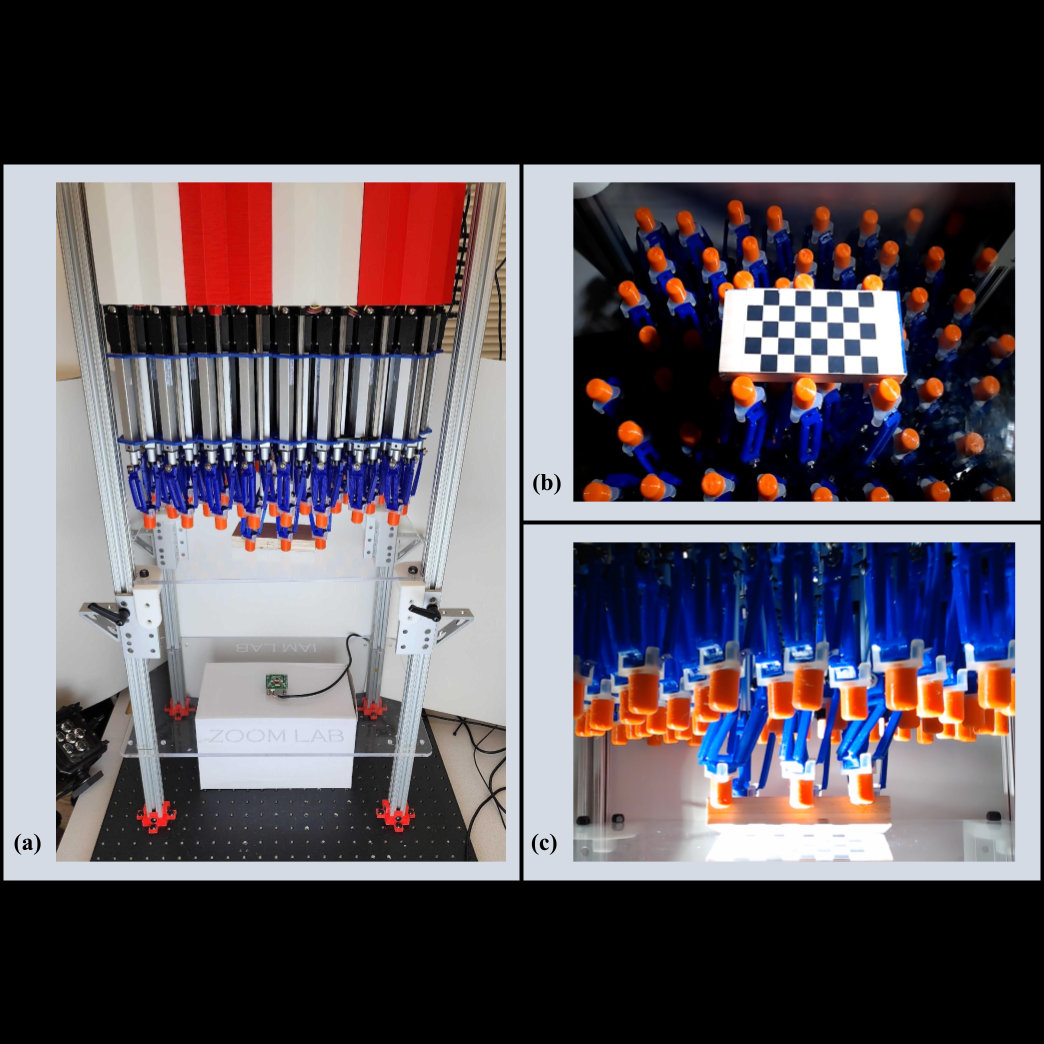

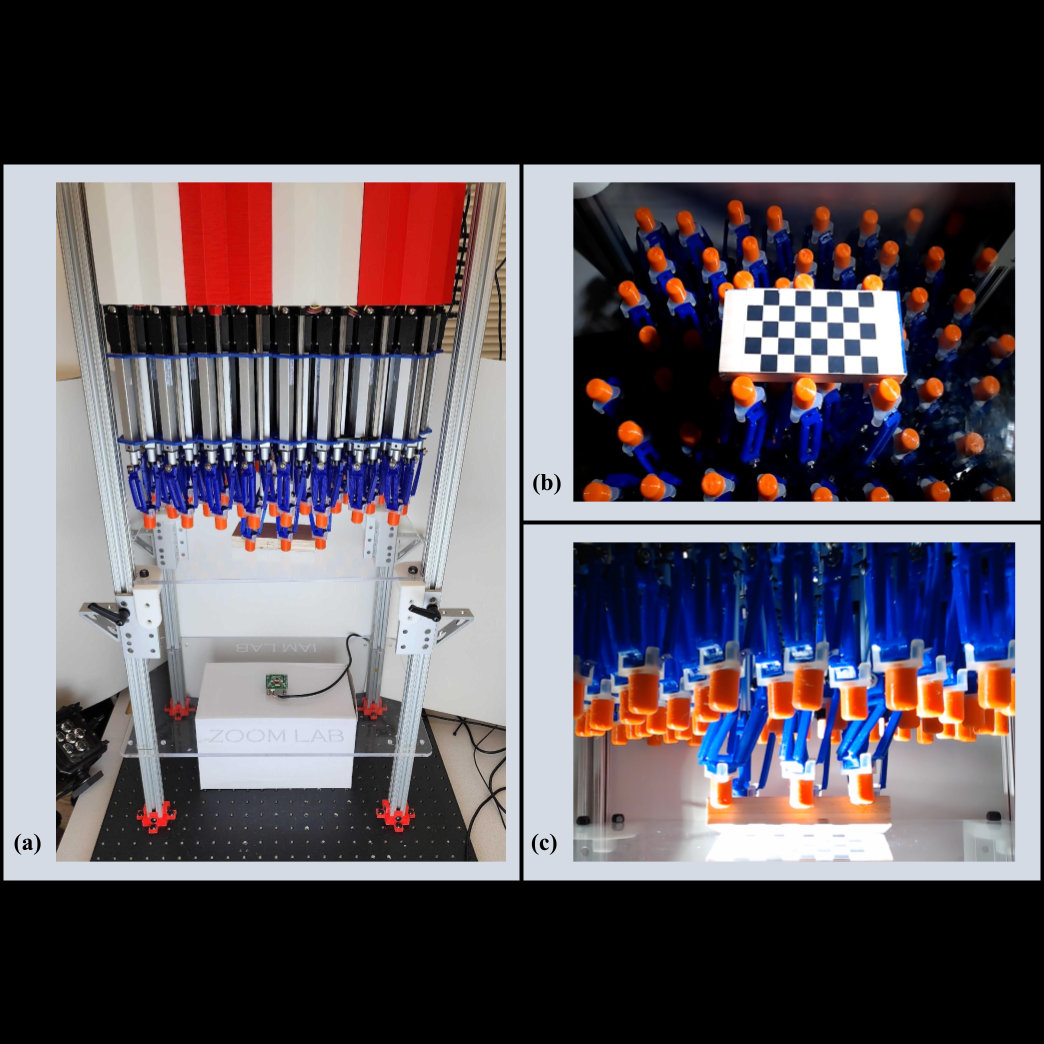

Linear Delta Arrays for Compliant Dexterous Distributed Manipulation

Sarvesh Patil, Tony Tao, Tess Hellebrekers, Oliver Kroemer, F. Zeynep Temel

@inproceedings{Patil_2023,

title={Linear Delta Arrays for Compliant Dexterous Distributed Manipulation},

url={http://dx.doi.org/10.1109/ICRA48891.2023.10160578},

DOI={10.1109/icra48891.2023.10160578},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

publisher={IEEE},

author={Patil, Sarvesh and Tao, Tony and Hellebrekers, Tess and Kroemer, Oliver and Temel, F. Zeynep},

year={2023},

month=may}

This paper presents a new type of distributed dexterous manipulator: delta arrays. Our delta array setup consists of 64 linearly-actuated delta robots with 3D-printed compliant linkages. Through the design of the individual delta robots, the modular array structure, and distributed communication and control, we study a wide range of in-plane and out-of-plane manipulations, as well as prehensile manipulations among subsets of neighboring delta robots. We also demonstrate dexterous manipulation capabilities of the delta array using reinforcement learning while leveraging the compliance to not break the end-effectors. Our evaluations show that the resulting 192 DoF compliant robot is capable of performing various coordinated distributed manipulations of a variety of objects, including translation, alignment, prehensile squeezing, lifting, and grasping.

International Conference on Robotics and Automation (ICRA), May 2023

|

|

Efficient Recovery Learning using Model Predictive Meta-Reasoning

Shivam Vats, Maxim Likhachev, and Oliver Kroemer

@article{Vats-2023-135449,

author = {Shivam Vats and Maxim Likhachev and Oliver Kroemer},

title = {Efficient Recovery Learning using Model Predictive Meta-Reasoning},

journal = {Proceedings of (ICRA) International Conference on Robotics and Automation},

year = {2023},

month = {May},

}

Operating under real world conditions is challenging due to the possibility of a wide range of failures induced by execution errors and state uncertainty. In relatively benign settings, such failures can be overcome by retrying or executing one of a small number of hand-engineered recovery strategies. By contrast, contact-rich sequential manipulation tasks, like opening doors and assembling furniture, are not amenable to exhaustive hand-engineering. To address this issue, we present a general approach for robustifying manipulation strategies in a sample-efficient manner. Our approach incrementally improves robustness by first discovering the failure modes of the current strategy via exploration in simulation and then learning additional recovery skills to handle these failures. To ensure efficient learning, we propose an online algorithm called Meta-Reasoning for Skill Learning (MetaReSkill) that monitors the progress of all recovery policies during training and allocates training resources to recoveries that are likely to improve the task performance the most. We use our approach to learn recovery skills for door-opening and evaluate them both in simulation and on a real robot with little fine-tuning. Compared to open-loop execution, our experiments show that even a limited amount of recovery learning improves task success substantially from 71% to 92.4% in simulation and from 75% to 90% on a real robot.

International Conference on Robotics and Automation (ICRA), May 2023

|

|

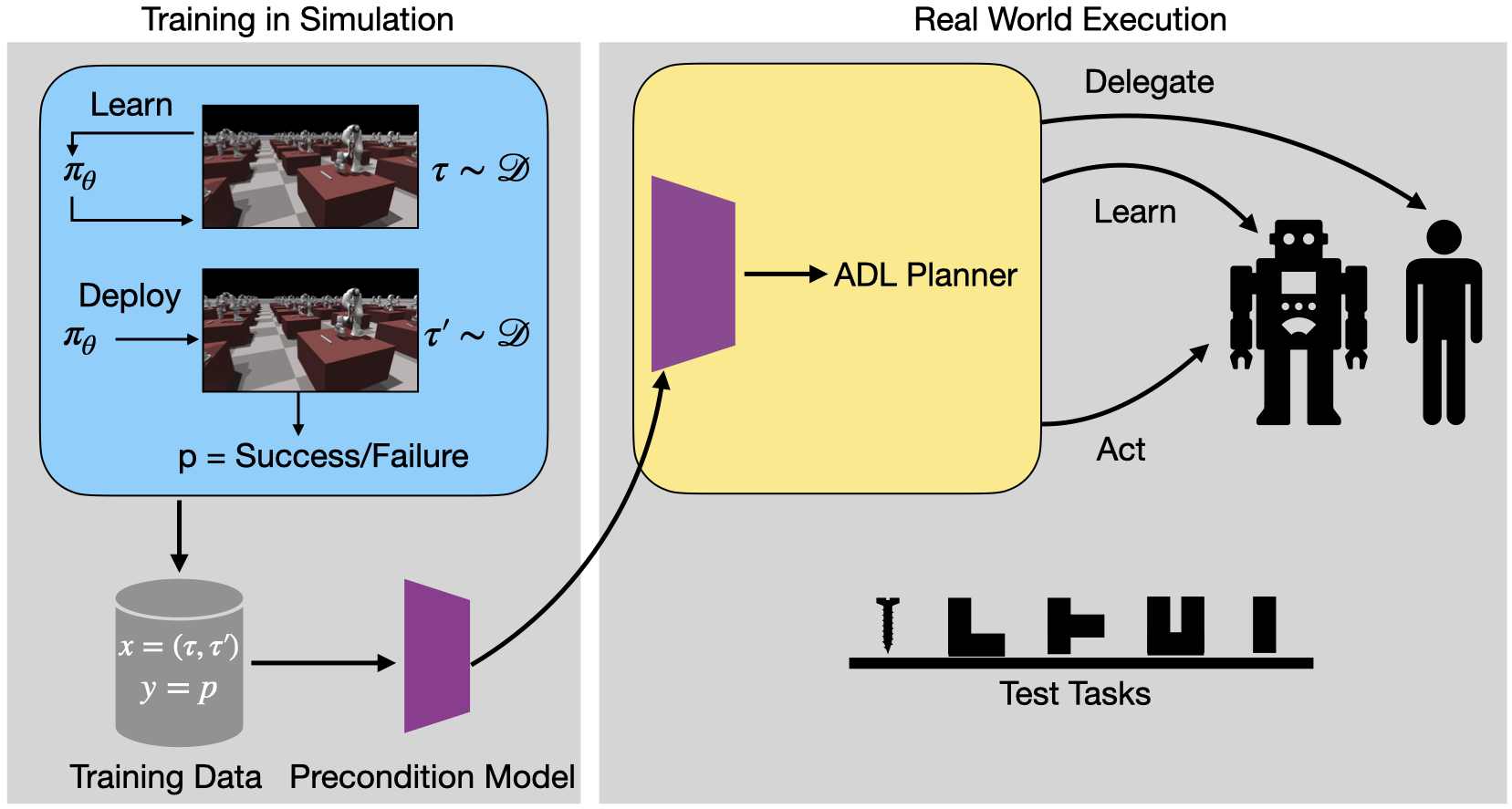

Synergistic Scheduling of Learning and Allocation of Tasks in Human-Robot Teams

Shivam Vats, Oliver Kroemer and Maxim Likhachev

@inproceedings{vats2022synergistic,

title={Synergistic Scheduling of Learning and Allocation of Tasks in Human-robot Teams},

author={Vats, Shivam and Kroemer, Oliver and Likhachev, Maxim},

booktitle={2022 International Conference on Robotics and Automation (ICRA)},

pages={2789--2795},

year={2022},

organization={IEEE}

}

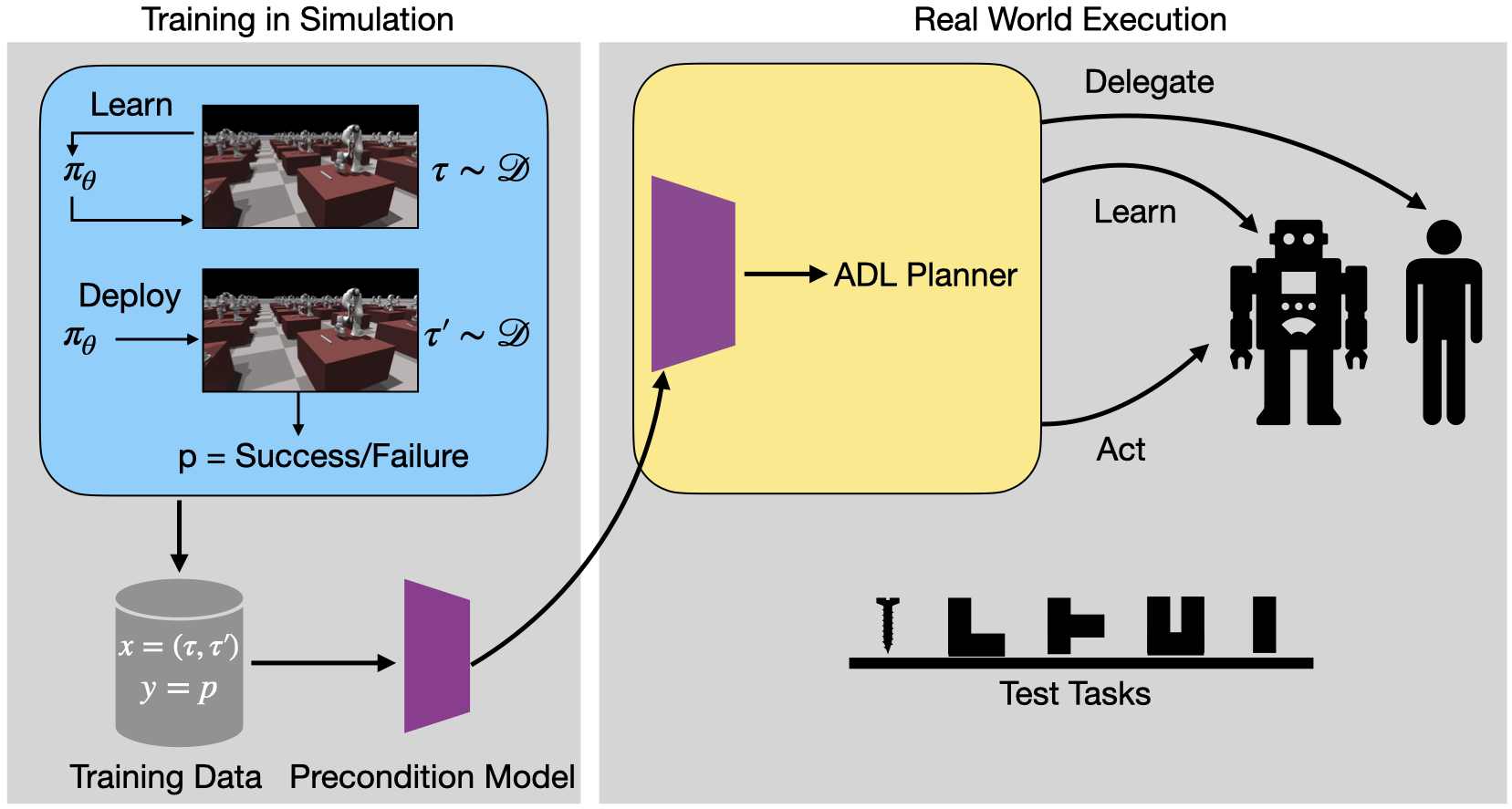

We consider the problem of completing a set of n tasks with a human-robot team using minimum effort. In many domains, teaching a robot to be fully autonomous can be counterproductive if there are finitely many tasks to be done. Rather, the optimal strategy is to weigh the cost of teaching a robot and its benefit -- how many new tasks it allows the robot to solve autonomously. We formulate this as a planning problem where the goal is to decide what tasks the robot should do autonomously (act), what tasks should be delegated to a human (delegate) and what tasks the robot should be taught (learn) so as to complete all the given tasks with minimum effort. This planning problem results in a search tree that grows exponentially with n -- making standard graph search algorithms intractable. We address this by converting the problem into a mixed integer program that can be solved efficiently using off-the-shelf solvers with bounds on solution quality. To predict the benefit of learning, we propose a precondition prediction classifier. Given two tasks, this classifier predicts whether a skill trained on one will transfer to the other. Finally, we evaluate our approach on peg insertion and Lego stacking tasks, both in simulation and real-world, showing substantial savings in human effort.
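The act/delegate/learn trade-off described above can be illustrated on a toy instance. The sketch below is not the mixed integer program from the paper: it simply enumerates every assignment, which is only feasible for a handful of tasks. The cost values, the `transfers` table (a stand-in for the precondition prediction classifier), and the assumption that teaching a task also completes it are all hypothetical.

```python
from itertools import product

CHOICES = ("act", "delegate", "learn")


def plan(n_tasks, transfers, cost_delegate=1.0, cost_learn=3.0, cost_act=0.1):
    """Exhaustively search act/delegate/learn assignments for a tiny instance.

    transfers[i] is the set of tasks that a skill taught on task i also solves
    (hypothetical stand-in for a learned transfer predictor).
    """
    unit_cost = {"act": cost_act, "delegate": cost_delegate, "learn": cost_learn}
    best_cost, best_plan = float("inf"), None
    for assignment in product(CHOICES, repeat=n_tasks):
        # Tasks covered by the skills the robot is taught under this assignment.
        covered = set()
        for i, choice in enumerate(assignment):
            if choice == "learn":
                covered |= transfers[i]
        # "act" is only feasible on tasks that some taught skill transfers to.
        if any(c == "act" and i not in covered for i, c in enumerate(assignment)):
            continue
        cost = sum(unit_cost[c] for c in assignment)
        if cost < best_cost:
            best_cost, best_plan = cost, assignment
    return best_cost, best_plan


# Five tasks: a skill taught on task 0 transfers to tasks 1-3, nothing else transfers.
many = plan(5, {0: {0, 1, 2, 3}, 1: {1}, 2: {2}, 3: {3}, 4: {4}})
# Two tasks: teaching costs more than it saves, so delegating everything wins.
few = plan(2, {0: {0, 1}, 1: {1}})
```

Enumeration grows as 3^n, which is the exponential blow-up the abstract mentions as the motivation for the mixed integer formulation.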

International Conference on Robotics and Automation (ICRA), May 2022 - Outstanding Interaction Paper Finalist at ICRA 2022

|

|

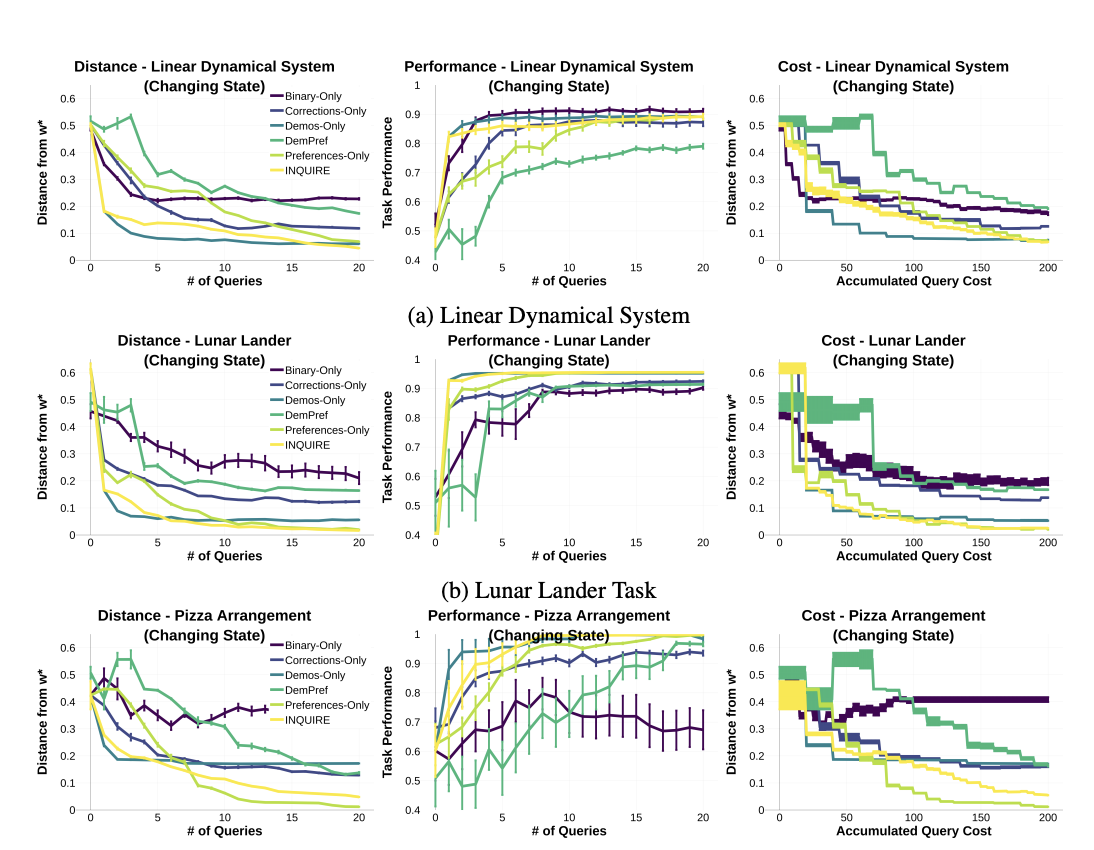

INQUIRE: INteractive Querying for User-aware Informative REasoning

Tesca Fitzgerald, Pallavi Koppol, Patrick Callaghan, Russell Q. Wong, Reid Simmons, Oliver Kroemer, and Henny Admoni

@article{fitzgerald2022inquire,

title={INQUIRE: INteractive Querying for User-aware Informative REasoning},

author={Tesca Fitzgerald and Pallavi Koppol and Patrick Callaghan and Russell Q. Wong and Reid Simmons and Oliver Kroemer and Henny Admoni},

journal={Proceedings of (CoRL) Conference on Robot Learning},

year={2022}

}

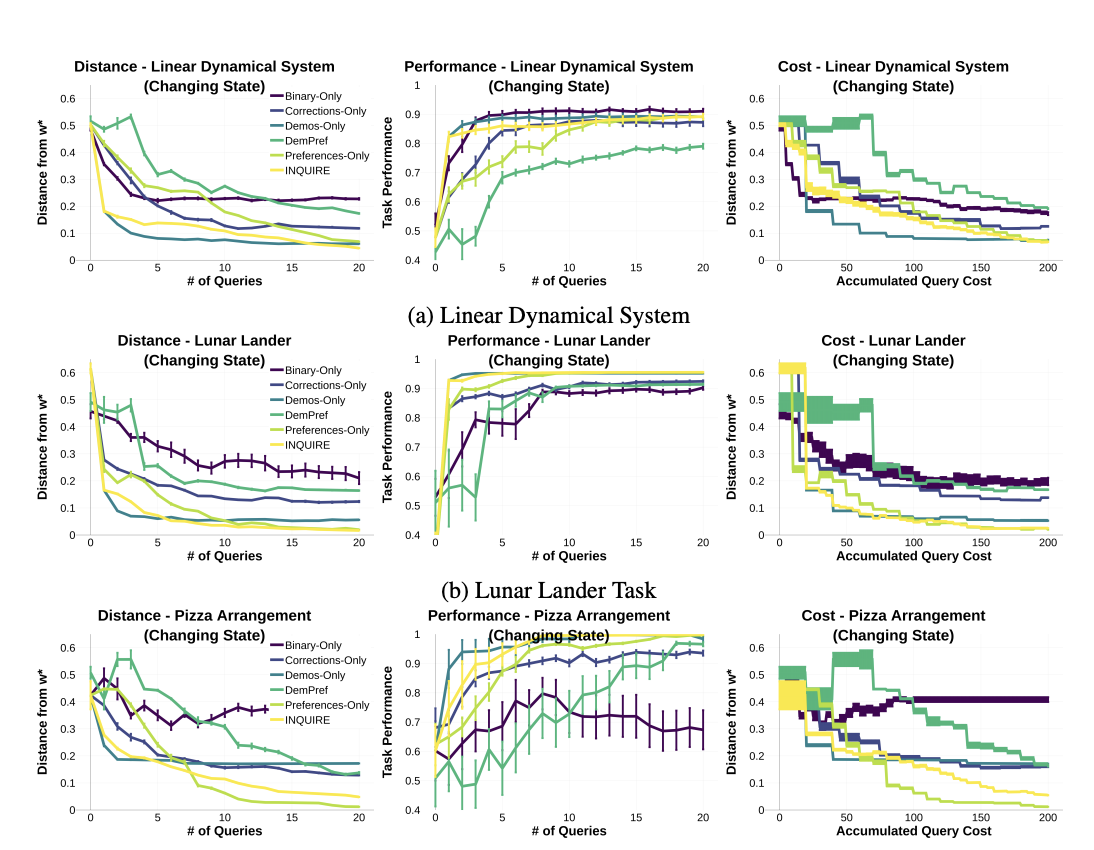

Research on Interactive Robot Learning has yielded several modalities for querying a human for training data, including demonstrations, preferences, and corrections. While prior work in this space has focused on optimizing the robot’s queries within each interaction type, there has been little work on optimizing over the selection of the interaction type itself. We present INQUIRE, the first algorithm to implement and optimize over a generalized representation of information gain across multiple interaction types. Our evaluations show that INQUIRE can dynamically optimize its interaction type (and respective optimal query) based on its current learning status and the robot’s state in the world, resulting in more robust performance across tasks in comparison to state-of-the-art baseline methods. Additionally, INQUIRE allows for customizable cost metrics to bias its selection of interaction types, enabling this algorithm to be tailored to a robot’s particular deployment domain and formulate cost-aware, informative queries.

Conference on Robot Learning (CoRL), Dec 2022

|

|

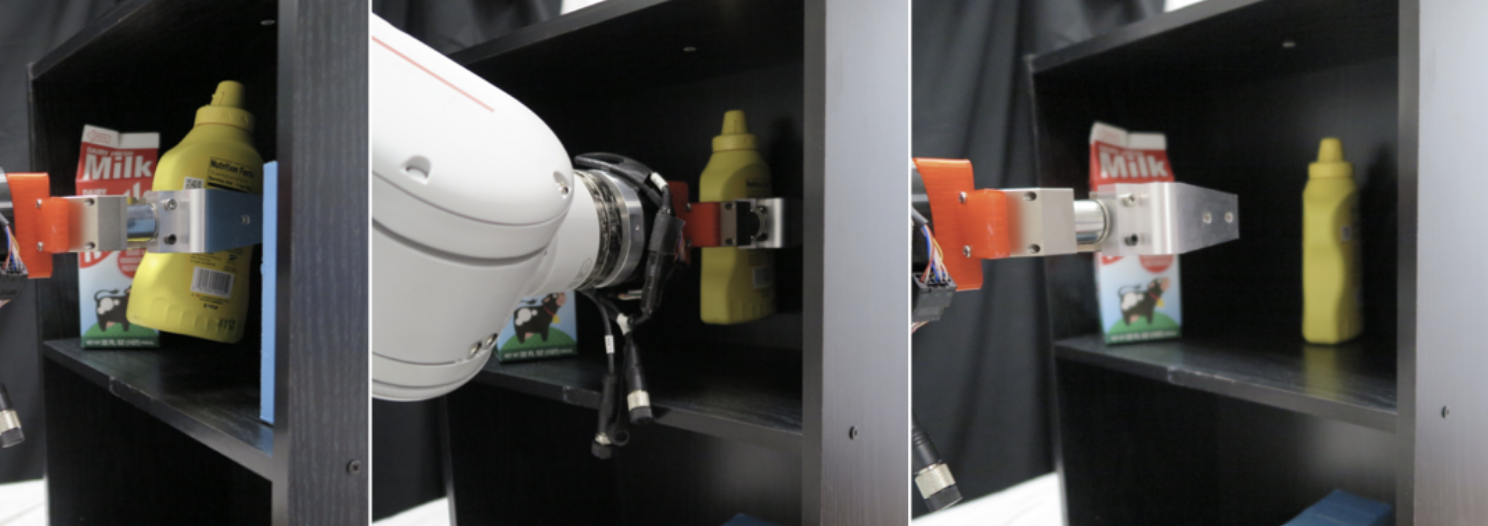

Learning Preconditions of Hybrid Force-Velocity Controllers for Contact-Rich Manipulation

Jacky Liang, Xianyi Cheng, and Oliver Kroemer

@article{Liang-2022-134572,

title={Learning Preconditions of Hybrid Force-Velocity Controllers for Contact-Rich Manipulation},

author={Jacky Liang and Xianyi Cheng and Oliver Kroemer},

journal={Proceedings of (CoRL) Conference on Robot Learning},

year={2022}

}

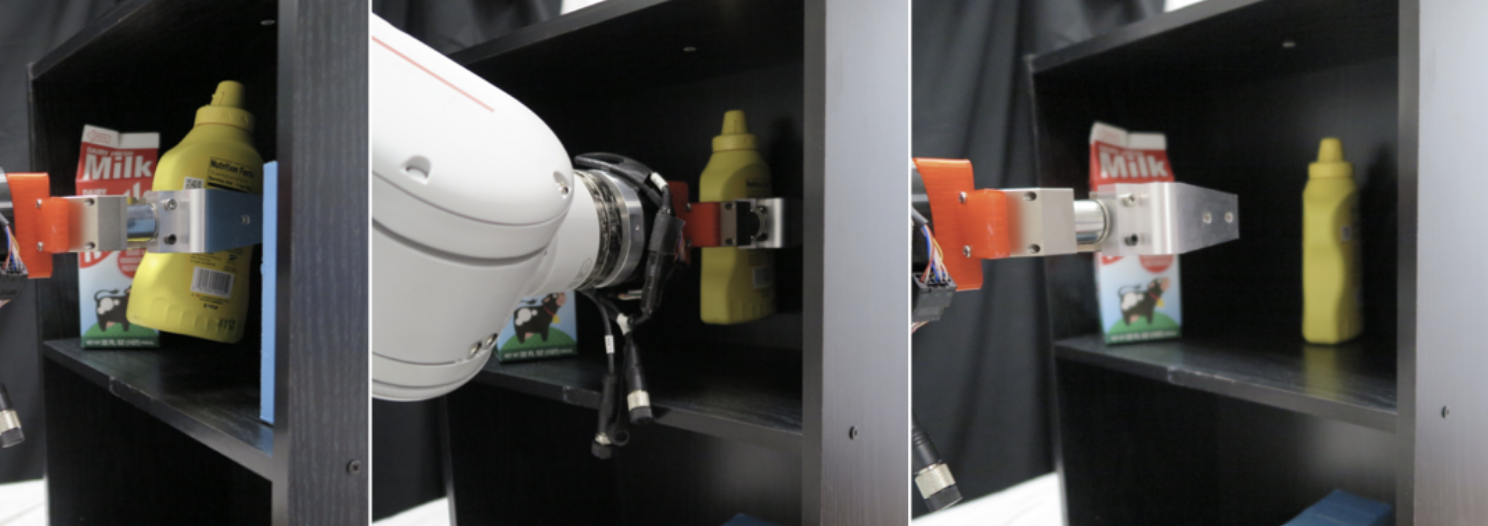

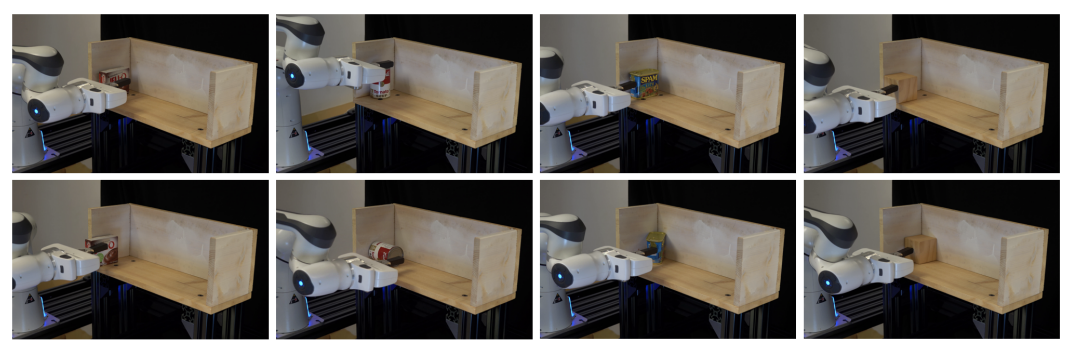

Robots need to manipulate objects in constrained environments like shelves and cabinets when assisting humans in everyday settings like homes and offices. These constraints make manipulation difficult by reducing grasp accessibility, so robots need to use non-prehensile strategies that leverage object-environment contacts to perform manipulation tasks. To tackle the challenge of planning and controlling contact-rich behaviors in such settings, this work uses Hybrid Force-Velocity Controllers (HFVCs) as the skill representation and plans skill sequences with learned preconditions. While HFVCs naturally enable robust and compliant contact-rich behaviors, solvers that synthesize them have traditionally relied on precise object models and closed-loop feedback on object pose, which are difficult to obtain in constrained environments due to occlusions. We first relax HFVCs’ need for precise models and feedback with our HFVC synthesis framework, then learn a point-cloud-based precondition function to classify where HFVC executions will still be successful despite modeling inaccuracies. Finally, we use the learned precondition in a search-based task planner to complete contact-rich manipulation tasks in a shelf domain. Our method achieves a task success rate of 73.2%, outperforming the 51.5% achieved by a baseline without the learned precondition. While the precondition function is trained in simulation, it can also transfer to a real-world setup without further fine-tuning. See supplementary materials and videos at https://sites.google.com/view/constrained-manipulation/.

Conference on Robot Learning (CoRL), Dec 2022

|

|

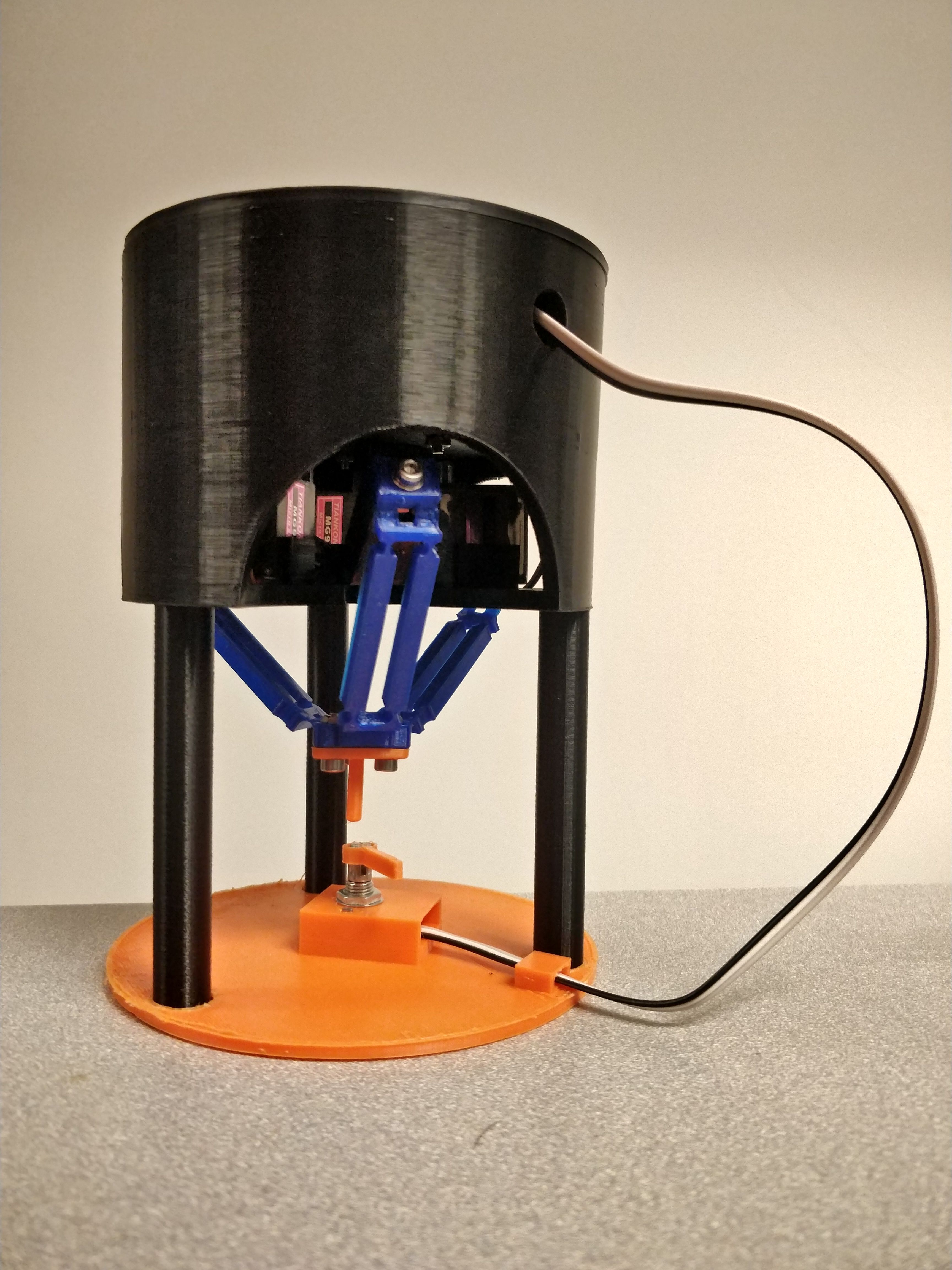

DeltaZ: An Accessible Compliant Delta Robot Manipulator for Research and Education

Sarvesh Patil*, Samuel C. Alvares*, Pragna Mannam, Oliver Kroemer, F. Zeynep Temel

@inproceedings{Patil_2022,

title={DeltaZ: An Accessible Compliant Delta Robot Manipulator for Research and Education},

volume={22},

url={http://dx.doi.org/10.1109/IROS47612.2022.9981257},

DOI={10.1109/iros47612.2022.9981257},

booktitle={2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

publisher={IEEE},

author={Patil, Sarvesh and Alvares, Samuel C. and Mannam, Pragna and Kroemer, Oliver and Temel, F. Zeynep},

year={2022},

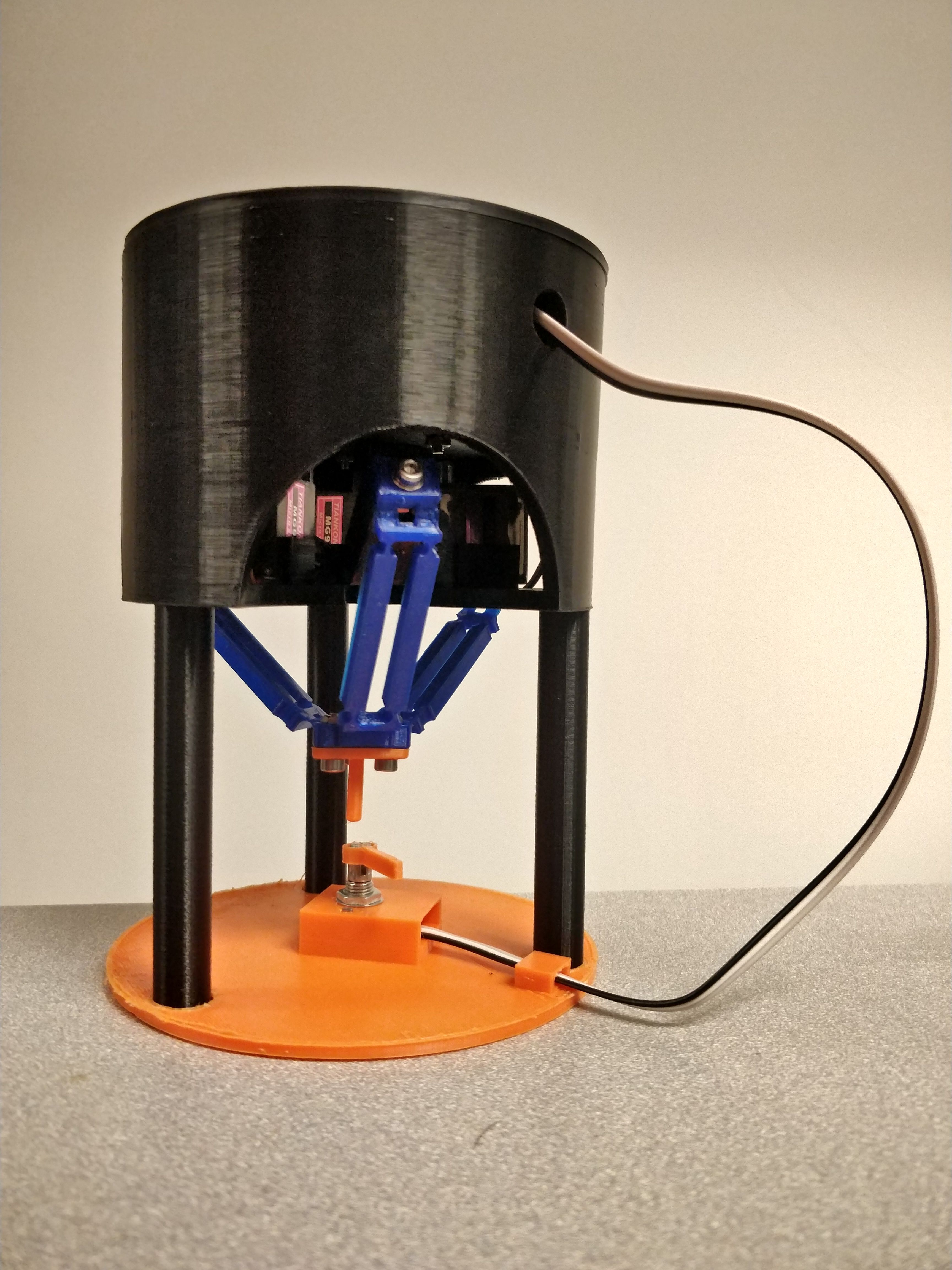

month=oct, pages={13213–13219} }

This paper presents the DeltaZ robot, a centimeter-scale, low-cost, delta-style robot that allows for a broad range of capabilities and robust functionalities. Current technologies allow DeltaZ to be 3D-printed from soft and rigid materials, which makes it easy to assemble and maintain and lowers the barrier to use. The robot's functionality stems from its three translational degrees of freedom and a closed-form kinematic solution, which make manipulation problems more intuitive than with other manipulators. Moreover, the low cost of the robot presents an opportunity to democratize manipulators for a research setting. We also describe how the robot can be used as a reinforcement learning benchmark. Open-source 3D-printable designs and code are available to the public.

International Conference on Intelligent Robots and Systems (IROS), Oct 2022

|

|

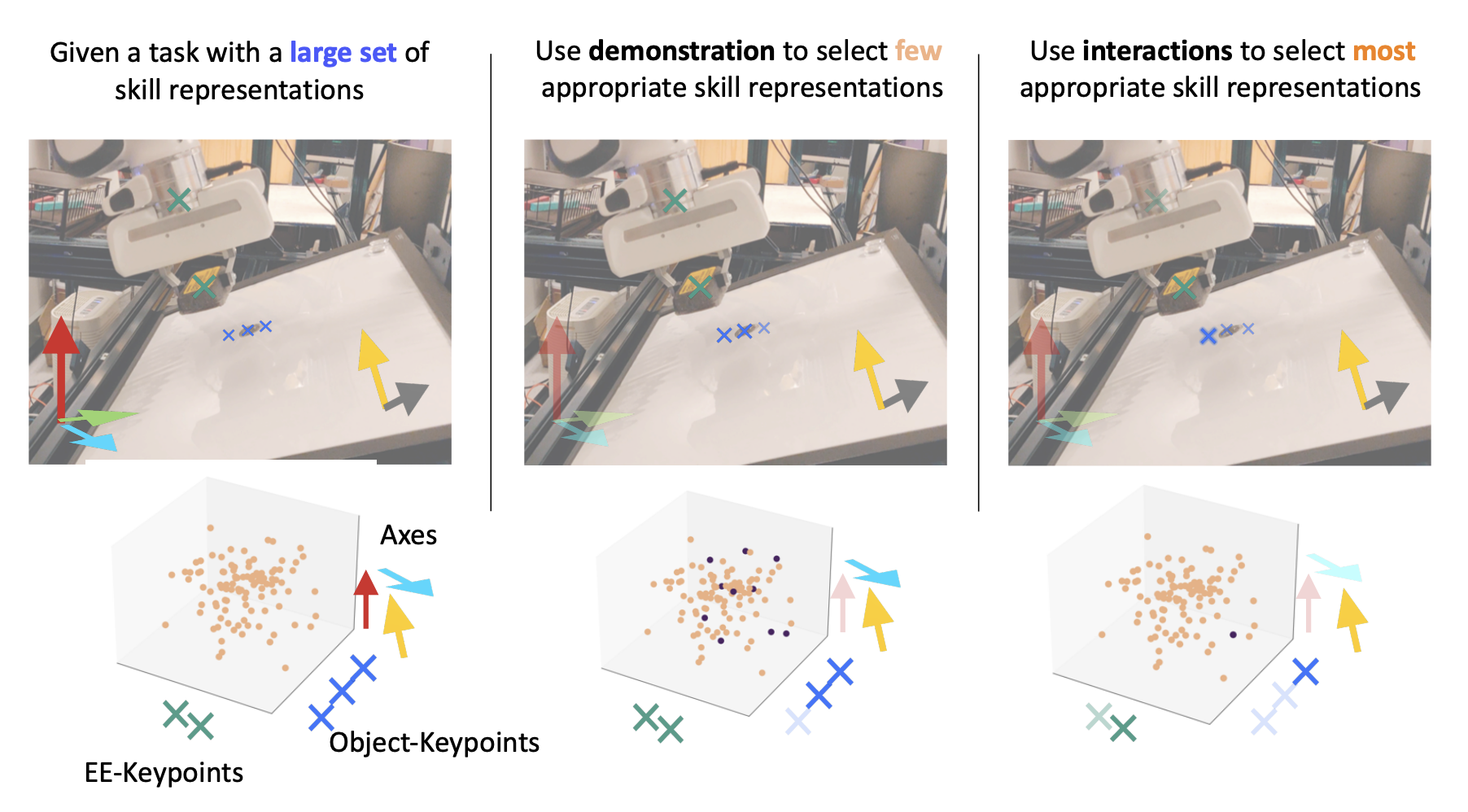

Efficiently Learning Manipulations by Selecting Structured Skill Representations

Mohit Sharma and Oliver Kroemer

@article{sharma2022efficiently,

title={Efficiently Learning Manipulations by Selecting Structured Skill Representations},

author={Mohit Sharma and Oliver Kroemer},

journal={Proceedings of (IROS) IEEE/RSJ International Conference on Intelligent Robots and Systems},

year={2022},

month={October},

publisher={IEEE}

}

A key challenge in learning to perform manipulation tasks is selecting a suitable skill representation. While specific skill representations are often easier to learn, they are often only suitable for a narrow set of tasks. In most prior works, roboticists manually provide the robot with a suitable skill representation to use, e.g., a neural network or DMPs. By contrast, we propose to allow the robot to select the most appropriate skill representation for the underlying task. Given the large space of skill representations, we utilize a single demonstration to select a small set of potential task-relevant representations. This set is then further refined using reinforcement learning to select the most suitable skill representation. Experiments in both simulation and real world show how our proposed approach leads to improved sample efficiency and enables directly learning on the real robot.

International Conference on Intelligent Robots and Systems (IROS), Oct 2022

|

|

Learning by Doing: Controlling a Dynamical System using Causality, Control, and Reinforcement Learning

Sebastian Weichwald, Søren Wengel Mogensen, Tabitha Edith Lee, Dominik Baumann, Oliver Kroemer, Isabelle Guyon, Sebastian Trimpe, Jonas Peters, and Niklas Pfister

@article{Weichwald-2022-135091,

title={Learning by Doing: Controlling a Dynamical System using Causality, Control, and Reinforcement Learning},

author={Sebastian Weichwald and Søren Wengel Mogensen and Tabitha Edith Lee and Dominik Baumann and Oliver Kroemer and Isabelle Guyon and Sebastian Trimpe and Jonas Peters and Niklas Pfister},

journal={Proceedings of (NeurIPS) Neural Information Processing Systems},

year={2022},

month={July},

volume = {176},

pages = {246 - 258},

publisher = {Machine Learning Research}

}

Questions in causality, control, and reinforcement learning go beyond the classical machine learning task of prediction under i.i.d. observations. Instead, these fields consider the problem of learning how to actively perturb a system to achieve a certain effect on a response variable. Arguably, they have complementary views on the problem: In control, one usually aims to first identify the system by excitation strategies to then apply model-based design techniques to control the system. In (non-model-based) reinforcement learning, one directly optimizes a reward. In causality, one focus is on identifiability of causal structure. We believe that combining the different views might create synergies and this competition is meant as a first step toward such synergies. The participants had access to observational and (offline) interventional data generated by dynamical systems. Track CHEM considers an open-loop problem in which a single impulse at the beginning of the dynamics can be set, while Track ROBO considers a closed-loop problem in which control variables can be set at each time step. The goal in both tracks is to infer controls that drive the system to a desired state. Code is open-sourced (https://github.com/LearningByDoingCompetition/learningbydoing-comp) to reproduce the winning solutions of the competition and to facilitate trying out new methods on the competition tasks.

Neural Information Processing Systems (NeurIPS), July 2022

|

|

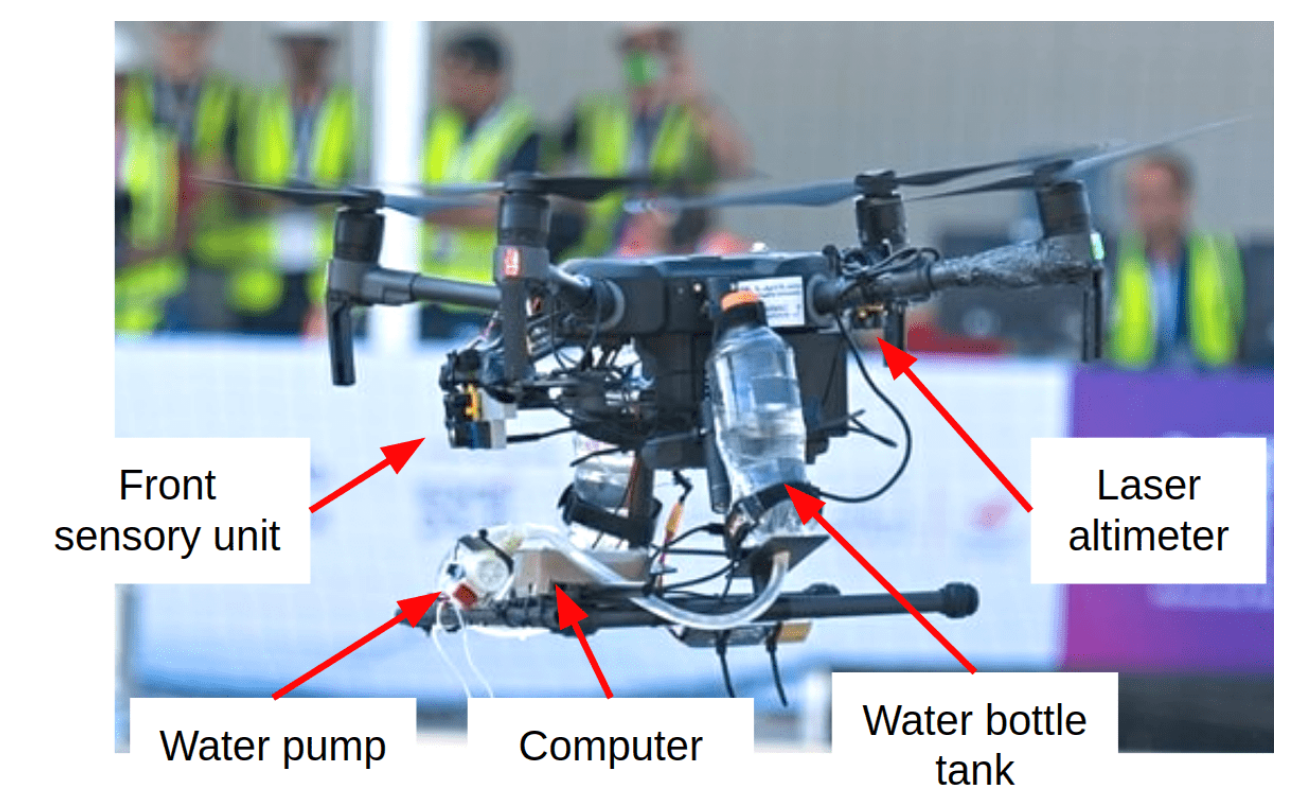

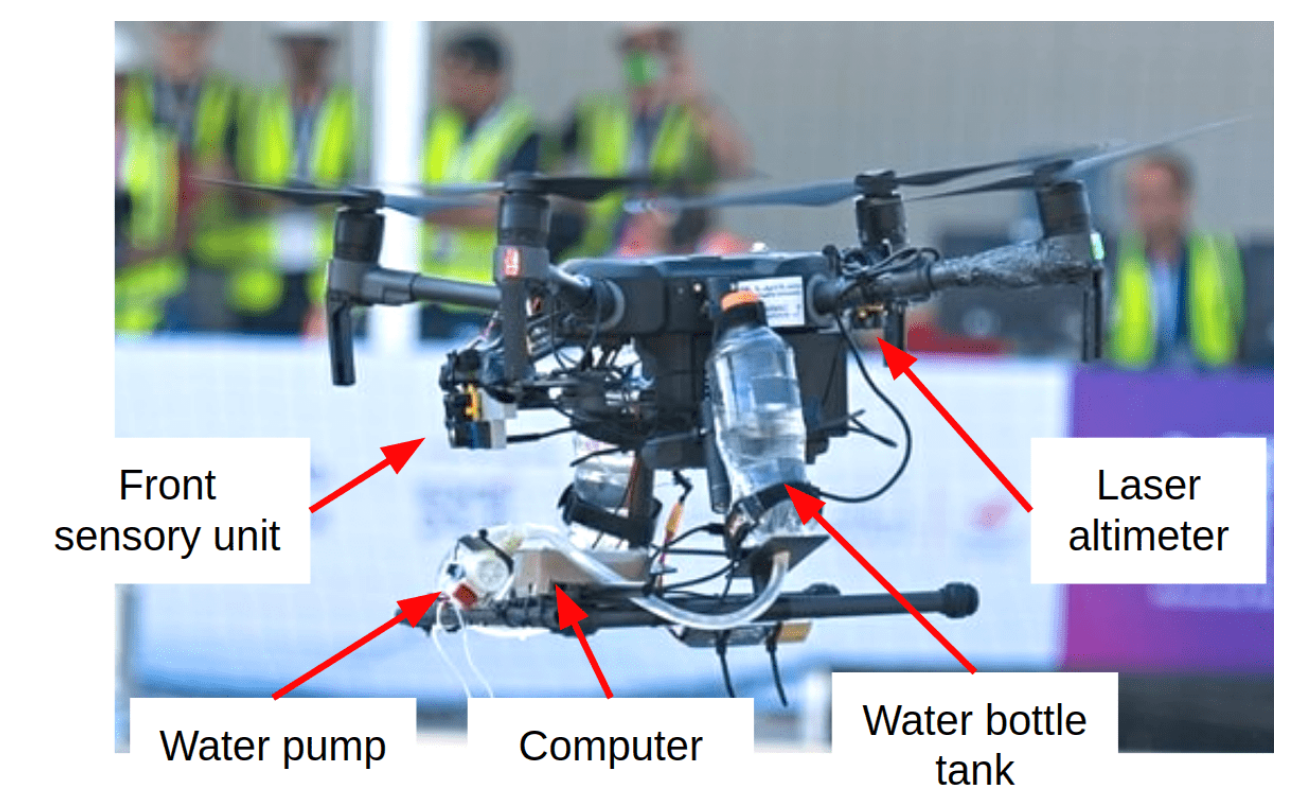

Mission-level Robustness with Rapidly-deployed, Autonomous Aerial Vehicles by Carnegie Mellon Team Tartan at MBZIRC 2020

Anish Bhattacharya, Akshit Gandhi, Lukas Merkle, Rohan Tiwari, Karun Warrior, Stanley Winata, Andrew Saba, Kevin Zhang, Oliver Kroemer, and Sebastian Scherer

@article{Bhattacharya-2021-128844,

author = {Anish Bhattacharya and Akshit Gandhi and Lukas Merkle and Rohan Tiwari and Karun Warrior and Stanley Winata and Andrew Saba and Kevin Zhang and Oliver Kroemer and Sebastian Scherer},

title = {Mission-level Robustness with Rapidly-deployed, Autonomous Aerial Vehicles by Carnegie Mellon Team Tartan at MBZIRC 2020},

journal = {Field Robotics},

volume = {2},

year = {2022},

month = {March},

pages = {172 - 200},

}

For robotics systems to be used in high risk, real-world situations, they have to be quickly deployable and robust to environmental changes, under-performing hardware, and mission subtask failures. Robots are often designed to consider a single sequence of mission events, with complex algorithms lowering individual subtask failure rates under some critical constraints. Our approach is to leverage common techniques in vision and control and encode robustness into mission structure through outcome monitoring and recovery strategies, aided by a system infrastructure that allows for quick mission deployments under tight time constraints and no central communication. We also detail lessons in rapid field robotics development and testing. Systems were developed and evaluated through real-robot experiments at an outdoor test site in Pittsburgh, Pennsylvania, USA, as well as in the 2020 Mohamed Bin Zayed International Robotics Challenge. All competition trials were completed in fully autonomous mode without RTK-GPS. Our system led to 4th place in Challenge 2 and 7th place in the Grand Challenge, and achievements like popping five balloons (Challenge 1), successfully picking and placing a block (Challenge 2), and dispensing the most water autonomously with a UAV of all teams onto an outdoor, real fire (Challenge 3).

Field Robotics Special Issue: The Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020, March 2022

|

|

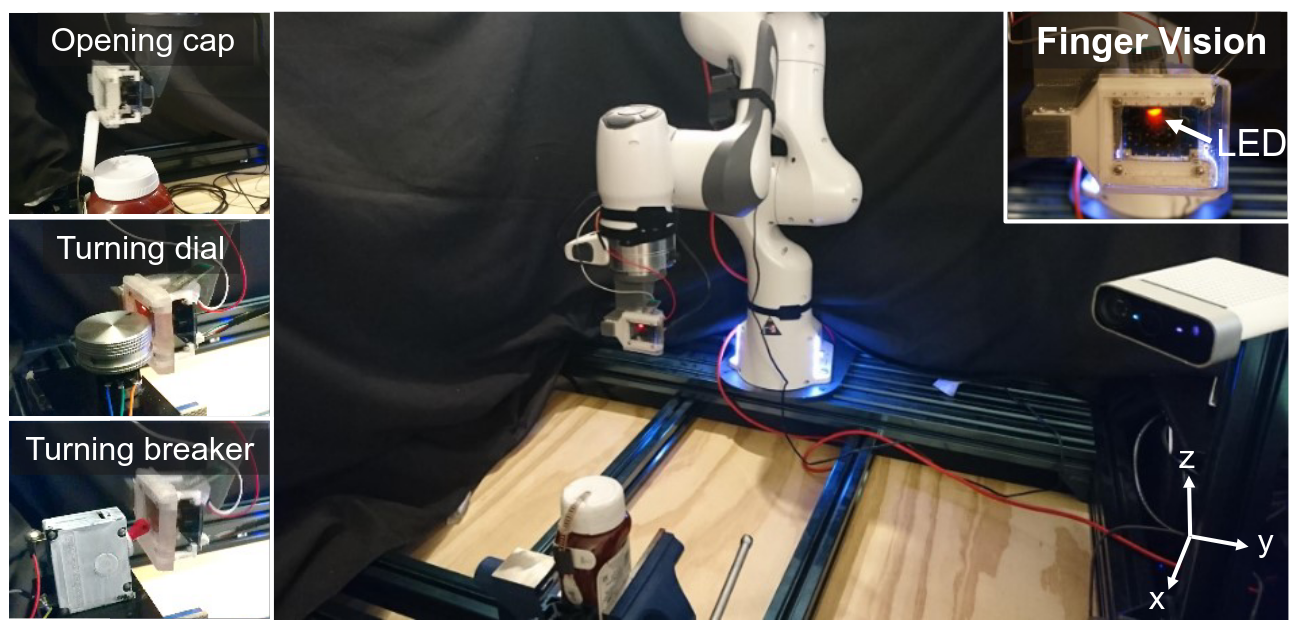

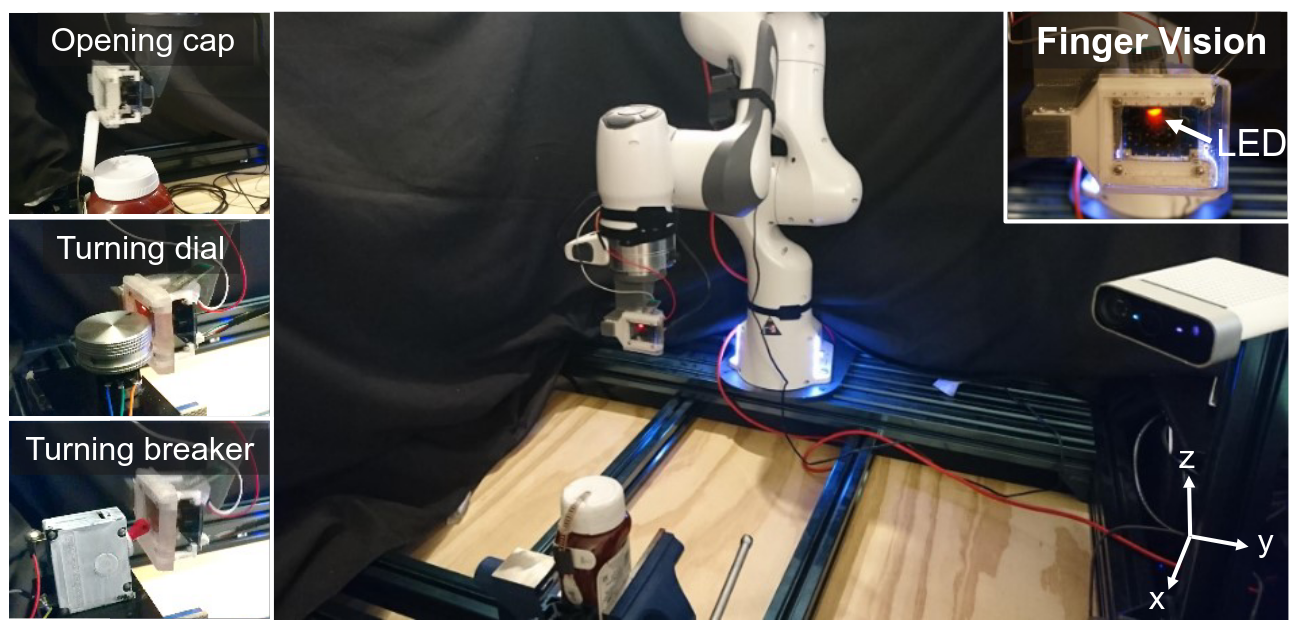

Policy Blending and Recombination for Multimodal Contact-Rich Tasks

Tetsuya Narita and Oliver Kroemer

@article{Narita-2021-128850,

author = {Tetsuya Narita and Oliver Kroemer},

title = {Policy Blending and Recombination for Multimodal Contact-Rich Tasks},

journal = {IEEE Robotics and Automation Letters},

year = {2021},

month = {April},

volume = {6},

number = {2},

pages = {2721 - 2728},

}

Multimodal information such as tactile, proximity and force sensing is essential for performing stable contact-rich manipulations. However, coupling multimodal information and motion control still remains a challenging topic. Rather than learning a monolithic skill policy that takes in all feedback signals at all times, skills should be divided into phases and learn to only use the sensor signals applicable to that phase. This makes learning the primitive policies for each phase easier, and allows the primitive policies to be more easily reused among different skills. However, stopping and abruptly switching between each primitive policy results in longer execution times and less robust behaviours. We therefore propose a blending approach to seamlessly combine the primitive policies into a reliable combined control policy. We evaluate both time-based and state-based blending approaches. The resulting approach was successfully evaluated in simulation and on a real robot, with an augmented finger vision sensor, on three tasks: opening a cap, turning a dial, and flipping a breaker. The evaluations show that the blended policies with multimodal feedback can be easily learned and reliably executed.
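As a rough illustration of time-based blending between two primitive policies, the sketch below mixes their actions with a weight that ramps linearly around a switch time instead of switching abruptly. The abstract does not give the blending rule, so the convex combination, the linear ramp, and the names `blend_weight`, `blended_action`, `t_switch`, and `duration` are assumptions made for this example.

```python
def blend_weight(t, t_switch, duration):
    """Linear ramp from 0 to 1 centered on t_switch (hypothetical schedule)."""
    start = t_switch - duration / 2.0
    w = (t - start) / duration
    return min(1.0, max(0.0, w))


def blended_action(t, obs, policy_a, policy_b, t_switch, duration):
    """Convex combination of two primitive policies' actions at time t."""
    w = blend_weight(t, t_switch, duration)
    a = policy_a(obs)
    b = policy_b(obs)
    return [(1.0 - w) * ai + w * bi for ai, bi in zip(a, b)]


# Two placeholder primitives that ignore the observation.
def approach(obs):
    return [1.0, 0.0]


def turn(obs):
    return [0.0, 2.0]


midway = blended_action(1.0, None, approach, turn, t_switch=1.0, duration=0.5)
```

A state-based variant would compute the weight from a feature of `obs` (for example a contact signal) rather than from `t`.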

IEEE Robotics and Automation Letters (RA-L), April 2021 - Best Manipulation Paper Finalist at ICRA 2021

|

|

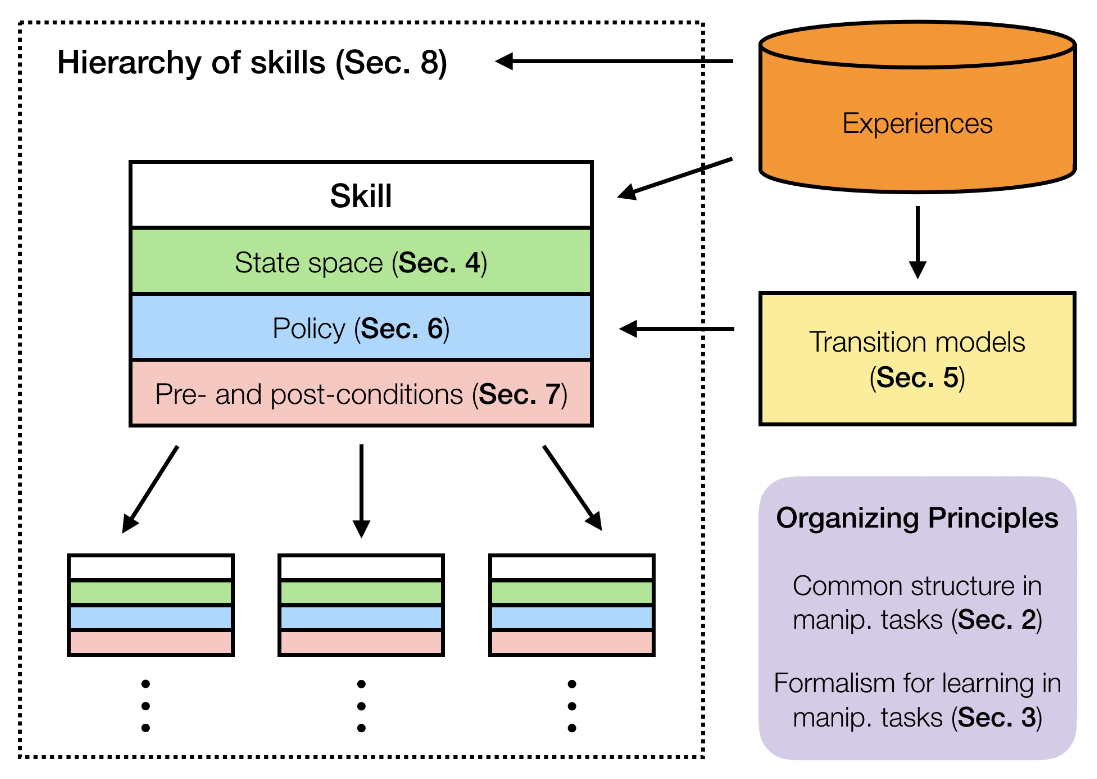

A Review of Robot Learning for Manipulation: Challenges, Representations, and Algorithms

Oliver Kroemer, Scott Niekum, and George Konidaris

@article{Kroemer-2021-128846,

author = {Oliver Kroemer and Scott Niekum and George Konidaris},

title = {A Review of Robot Learning for Manipulation: Challenges, Representations, and Algorithms},

journal = {Journal of Machine Learning Research},

year = {2021},

month = {January},

volume = {22},

number = {30},

pages = {1 - 82},

}

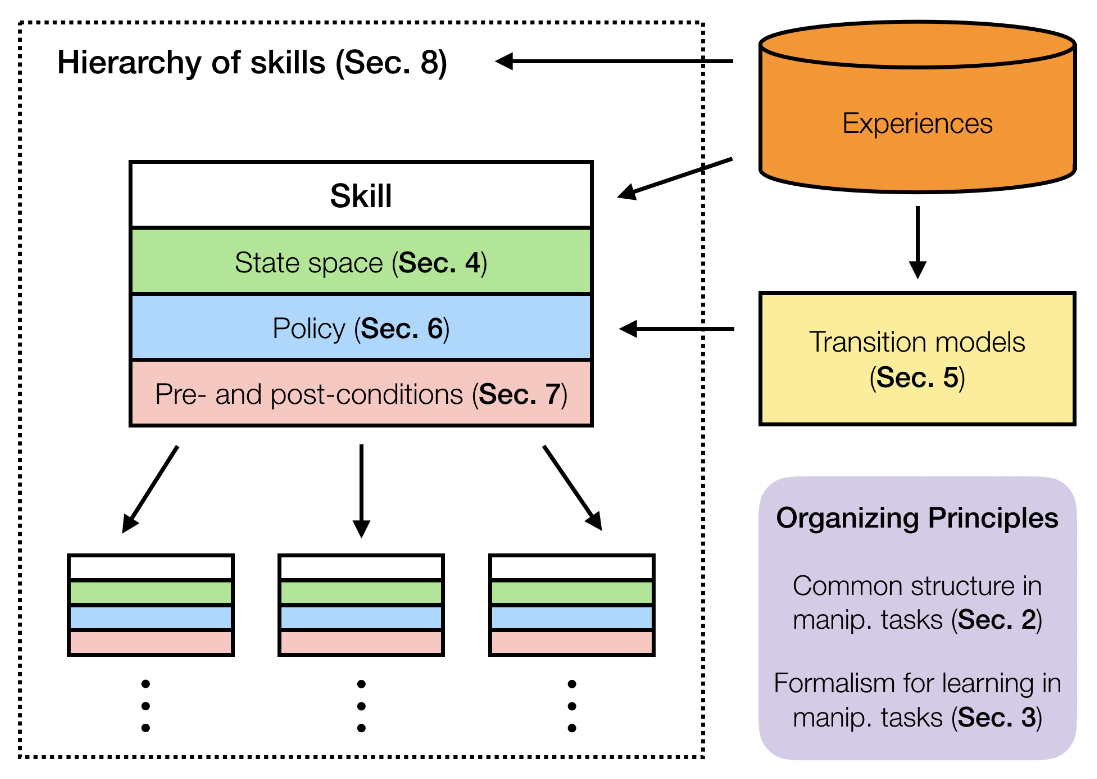

A key challenge in intelligent robotics is creating robots that are capable of directly interacting with the world around them to achieve their goals. The last decade has seen substantial growth in research on the problem of robot manipulation, which aims to exploit the increasing availability of affordable robot arms and grippers to create robots capable of directly interacting with the world to achieve their goals. Learning will be central to such autonomous systems, as the real world contains too much variation for a robot to expect to have an accurate model of its environment, the objects in it, or the skills required to manipulate them, in advance. We aim to survey a representative subset of that research which uses machine learning for manipulation. We describe a formalization of the robot manipulation learning problem that synthesizes existing research into a single coherent framework and highlight the many remaining research opportunities and challenges.

Journal of Machine Learning Research, Jan 2021

|

|

A Review of Tactile Information: Perception and Action Through Touch

Qiang Li, Oliver Kroemer, Zhe Su, Filipe Veiga, Mohsen Kaboli, and Helge Ritter

@article{Li-2020-128848,

author = {Qiang Li and Oliver Kroemer and Zhe Su and Filipe Veiga and Mohsen Kaboli and Helge Ritter},

title = {A Review of Tactile Information: Perception and Action Through Touch},

journal = {IEEE Transactions on Robotics},

year = {2020},

month = {December},

volume = {36},

number = {6},

pages = {1619 - 1634},

}

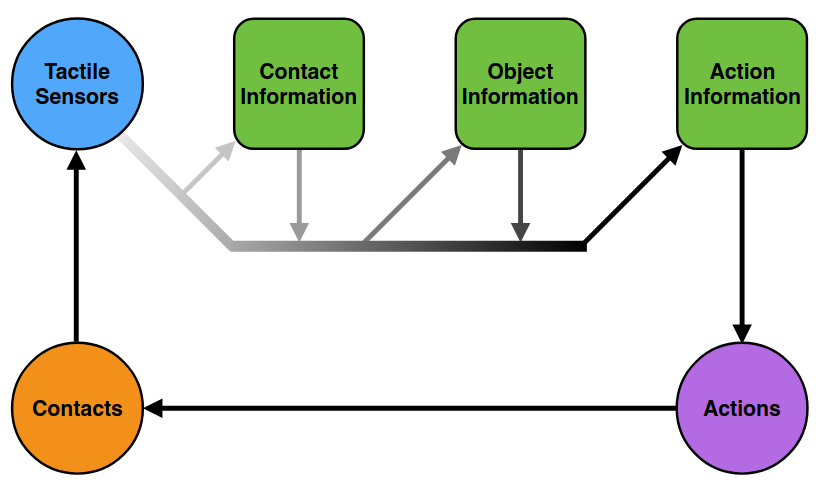

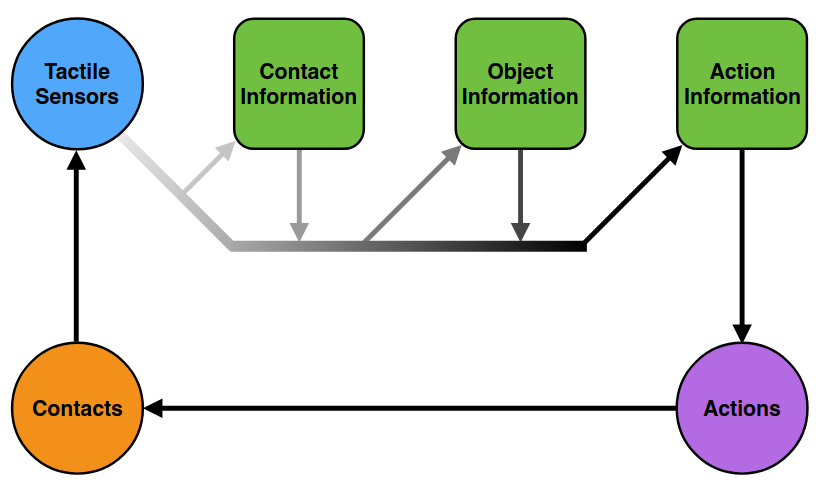

Tactile sensing is a key sensor modality for robots interacting with their surroundings. These sensors provide a rich and diverse set of data signals that contain detailed information collected from contacts between the robot and its environment. The data is however not limited to individual contacts and can be used to extract a wide range of information about the objects in the environment as well as the actions of the robot during the interactions. In this paper, we provide an overview of tactile information and its applications in robotics. We present a hierarchy consisting of raw, contact, object, and action levels to structure the tactile information, with higher-level information often building upon lower-level information. We discuss different types of information that can be extracted at each level of the hierarchy. The paper also includes an overview of different types of robot applications and the types of tactile information that they employ. Finally we end the article with a discussion for future tactile applications which are still beyond the current capabilities of robots.

IEEE Transactions on Robotics, Dec 2020

|

|

Localization and Force-Feedback with Soft Magnetic Stickers for Precise Robot Manipulation

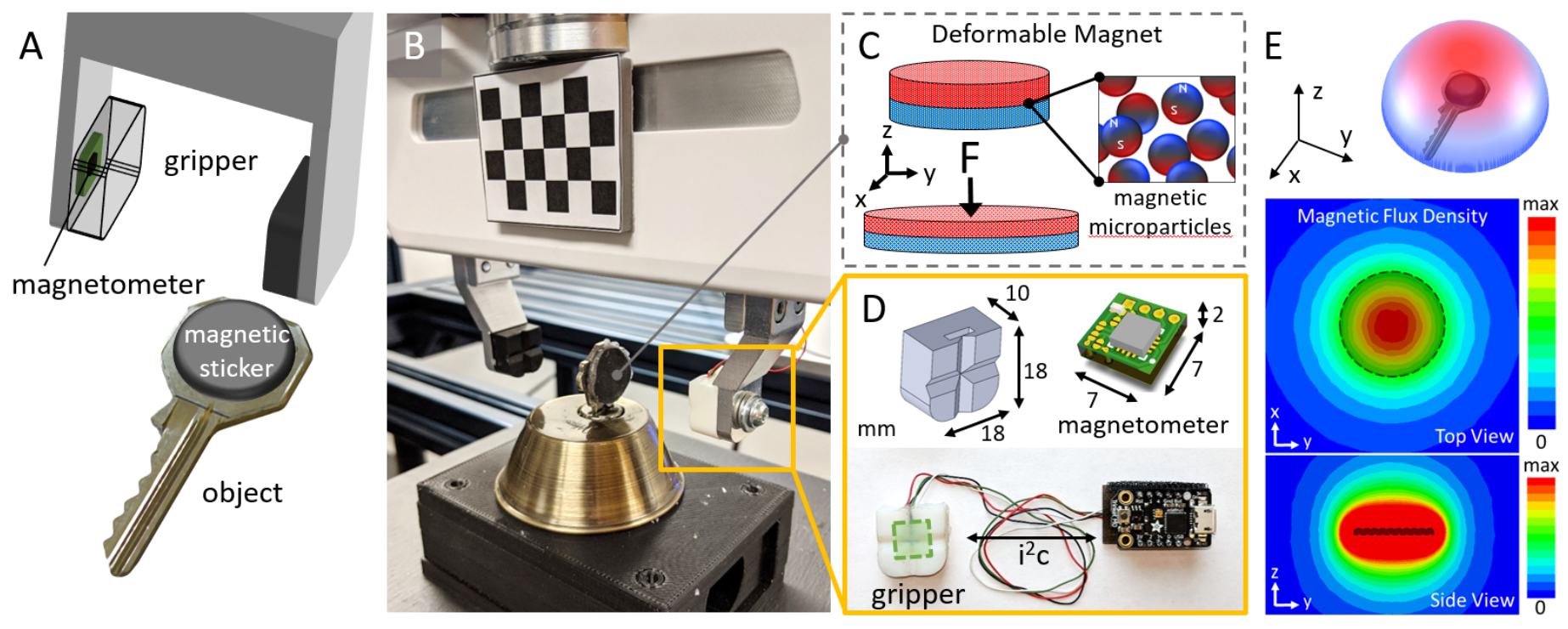

Tess Hellebrekers, Kevin Zhang, Manuela Veloso, Oliver Kroemer, and Carmel Majidi

@inproceedings{9341281,

author={Hellebrekers, Tess and Zhang, Kevin and Veloso, Manuela and Kroemer, Oliver and Majidi, Carmel},

booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title={Localization and Force-Feedback with Soft Magnetic Stickers for Precise Robot Manipulation},

year={2020},

volume={},

number={},

pages={8867-8874},

keywords={Location awareness;Magnetometers;Tools;Robot sensing systems;Soft magnetic materials;Task analysis;Robots},

doi={10.1109/IROS45743.2020.9341281}

}

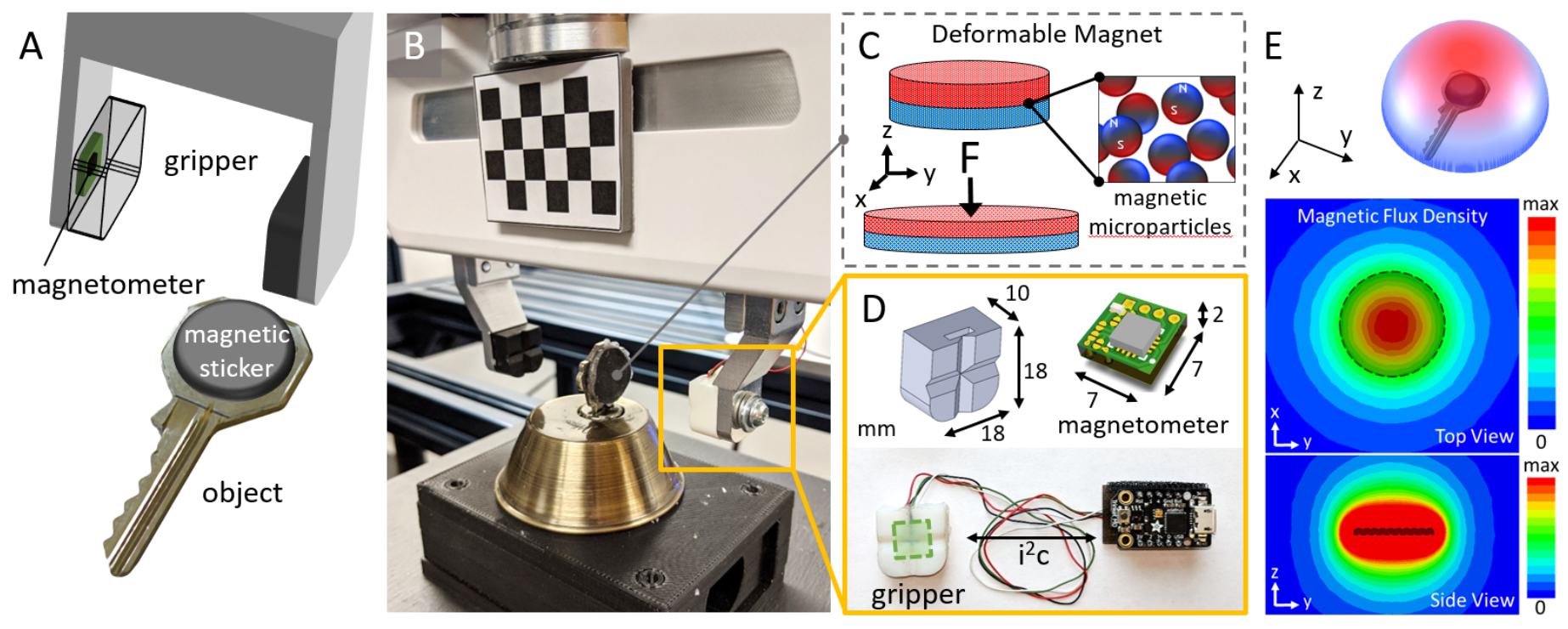

Tactile sensors are used in robot manipulation to reduce uncertainty regarding hand-object pose estimation. However, existing sensor technologies tend to be bulky and provide signals that are difficult to interpret into actionable changes. Here, we achieve wireless tactile sensing with soft and conformable magnetic stickers that can be easily placed on objects within the robot's workspace. We embed a small magnetometer within the robot's fingertip that can localize to a magnetic sticker with sub-mm accuracy and enable the robot to pick up objects in the same place, in the same way, every time. In addition, we utilize the soft magnets' ability to exhibit magnetic field changes upon contact forces. We demonstrate the localization and force-feedback features with a 7-DOF Franka arm on deformable tool use and a key insertion task for applications in home, medical, and food robotics. By increasing the reliability of interaction with common tools, this approach to object localization and force sensing can improve robot manipulation performance for delicate, high-precision tasks.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2020

|

|

Learning Skills to Patch Plans Based on Inaccurate Models

Alex Lagrassa, Steven Lee, and Oliver Kroemer

@inproceedings{9341475,

author={Lagrassa, Alex and Lee, Steven and Kroemer, Oliver},

booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title={Learning Skills to Patch Plans Based on Inaccurate Models},

year={2020},

volume={},

number={},

pages={9441-9448},

keywords={Adaptation models;Shape;Switches;Planning;Trajectory;Reliability;Task analysis},

doi={10.1109/IROS45743.2020.9341475}

}

Planners using accurate models can be effective for accomplishing manipulation tasks in the real world, but are typically highly specialized and require significant fine-tuning to be reliable. Meanwhile, learning is useful for adaptation, but can require a substantial amount of data collection. In this paper, we propose a method that improves the efficiency of sub-optimal planners with approximate but simple and fast models by switching to a model-free policy when unexpected transitions are observed. Unlike previous work, our method specifically addresses when the planner fails due to transition model error by patching with a local policy only where needed. First, we use a sub-optimal model-based planner to perform a task until model failure is detected. Next, we learn a local model-free policy from expert demonstrations to complete the task in regions where the model failed. To show the efficacy of our method, we perform experiments with a shape insertion puzzle and compare our results to both pure planning and imitation learning approaches. We then apply our method to a door opening task. Our experiments demonstrate that our patch-enhanced planner performs more reliably than pure planning and with lower overall sample complexity than pure imitation learning.
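The switching idea can be sketched on a one-dimensional toy problem: follow the planner while the approximate model's prediction matches the observed transition, and hand control to a local policy in states where a mismatch was detected. Everything here is hypothetical (the integer state space, the sticky region, the action names, and the hand-written `local_policy`, which stands in for a policy learned from expert demonstrations).

```python
def run_with_patch(env_step, model_step, planner, local_policy, x0, goal,
                   tol=1e-6, max_steps=50):
    """Execute planner actions, patching states where the model proved wrong."""
    x, log = x0, []
    patched = set()  # states where an unexpected transition was observed
    for _ in range(max_steps):
        if x == goal:
            break
        use_patch = x in patched
        action = local_policy(x) if use_patch else planner(x)
        x_next = env_step(x, action)
        # Model failure: the observed transition deviates from the prediction.
        if not use_patch and abs(x_next - model_step(x, action)) > tol:
            patched.add(x)
        log.append((x, action, "patch" if use_patch else "plan"))
        x = x_next
    return x, log


def env_step(x, action):
    # True dynamics: "step" gets stuck at x = 4 and x = 5, "push" always advances.
    if action == "step" and x in (4, 5):
        return x
    return x + 1


def model_step(x, action):
    return x + 1  # approximate model: every action advances by one


final_x, log = run_with_patch(env_step, model_step,
                              planner=lambda x: "step",
                              local_policy=lambda x: "push",
                              x0=0, goal=8)
```

The local policy is only invoked in the two states where the model failed, which mirrors the "patch only where needed" idea in the abstract.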

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Oct 2020

|

|

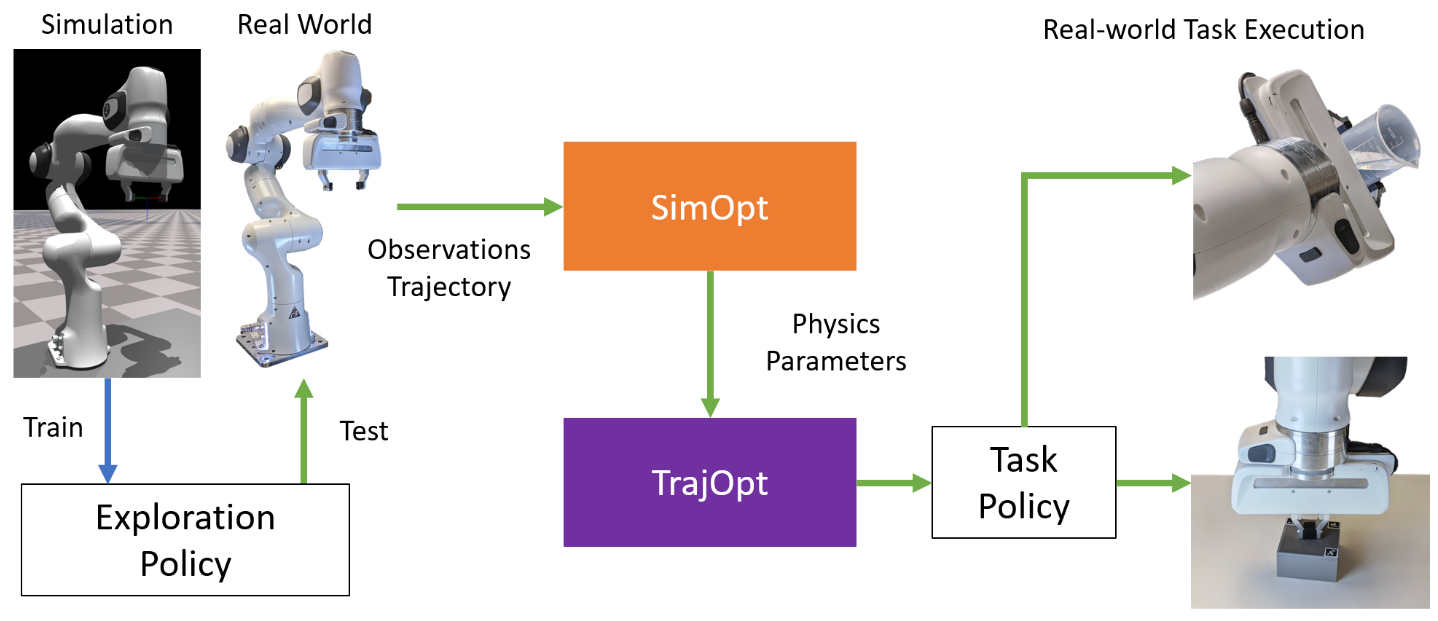

Learning Active Task-Oriented Exploration Policies for Bridging the Sim-to-Real Gap

Jacky Liang, Saumya Saxena, and Oliver Kroemer

@inproceedings{liang2020learning,

title={Learning Active Task-Oriented Exploration Policies for Bridging the Sim-to-Real Gap},

author={Liang, Jacky and Saxena, Saumya and Kroemer, Oliver},

booktitle={Robotics: Science and Systems},

year={2020}

}

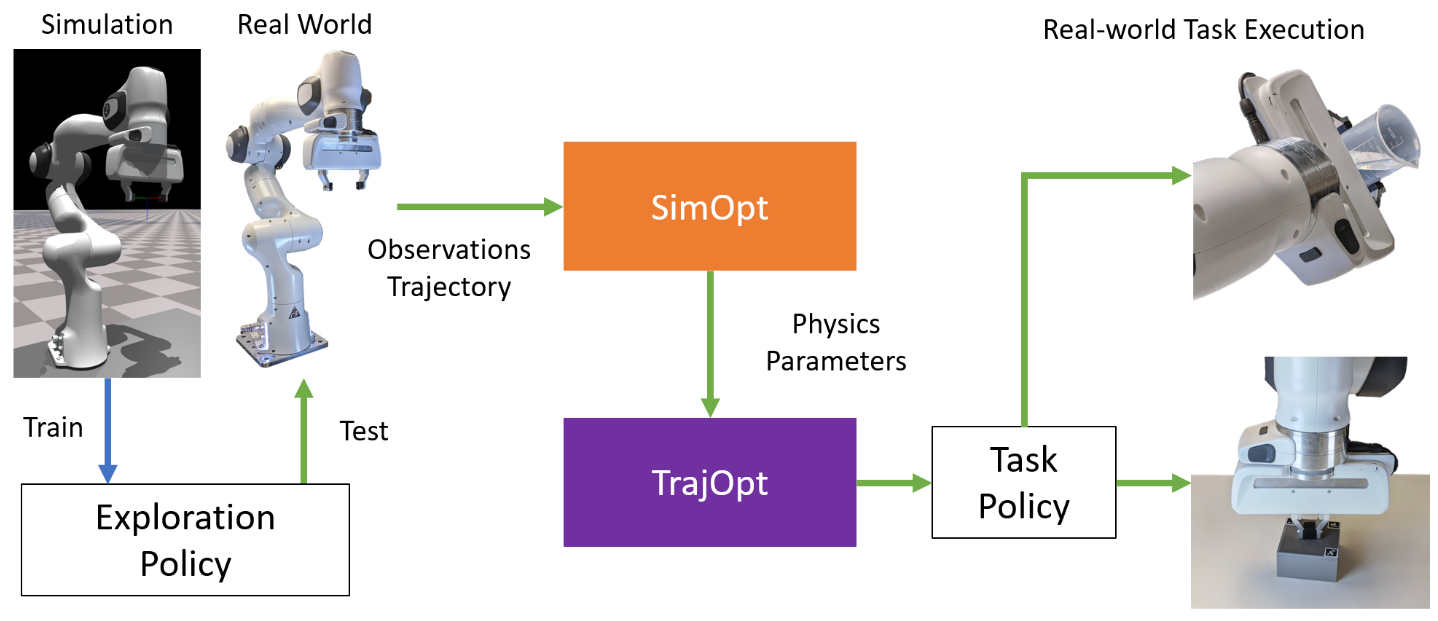

Training robotic policies in simulation suffers from the sim-to-real gap, as simulated dynamics can be different from real-world dynamics. Past works tackled this problem through domain randomization and online system-identification. The former is sensitive to the manually-specified training distribution of dynamics parameters and can result in behaviors that are overly conservative. The latter requires learning policies that concurrently perform the task and generate useful trajectories for system identification. In this work, we propose and analyze a framework for learning exploration policies that explicitly perform task-oriented exploration actions to identify task-relevant system parameters. These parameters are then used by model-based trajectory optimization algorithms to perform the task in the real world. We instantiate the framework in simulation with the Linear Quadratic Regulator as well as in the real world with pouring and object dragging tasks. Experiments show that task-oriented exploration helps model-based policies adapt to systems with initially unknown parameters, and it leads to better task performance than task-agnostic exploration. See videos and supplementary materials at https://sites.google.com/view/task-oriented-exploration/
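To make the role of an identified parameter concrete, the sketch below computes the infinite-horizon discrete-time LQR gain for a scalar system x[t+1] = a*x[t] + b*u[t] with stage cost q*x^2 + r*u^2 by iterating the Riccati recursion. It is a generic textbook LQR, not the paper's exploration framework; the scalar system and the two values of `b` (standing for a parameter that exploration would have to identify) are assumptions for illustration.

```python
def scalar_lqr_gain(a, b, q=1.0, r=1.0, n_iters=500):
    """Return K such that u = -K * x minimizes the sum of q*x^2 + r*u^2."""
    p = q
    for _ in range(n_iters):
        # Scalar discrete-time Riccati recursion.
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    return a * b * p / (r + b * b * p)


# The optimal gain depends on the input gain b, so a controller built with the
# wrong b is suboptimal for the real system.
k_strong = scalar_lqr_gain(a=1.0, b=1.0)
k_weak = scalar_lqr_gain(a=1.0, b=0.5)
```

In this toy setting, an exploration action only needs to reveal `b` accurately enough to pick the right gain, which is the sense in which exploration can be task-oriented.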

Robotics: Science and Systems (RSS), July 2020

|

|

In-Hand Object Pose Tracking via Contact Feedback and GPU-Accelerated Robotic Simulation

Jacky Liang, Ankur Handa, Karl Van Wyk, Viktor Makoviychuk, Oliver Kroemer, and Dieter Fox

@inproceedings{9197117,

author={Liang, Jacky and Handa, Ankur and Wyk, Karl Van and Makoviychuk, Viktor and Kroemer, Oliver and Fox, Dieter},

booktitle={2020 IEEE International Conference on Robotics and Automation (ICRA)},

title={In-Hand Object Pose Tracking via Contact Feedback and GPU-Accelerated Robotic Simulation},

year={2020},

volume={},

number={},

pages={6203-6209},

keywords={Pose estimation;Robot sensing systems;Physics;Heuristic algorithms;Cost function},

doi={10.1109/ICRA40945.2020.9197117}

}

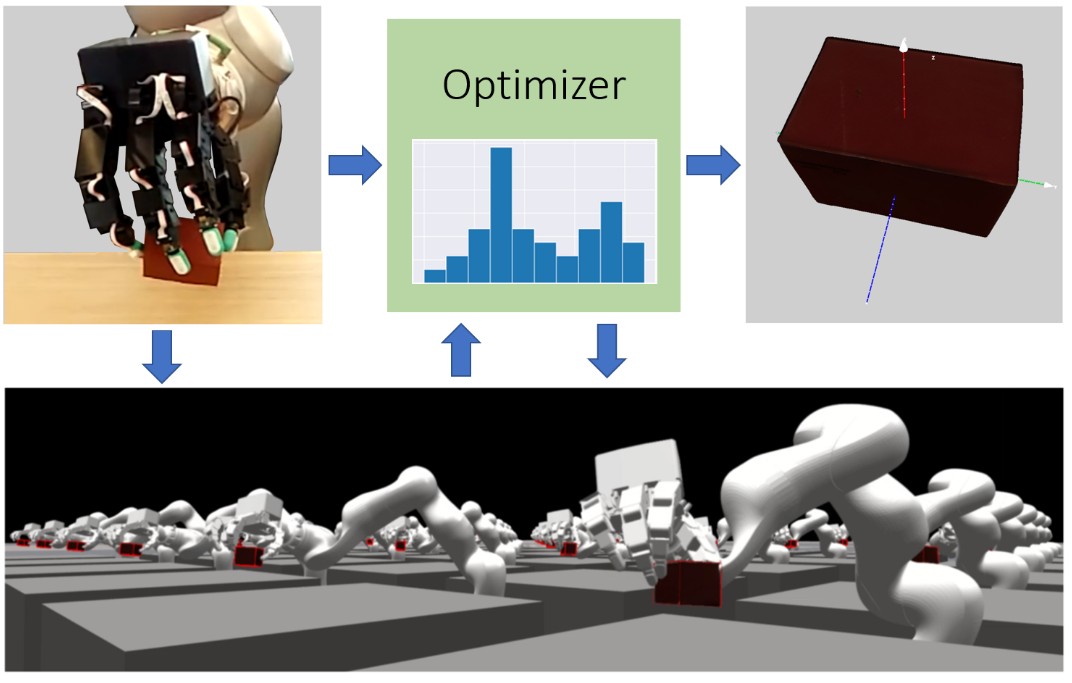

Tracking the pose of an object while it is being held and manipulated by a robot hand is difficult for vision-based methods due to significant occlusions. Prior works have explored using contact feedback and particle filters to localize in-hand objects. However, they have mostly focused on the static grasp setting and not when the object is in motion, as doing so requires modeling of complex contact dynamics. In this work, we propose using GPU-accelerated parallel robot simulations and derivative-free, sample-based optimizers to track in-hand object poses with contact feedback during manipulation. We use physics simulation as the forward model for robot-object interactions, and the algorithm jointly optimizes for the state and the parameters of the simulations, so they better match with those of the real world. Our method runs in real-time (30Hz) on a single GPU, and it achieves an average point cloud distance error of 6mm in simulation experiments and 13mm in the real-world ones.
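As a minimal illustration of a derivative-free, sample-based optimizer, the sketch below uses the cross-entropy method to recover a single scalar offset by minimizing a simulated-versus-observed error. The cross-entropy method is chosen here only as a familiar example of this optimizer family; the abstract does not say which optimizers were used. The quadratic `sim_error` stands in for a physics simulation, and all names and parameter values are hypothetical.

```python
import random
import statistics


def cem_minimize(cost, mean=0.0, std=1.0, n_samples=64, n_elite=8,
                 n_iters=20, seed=0):
    """Cross-entropy method over one scalar parameter."""
    rng = random.Random(seed)
    for _ in range(n_iters):
        # Each sample would correspond to one parallel simulation instance.
        samples = [rng.gauss(mean, std) for _ in range(n_samples)]
        elites = sorted(samples, key=cost)[:n_elite]
        # Refit the sampling distribution to the lowest-cost samples.
        mean = statistics.fmean(elites)
        std = max(statistics.pstdev(elites), 1e-6)
    return mean


def sim_error(offset, true_offset=0.3):
    # Placeholder for the mismatch between simulated and observed contacts.
    return (offset - true_offset) ** 2


estimate = cem_minimize(sim_error)
```

Because every sample is evaluated independently, the cost evaluations can run in parallel, which is the property that makes this family of optimizers a natural fit for batched simulation.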

International Conference on Robotics and Automation (ICRA), May 2020

|

|

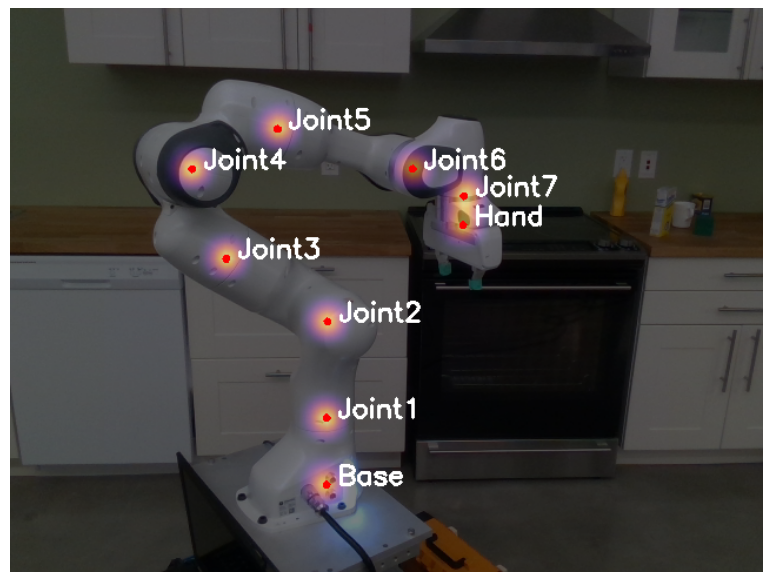

Camera-to-Robot Pose Estimation from a Single Image

Timothy E. Lee, Jonathan Tremblay, Thang To, Jia Cheng, Terry Mosier, Oliver Kroemer, Dieter Fox, and Stan Birchfield

@inproceedings{9196596,

author={Lee, Timothy E. and Tremblay, Jonathan and To, Thang and Cheng, Jia and Mosier, Terry and Kroemer, Oliver and Fox, Dieter and Birchfield, Stan},

booktitle={2020 IEEE International Conference on Robotics and Automation (ICRA)},

title={Camera-to-Robot Pose Estimation from a Single Image},

year={2020},

volume={},

number={},

pages={9426-9432},

keywords={Cameras;Robot vision systems;Robot kinematics;Calibration;Two dimensional displays;Training},

doi={10.1109/ICRA40945.2020.9196596}

}

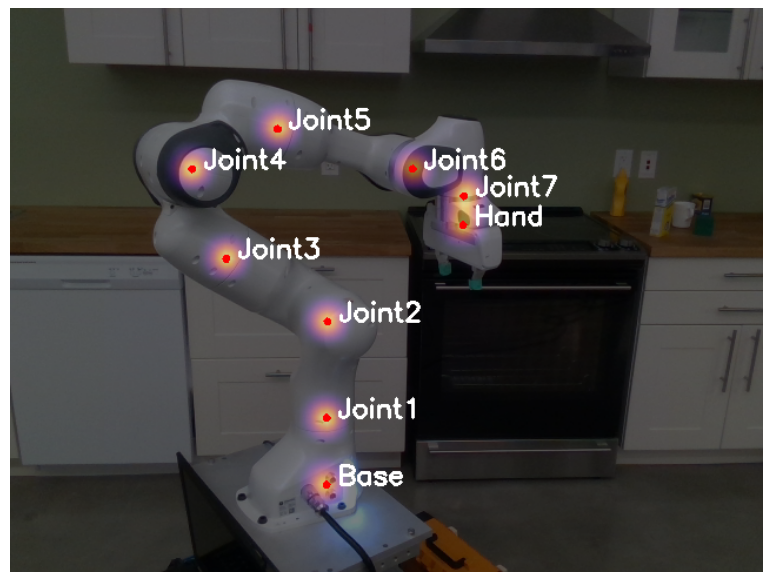

We present an approach for estimating the pose of an external camera with respect to a robot using a single RGB image of the robot. The image is processed by a deep neural network to detect 2D projections of keypoints (such as joints) associated with the robot. The network is trained entirely on simulated data using domain randomization to bridge the reality gap. Perspective-n-point (PnP) is then used to recover the camera extrinsics, assuming that the camera intrinsics and joint configuration of the robot manipulator are known. Unlike classic hand-eye calibration systems, our method does not require an off-line calibration step. Rather, it is capable of computing the camera extrinsics from a single frame, thus opening the possibility of on-line calibration. We show experimental results for three different robots and camera sensors, demonstrating that our approach is able to achieve accuracy with a single frame that is comparable to that of classic off-line hand-eye calibration using multiple frames. With additional frames from a static pose, accuracy improves even further. Code, datasets, and pretrained models for three widely-used robot manipulators are made available.

International Conference on Robotics and Automation (ICRA), May 2020

|

|

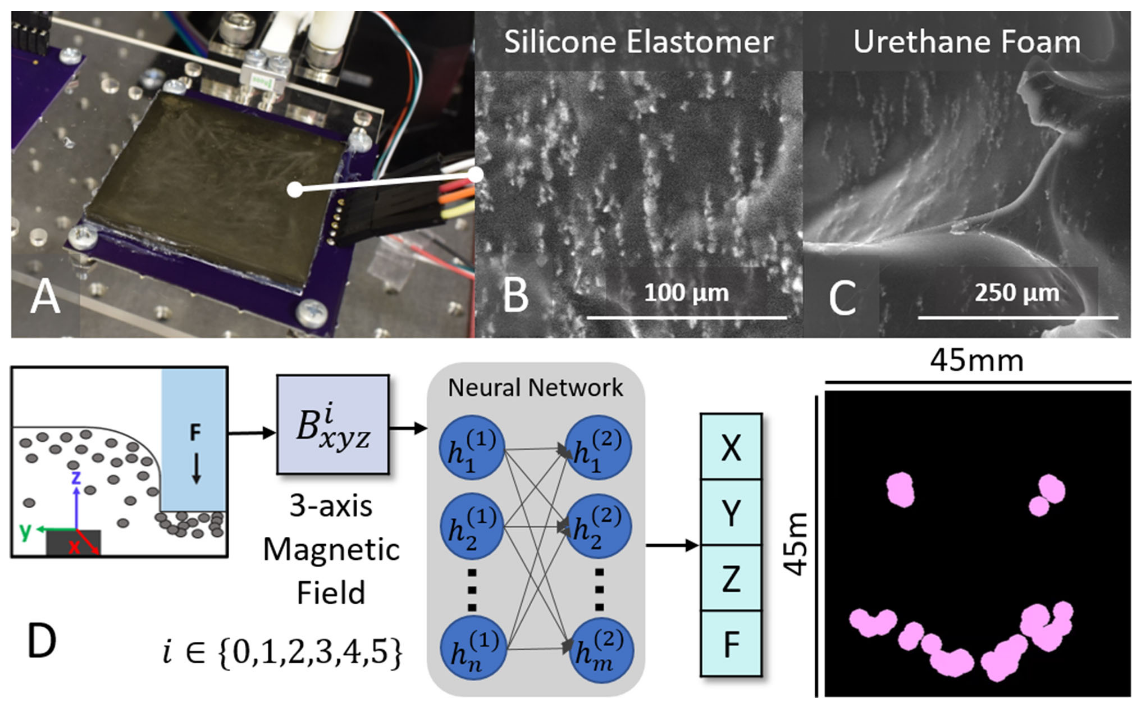

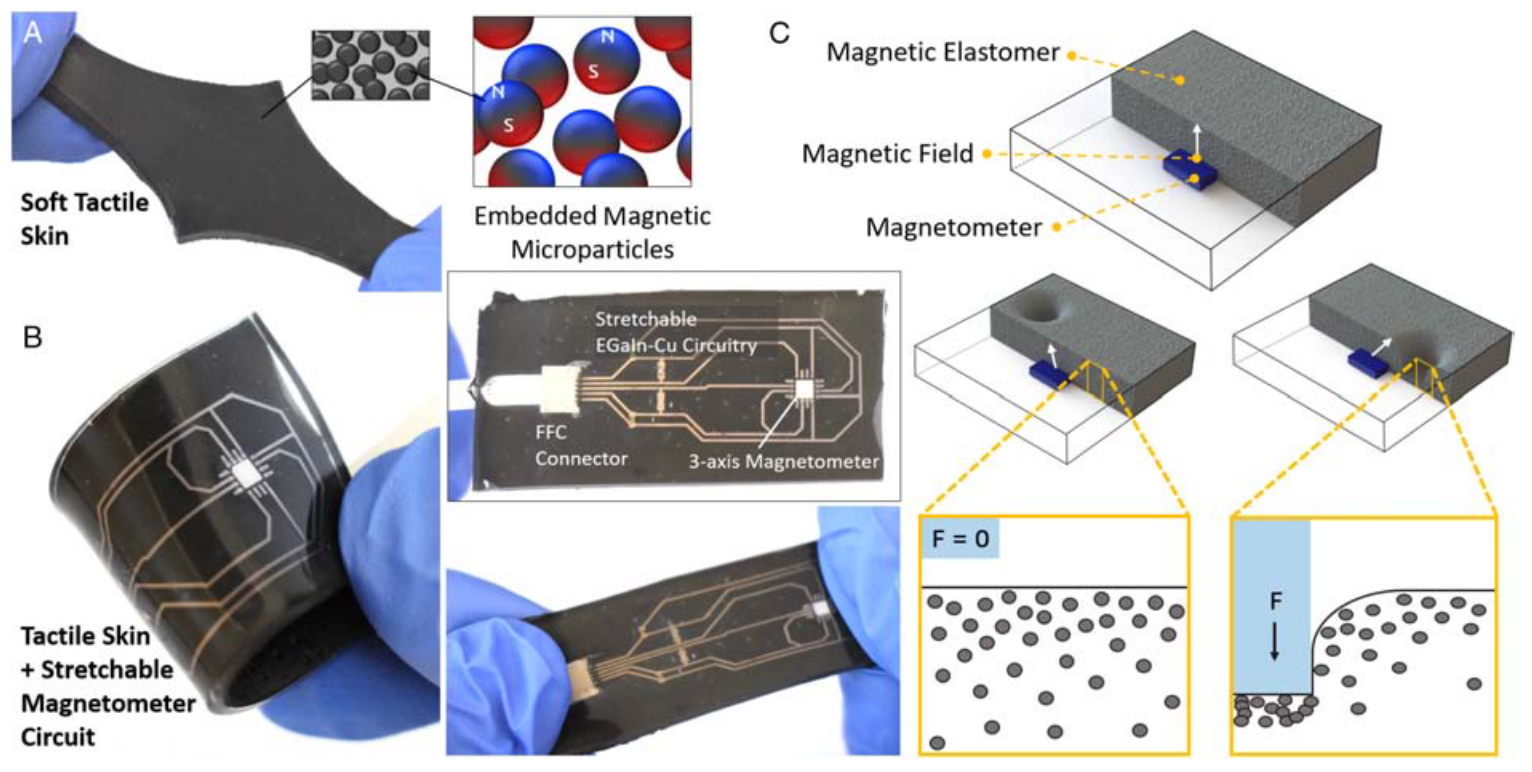

Soft Magnetic Tactile Skin for Continuous Force and Location Estimation Using Neural Networks

Tess Hellebrekers, Nadine Chang, Keene Chin, Michael J Ford, Oliver Kroemer, and Carmel Majidi

@article{9050905,

author={Hellebrekers, Tess and Chang, Nadine and Chin, Keene and Ford, Michael J. and Kroemer, Oliver and Majidi, Carmel},

journal={IEEE Robotics and Automation Letters},

title={Soft Magnetic Tactile Skin for Continuous Force and Location Estimation Using Neural Networks},

year={2020},

volume={5},

number={3},

pages={3892-3898},

keywords={Magnetometers;Soft magnetic materials;Skin;Robot sensing systems;Force;Magnetomechanical effects;Soft sensors and actuators;force and tactile sensing;soft robot materials and design},

doi={10.1109/LRA.2020.2983707}

}

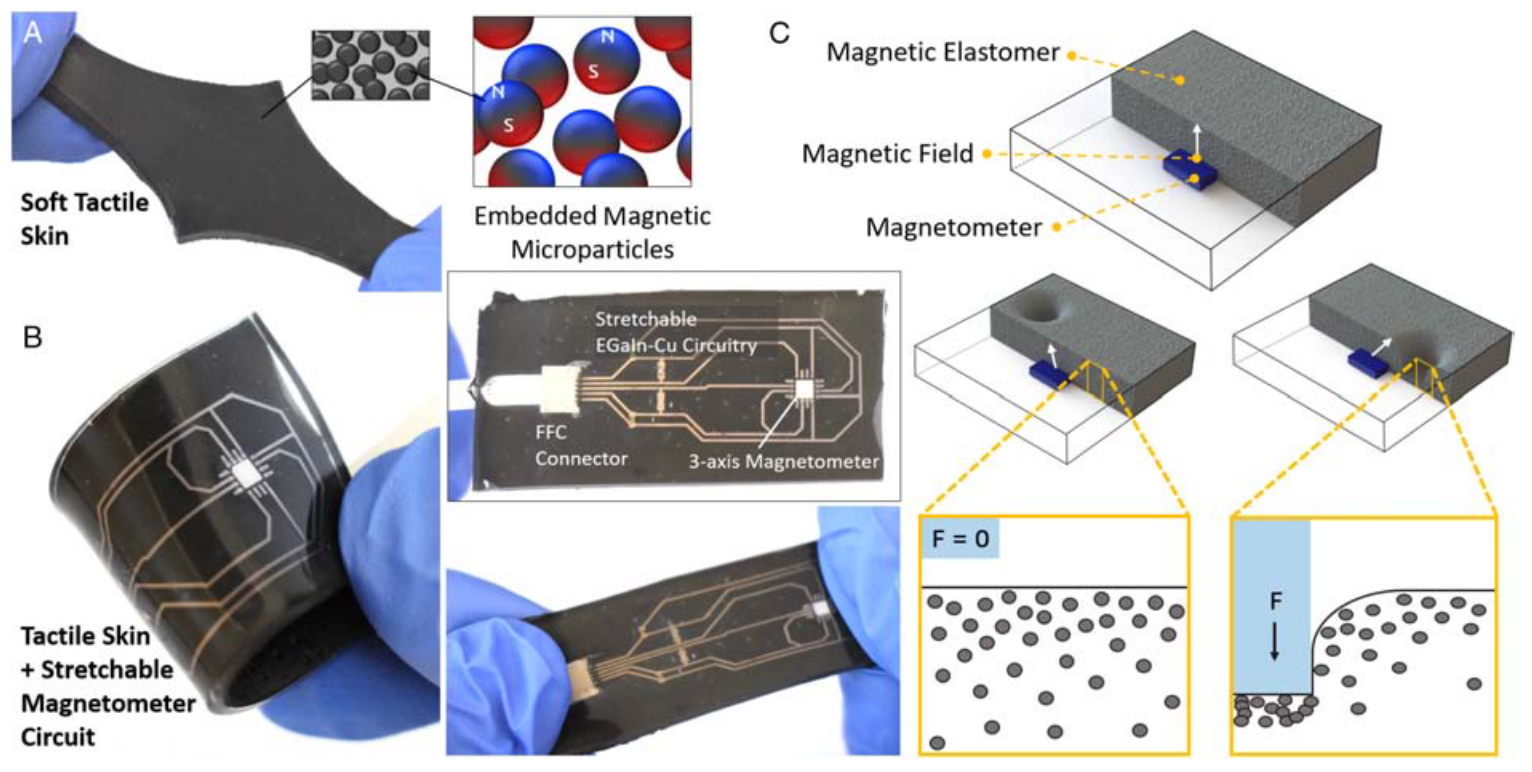

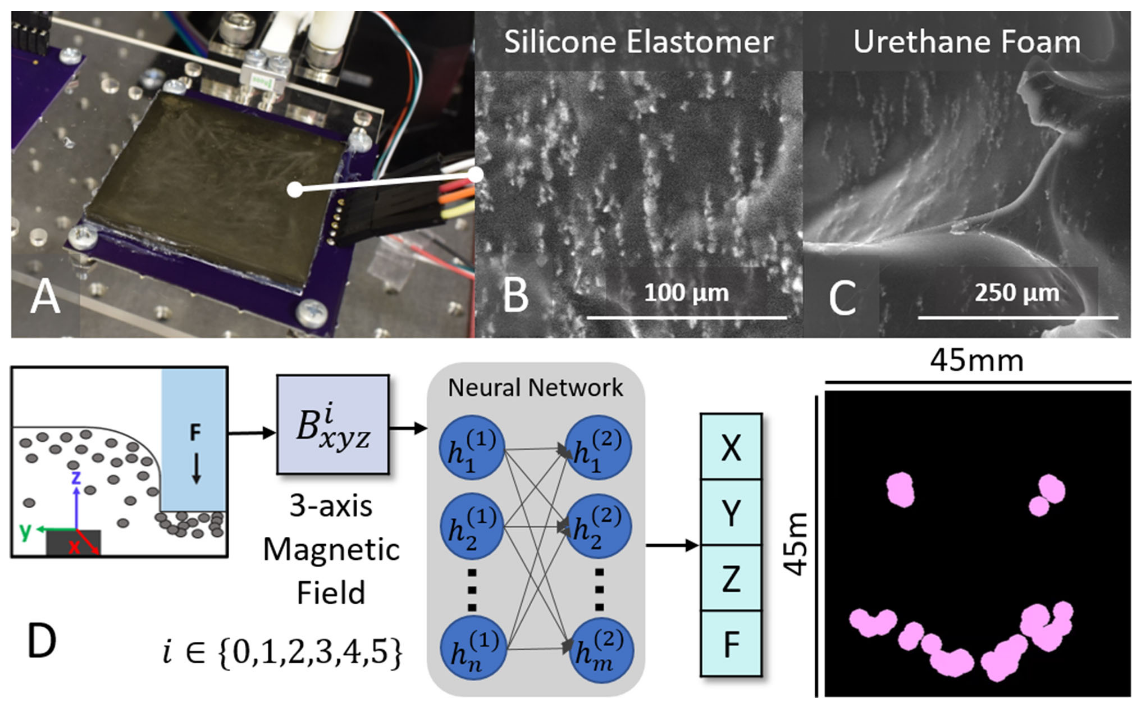

Soft tactile skins can provide an in-depth understanding of contact location and force through a soft and deformable interface. However, widespread implementation of soft robotic sensing skins remains limited due to non-scalable fabrication techniques, lack of customization, and complex integration requirements. In this work, we demonstrate magnetic composites fabricated with two different matrix materials, a silicone elastomer and urethane foam, that can be used as continuous tactile surfaces for single-point contact localization. Building upon previous work, we increased the sensing area from a 15 mm^2 grid to a 40 mm^2 continuous surface. Additionally, new preprocessing methods for the raw magnetic field data, in conjunction with the use of a neural network, enable rapid location and force estimation in free space. We report an average localization of 1 mm^3 for the silicone surface and 2 mm^3 for the urethane foam. Our approach to soft sensing skins addresses the need for tactile soft surfaces that are simple to fabricate and integrate, customizable in shape and material, and usable in both soft and hybrid robotic systems.

IEEE Robotics and Automation Letters (RA-L), Mar 2020

|

|

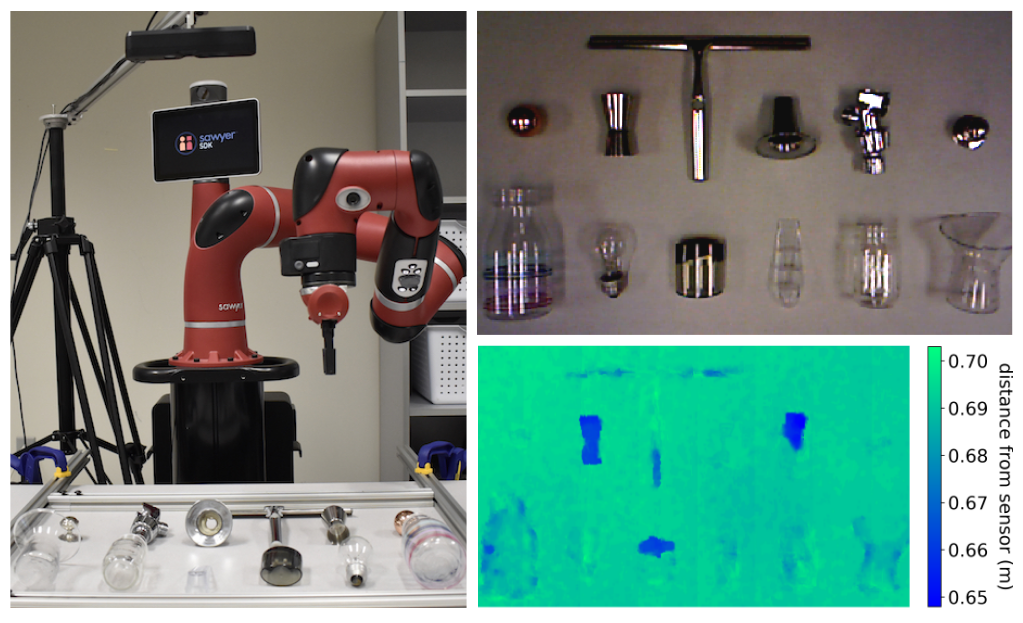

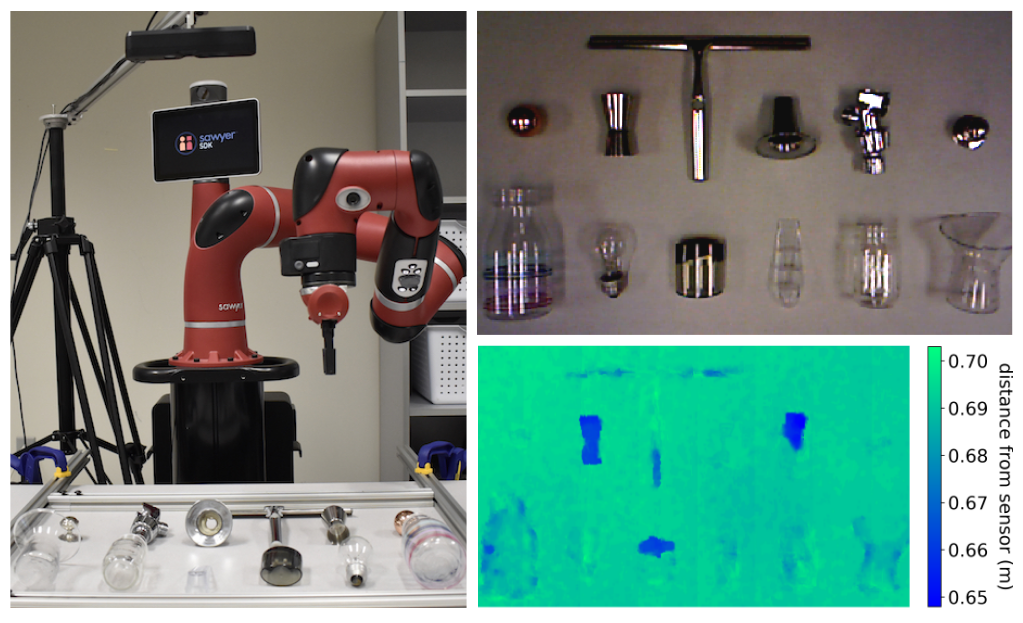

Multi-Modal Transfer Learning for Grasping Transparent and Specular Objects

Thomas Weng, Amith Pallankize, Yimin Tang, Oliver Kroemer, and David Held

@article{9001238,

author={Weng, Thomas and Pallankize, Amith and Tang, Yimin and Kroemer, Oliver and Held, David},

journal={IEEE Robotics and Automation Letters},

title={Multi-Modal Transfer Learning for Grasping Transparent and Specular Objects},

year={2020},

volume={5},

number={3},

pages={3791-3798},

keywords={Grasping;Robot sensing systems;Training;Robustness;Data models;Perception for grasping and manipulation;grasping;RGB-D perception},

doi={10.1109/LRA.2020.2974686}

}

State-of-the-art object grasping methods rely on depth sensing to plan robust grasps, but commercially available depth sensors fail to detect transparent and specular objects. To improve grasping performance on such objects, we introduce a method for learning a multi-modal perception model by bootstrapping from an existing uni-modal model. This transfer learning approach requires only a pre-existing uni-modal grasping model and paired multi-modal image data for training, forgoing the need for ground-truth grasp success labels or real grasp attempts. Our experiments demonstrate that our approach is able to reliably grasp transparent and reflective objects.

IEEE Robotics and Automation Letters (RA-L), Feb 2020

|

|

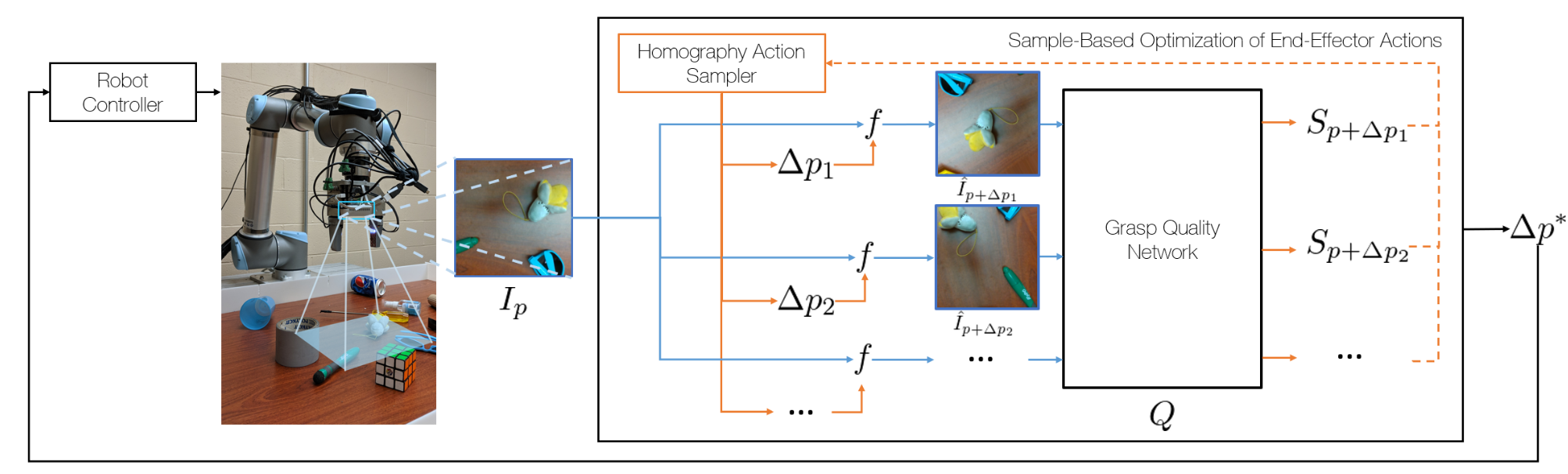

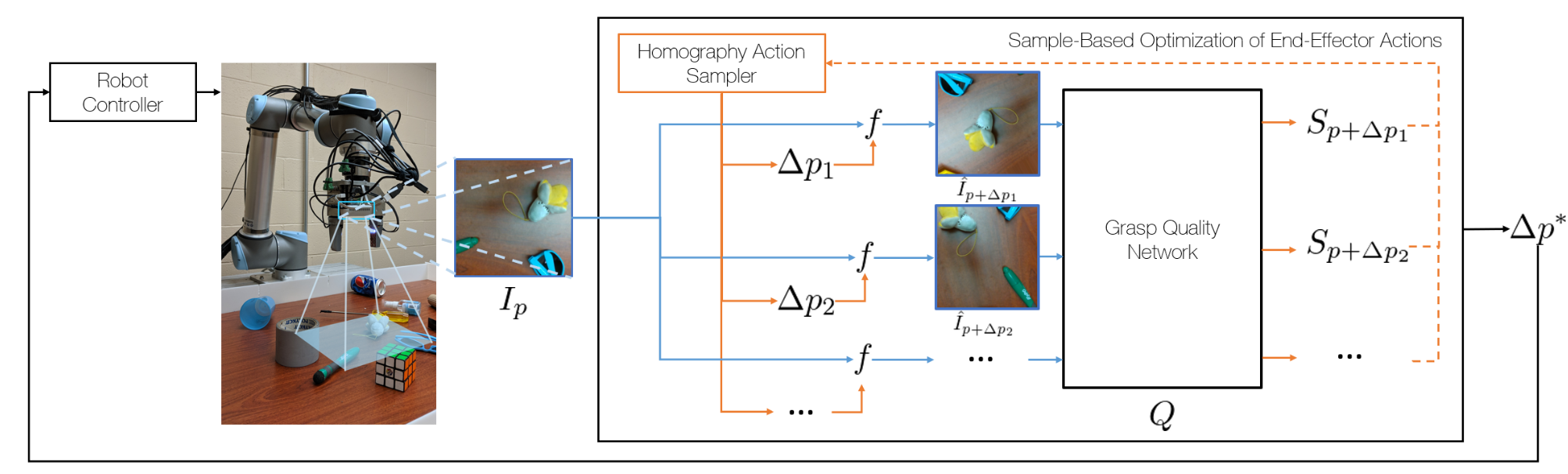

Homography-Based Deep Visual Servoing Methods for Planar Grasps

Austin S. Wang, Wuming Zhang, Daniel Troniak, Jacky Liang, and Oliver Kroemer

@conference{Wang-2019-118447,

author = {Austin S. Wang and Wuming Zhang and Daniel Troniak and Jacky Liang and Oliver Kroemer},

title = {Homography-Based Deep Visual Servoing Methods for Planar Grasps},

booktitle = {Proceedings of (IROS) IEEE/RSJ International Conference on Intelligent Robots and Systems},

year = {2019},

month = {November},

pages = {6570 - 6577},

}

We propose a visual servoing framework for learning to improve grasps of objects. RGB and depth images from grasp attempts are collected using an automated data collection process. The data is then used to train a Grasp Quality Network (GQN) that predicts the outcome of grasps from visual information. A grasp optimization pipeline uses homography models with the trained network to optimize the grasp success rate. We evaluate and compare several algorithms for adjusting the current gripper pose based on the current observation from a gripper-mounted camera to perform visual servoing. Evaluations in both simulated and hardware environments show considerable improvement in grasp robustness with models trained using less than 30K grasp trials. Success rates for grasping novel objects unseen during training increased from 18.5% to 81.0% in simulation, and from 17.8% to 78.0% in the real world.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Nov 2019

|

|

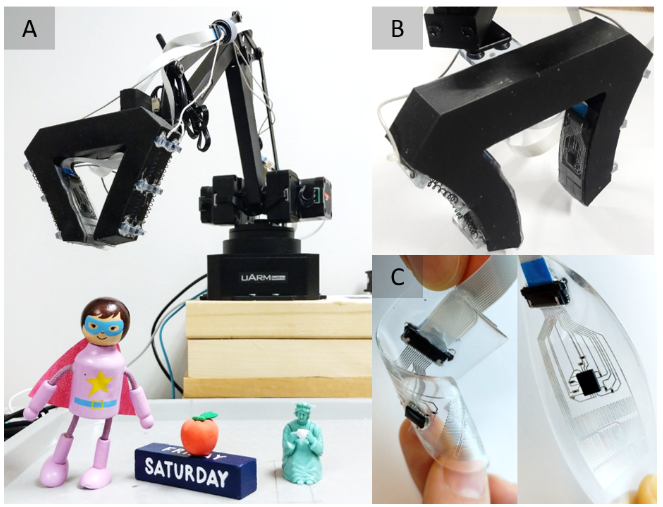

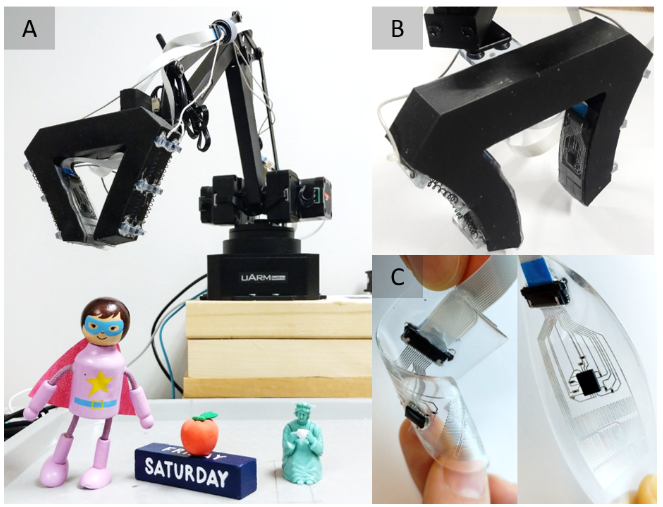

Predicting Grasp Success with a Soft Sensing Skin and Shape-Memory Actuated Gripper

Julian Zimmer, Tess Hellebrekers, Tamim Asfour, Carmel Majidi, and Oliver Kroemer

@conference{Zimmer-2019-118478,

author = {Julian Zimmer and Tess Hellebrekers and Tamim Asfour and Carmel Majidi and Oliver Kroemer},

title = {Predicting Grasp Success with a Soft Sensing Skin and Shape-Memory Actuated Gripper},

booktitle = {Proceedings of (IROS) IEEE/RSJ International Conference on Intelligent Robots and Systems},

year = {2019},

month = {November},

pages = {7120 - 7127},

}

Tactile sensors have been increasingly used to support rigid robot grippers in object grasping and manipulation. However, rigid grippers are often limited in their ability to handle compliant, delicate, or irregularly shaped objects. In recent years, grippers made from soft and flexible materials have become increasingly popular for certain manipulation tasks, e.g., grasping, due to their ability to conform to the object shape without the need for precise control. Although promising, such soft robot grippers currently suffer from the lack of available sensing modalities. In this work, we introduce a soft and stretchable sensing skin and incorporate it into the two fingers of a shape-memory actuated soft gripper. The on-board sensing skin includes a 9-axis inertial measurement unit (IMU) and five discrete pressure sensors per finger. We use this sensorized soft gripper to study grasp success and stability of over 2585 grasps with various objects using several machine learning methods. Our experiments show that LSTMs were the most accurate predictors of grasp success and stability, compared to SVMs, FFNNs, and ST-HMP. We also evaluated the effects on performance of each sensor’s data, and the success rates for individual objects. The results show that the accelerometer data of the IMUs has the largest contribution to the overall grasp prediction, which we attribute to its ability to detect precise movements of the gripper during grasping.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Nov 2019

|

|

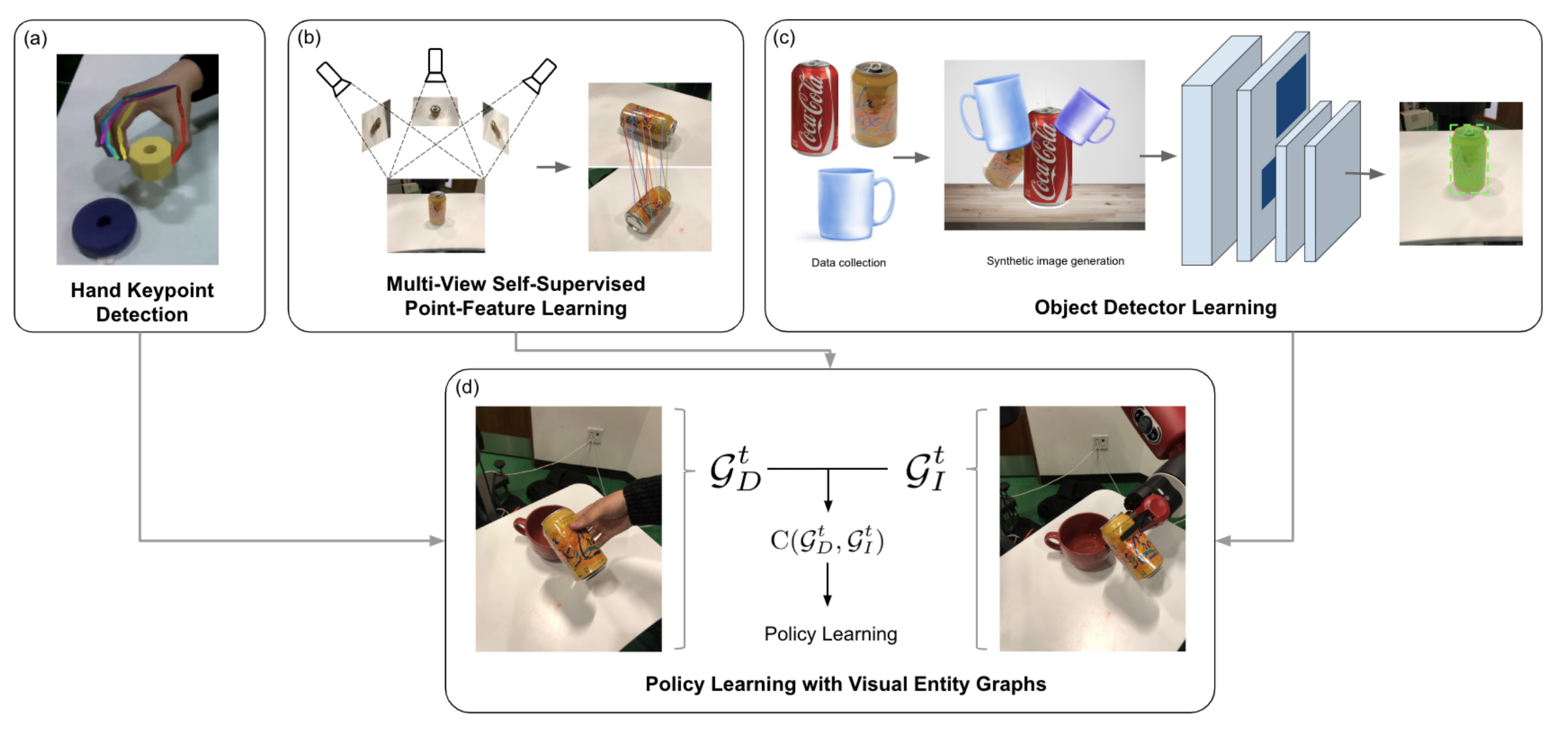

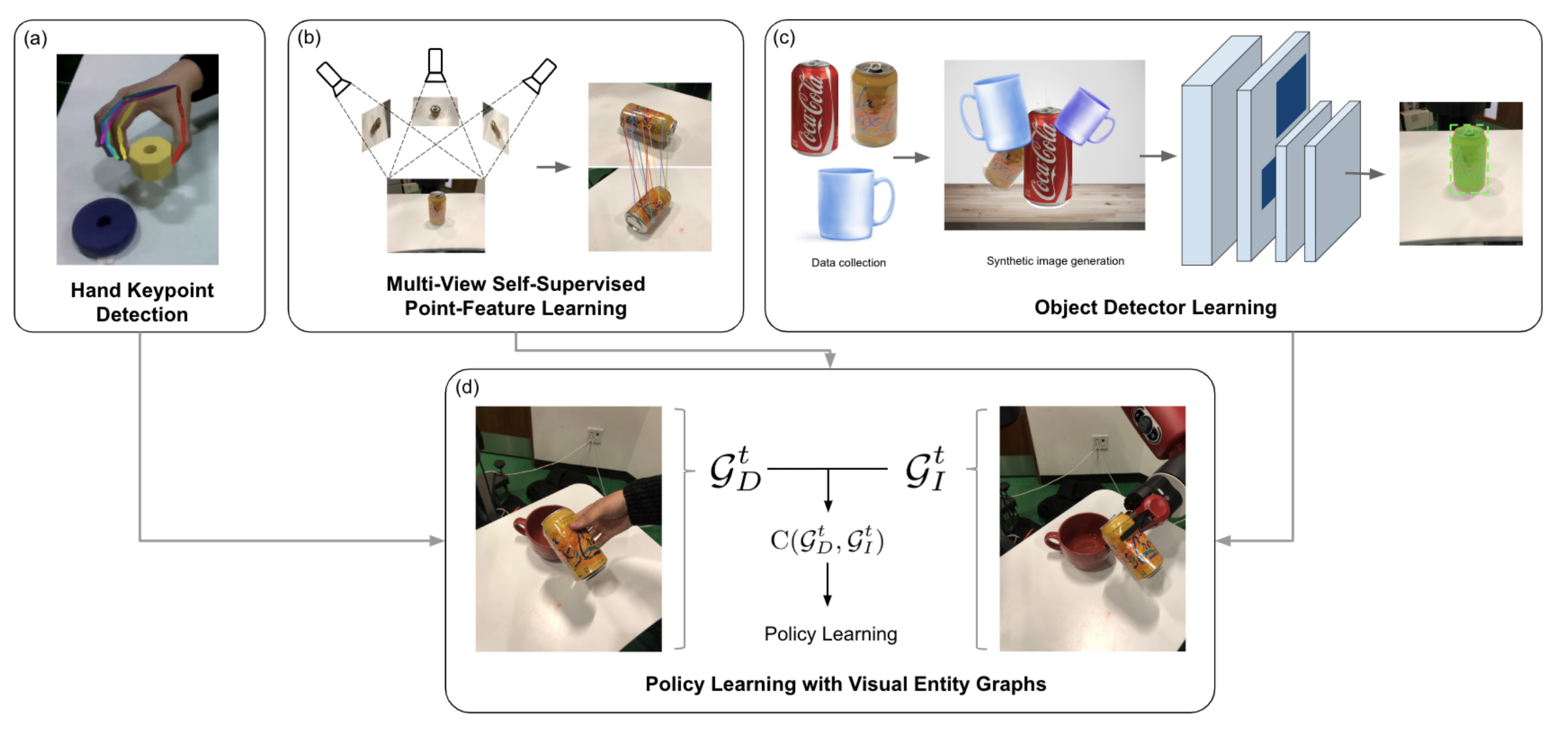

Graph-Structured Visual Imitation

Maximilian Sieb*, Zhou Xian*, Audrey Huang, Oliver Kroemer, and Katerina Fragkiadaki

@conference{Sieb-2019-118476,

author = {Maximilian Sieb and Zhou Xian and Audrey Huang and Oliver Kroemer and Katerina Fragkiadaki},

title = {Graph-Structured Visual Imitation},

booktitle = {Proceedings of (CoRL) Conference on Robot Learning},

year = {2019},

month = {October},

pages = {979 - 989},

}

We cast visual imitation as a visual correspondence problem. Our robotic agent is rewarded when its actions result in better matching of relative spatial configurations for corresponding visual entities detected in its workspace and the teacher’s demonstration. We build upon recent advances in Computer Vision, such as human finger keypoint detectors, object detectors trained on-the-fly with synthetic augmentations, and point detectors supervised by viewpoint changes and learn multiple visual entity detectors for each demonstration without human annotations or robot interactions. We empirically show that the proposed factorized visual representations of entities and their spatial arrangements drive successful imitation of a variety of manipulation skills within minutes, using a single demonstration and without any environment instrumentation. It is robust to background clutter and can effectively generalize across environment variations between demonstrator and imitator, greatly outperforming unstructured non-factorized full-frame CNN encodings of previous works.

Conference on Robot Learning (CoRL), Oct 2019

|

|

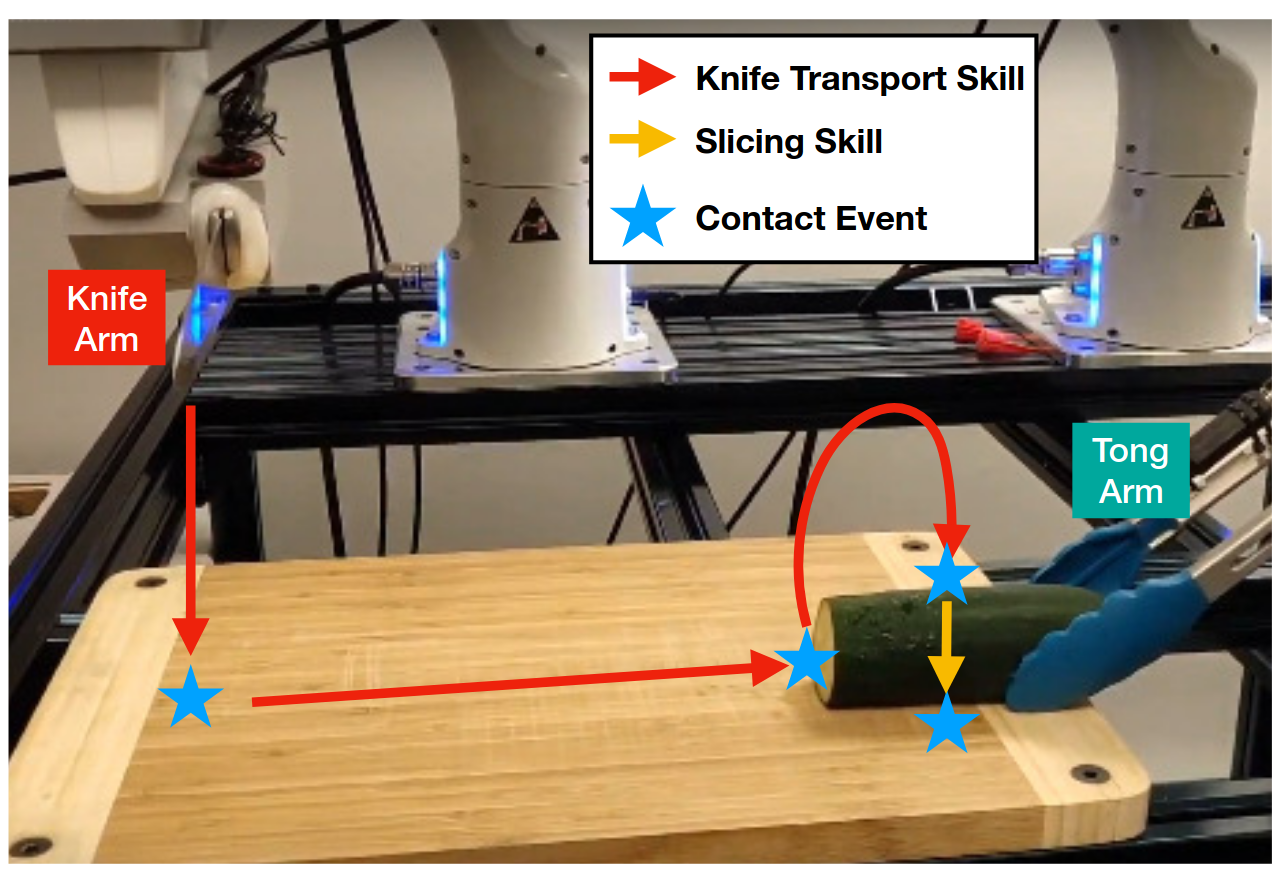

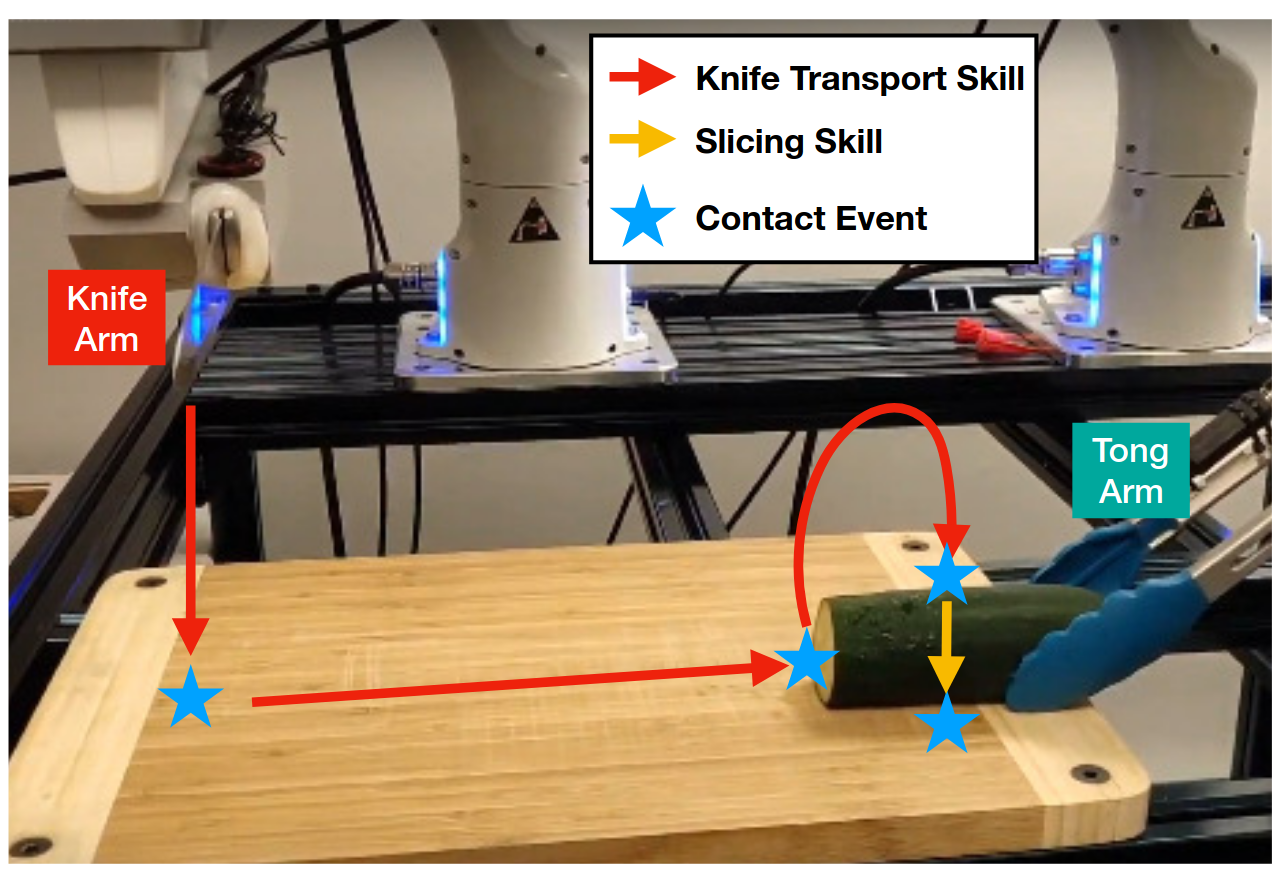

Leveraging Multimodal Haptic Sensory Data for Robust Cutting

Kevin Zhang, Mohit Sharma, Manuela Veloso, and Oliver Kroemer

@conference{Zhang-2019-118480,

author = {Kevin Zhang and Mohit Sharma and Manuela Veloso and Oliver Kroemer},

title = {Leveraging Multimodal Haptic Sensory Data for Robust Cutting},

booktitle = {Proceedings of IEEE-RAS International Conference on Humanoid Robots (Humanoids '19)},

year = {2019},

month = {October},

pages = {409 - 416},

}

Cutting is a common form of manipulation when working with divisible objects such as food, rope, or clay. Cooking in particular relies heavily on cutting to divide food items into desired shapes. However, cutting food is a challenging task due to the wide range of material properties exhibited by food items. Due to this variability, the same cutting motions cannot be used for all food items. Sensations from contact events, e.g., when placing the knife on the food item, will also vary depending on the material properties, and the robot will need to adapt accordingly. In this paper, we propose using vibrations and force-torque feedback from the interactions to adapt the slicing motions and monitor for contact events. The robot learns neural networks for performing each of these tasks and generalizing across different material properties. By adapting and monitoring the skill executions, the robot is able to reliably cut through more than 20 different types of food items and even detect whether certain food items are fresh or old.

IEEE-RAS International Conference on Humanoid Robots (Humanoids), Oct 2019

|

|

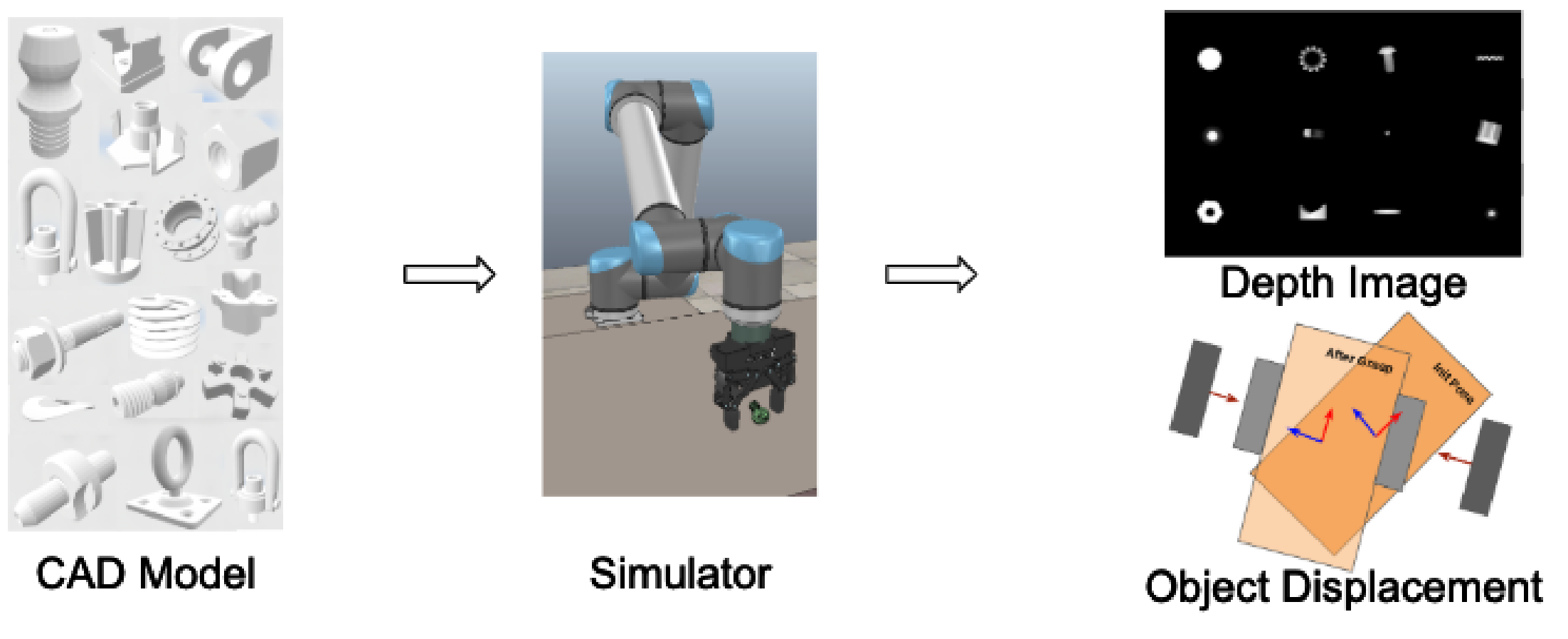

Toward Precise Robotic Grasping by Probabilistic Post-grasp Displacement Estimation

Jialiang Zhao, Jacky Liang, and Oliver Kroemer

@conference{Zhao-2019-116636,

author = {Jialiang Zhao and Jacky Liang and Oliver Kroemer},

title = {Toward Precise Robotic Grasping by Probabilistic Post-grasp Displacement Estimation},

booktitle = {Proceedings of 12th International Conference on Field and Service Robotics (FSR '19)},

year = {2019},

month = {August},

pages = {131 - 144},

publisher = {Springer},

keywords = {robotic grasping, self-supervised learning},

}

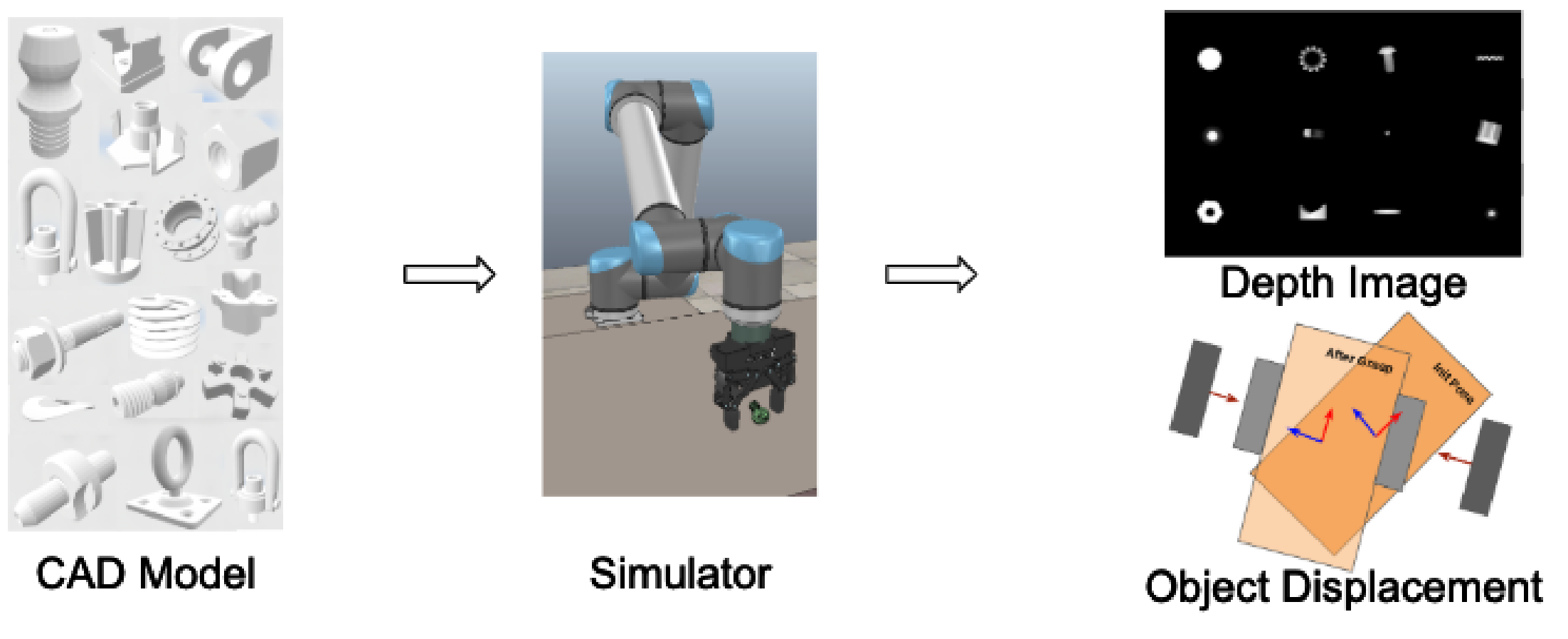

Precise robotic grasping is important for many industrial applications, such as assembly and palletizing, where the location of the object needs to be controlled and known. However, achieving precise grasps is challenging due to noise in sensing and control, as well as unknown object properties. We propose a method to plan robotic grasps that are both robust and precise by training two convolutional neural networks: one to predict the robustness of a grasp and another to predict a distribution of post-grasp object displacements. Our networks are trained with depth images in simulation on a dataset of over 1000 industrial parts and were successfully deployed on a real robot without having to be further fine-tuned. The proposed displacement estimator achieves mean prediction errors of 0.68 cm and 3.42° on novel objects in real-world experiments.

Field and Service Robotics (FSR), Aug 2019

|

|

Soft Magnetic Skin for Continuous Deformation Sensing

Tess Hellebrekers, Oliver Kroemer, and Carmel Majidi

@article{Hellebrekers-2019-118635,

author = {Tess Hellebrekers and Oliver Kroemer and Carmel Majidi},

title = {Soft Magnetic Skin for Continuous Deformation Sensing},

journal = {Advanced Intelligent Systems},

year = {2019},

month = {July},

volume = {1},

number = {4},

}

Recent progress in soft‐matter sensors has shown improved fabrication techniques, resolution, and range. However, scaling up these sensors into an information‐rich tactile skin remains largely limited by designs that require a corresponding increase in the number of wires to support each new sensing node. To address this, a soft tactile skin that can estimate force and localize contact over a continuous 15 mm² area with a single integrated circuit and four output wires is introduced. The skin is composed of silicone elastomer loaded with randomly distributed magnetic microparticles. Upon deformation, the magnetic particles change position and orientation with respect to an embedded magnetometer, resulting in a change in the net measured magnetic field. Two experiments are reported to calibrate and estimate both location and force of surface contact. The classification algorithms can localize pressure with an accuracy of >98% on both grid and circle patterns. Regression algorithms can localize pressure to a 3 mm² area on average. This proof‐of‐concept sensing skin addresses the increasing need for a simple‐to‐fabricate, quick‐to‐integrate, and information‐rich tactile surface for use in robotic manipulation, soft systems, and biomonitoring.

Advanced Intelligent Systems, July 2019

|

|

Learning Robust Manipulation Strategies with Multimodal State Transition Models and Recovery Heuristics

Austin S. Wang and Oliver Kroemer

@conference{Wang-2019-112298,

author = {Austin S. Wang and Oliver Kroemer},

title = {Learning Robust Manipulation Strategies with Multimodal State Transition Models and Recovery Heuristics},

booktitle = {Proceedings of (ICRA) International Conference on Robotics and Automation},

year = {2019},

month = {May},

pages = {1309 - 1315},

}

Robots are prone to making mistakes when performing manipulation tasks in unstructured environments. Robust policies are thus needed to not only avoid mistakes but also to recover from them. We propose a framework for increasing the robustness of contact-based manipulations by modeling the task structure and optimizing a policy for selecting skills and recovery skills. A multimodal state transition model is acquired based on the contact dynamics of the task and the observed transitions. A policy is then learned from the model using reinforcement learning. The policy is incrementally improved by expanding the action space by generating recovery skills with a heuristic. Evaluations on three simulated manipulation tasks demonstrate the effectiveness of the framework. The robot was able to complete the tasks despite multiple contact state changes and errors encountered, increasing the success rate averaged across the tasks from 70.0% to 95.3%.

International Conference on Robotics and Automation (ICRA), May 2019

|

|

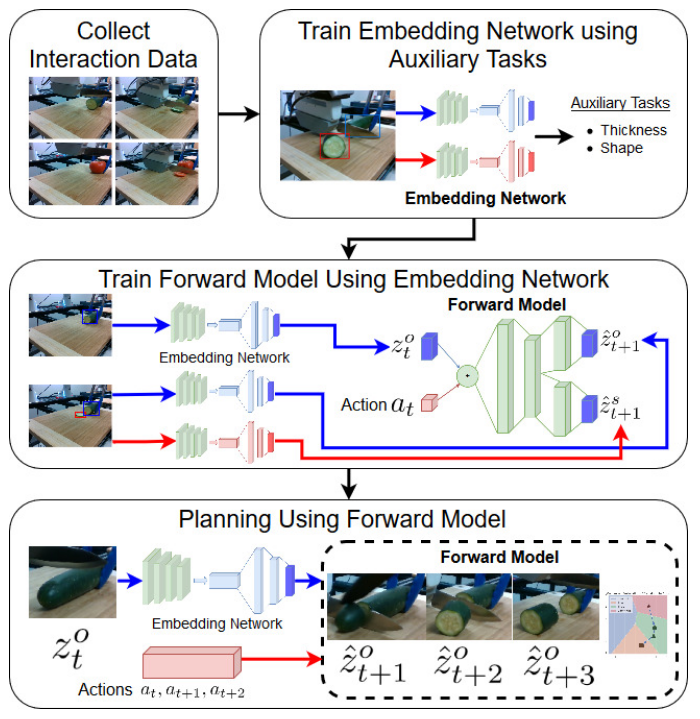

Learning Semantic Embedding Spaces for Slicing Vegetables

Mohit Sharma, Kevin Zhang, and Oliver Kroemer

@article{sharma2019learning,

title={Learning semantic embedding spaces for slicing vegetables},

author={Sharma, Mohit and Zhang, Kevin and Kroemer, Oliver},

journal={arXiv preprint arXiv:1904.00303},

year={2019}

}

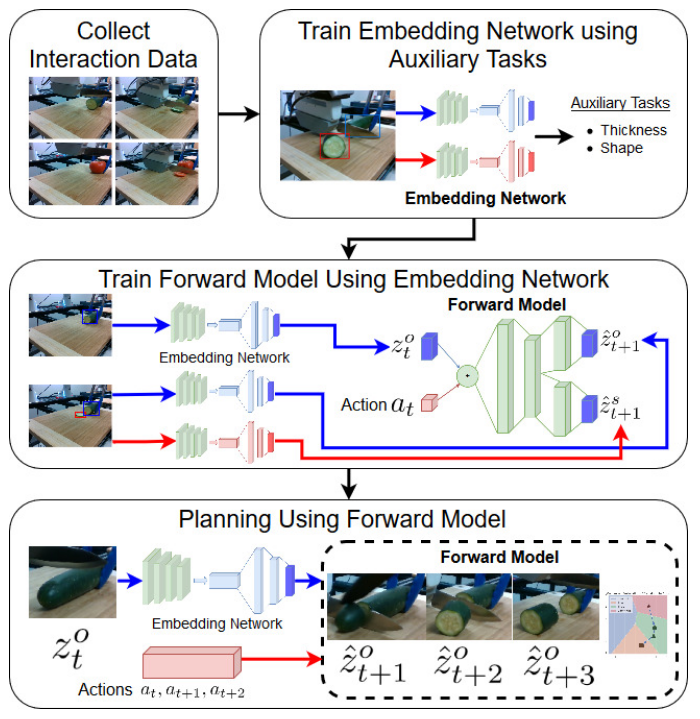

In this work, we present an interaction-based approach to learn semantically rich representations for the task of slicing vegetables. Unlike previous approaches, we focus on object-centric representations and use auxiliary tasks to learn rich representations using a two-step process. First, we use simple auxiliary tasks, such as predicting the thickness of a cut slice, to learn an embedding space which captures object properties that are important for the task of slicing vegetables. In the second step, we use these learned latent embeddings to learn a forward model. Learning a forward model allows us to plan online in the latent embedding space and forces our model to improve its representations while performing the slicing task. To show the efficacy of our approach, we perform experiments on two different vegetables: cucumbers and tomatoes. Our experimental evaluation shows that our method is able to capture important semantic properties for the slicing task, such as the thickness of the vegetable being cut. We further show that by using our learned forward model, we can plan for the task of vegetable slicing.

arXiv, Mar 2019

|

|

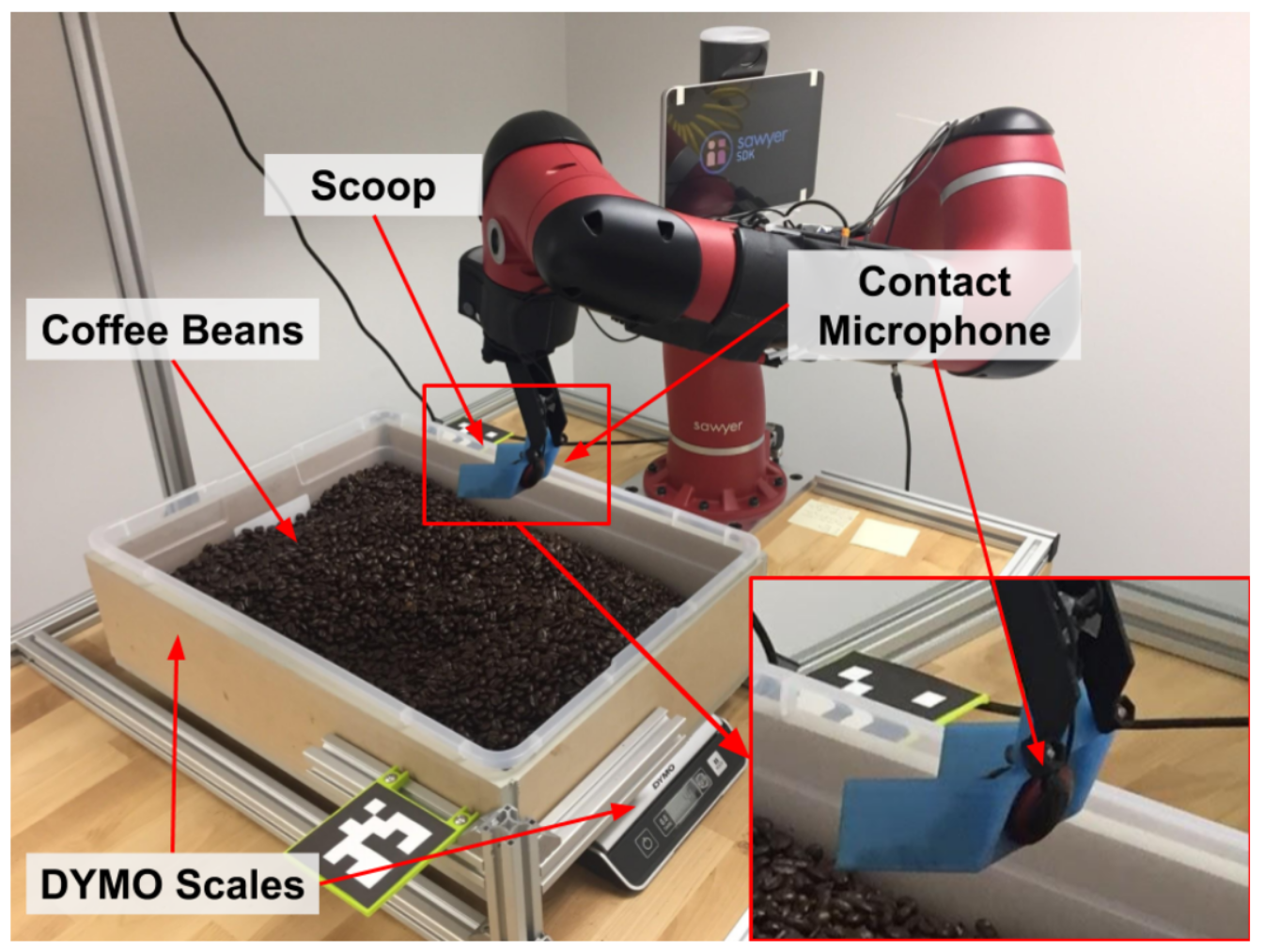

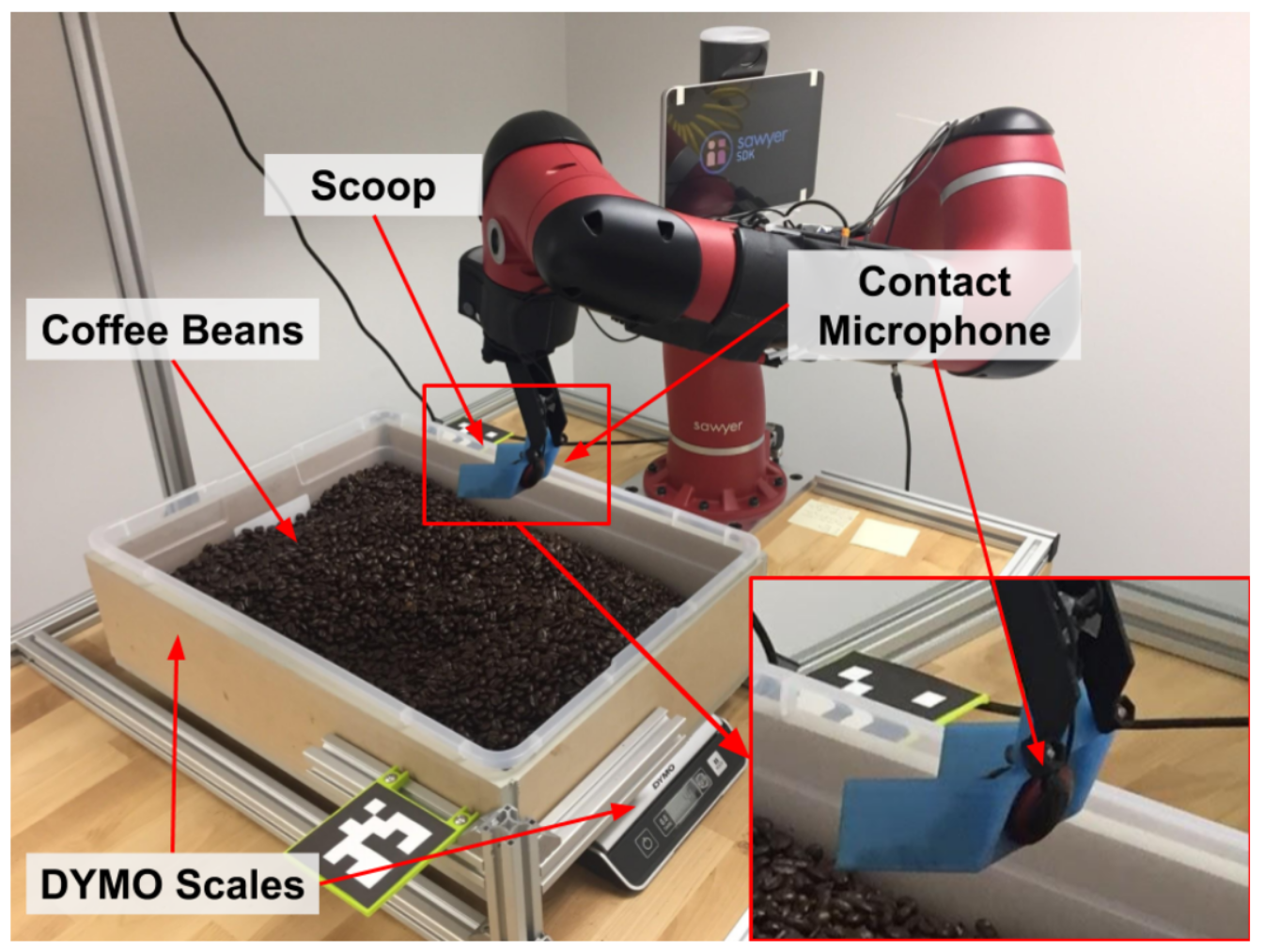

Learning Audio Feedback for Estimating Amount and Flow of Granular Material

Samuel Clarke, Travers Rhodes, Christopher Atkeson, and Oliver Kroemer

@conference{Clarke-2018-110446,

author = {Samuel Clarke and Travers Rhodes and Christopher Atkeson and Oliver Kroemer},

title = {Learning Audio Feedback for Estimating Amount and Flow of Granular Material},

booktitle = {Proceedings of (CoRL) Conference on Robot Learning},

year = {2018},

month = {October},

pages = {529 - 550},

publisher = {Proceedings of Machine Learning Research},

}